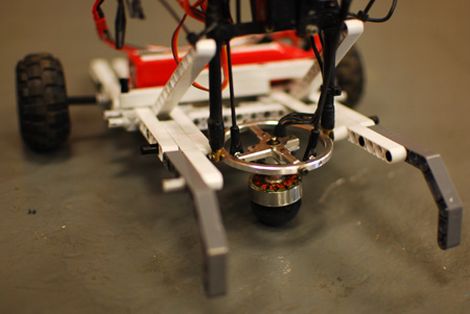

Bradley University grad student [Curtis Boirum] has built a robot with a drive system we’re guessing you have never seen before. A single motor drives its hemispherical omnidirectional gimbaled wheel, propelling the robot across the floor at amazing speeds and with uncanny agility.

The robot uses a simple two-axis gimbal for movement, which houses a small brushless RC airplane motor. The motor spins a hemispherical rubber wheel at high speed, and a pair of RC servos tilts the gimbal to send the robot in any direction at the flick of a switch. Tilting the gimbal changes which side of the wheel touches the ground, and with it the effective gear reduction, eliminating the need for a mechanical transmission or traditional steering mechanism.
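To make the "gear reduction" idea concrete, here is a rough sketch of the geometry as we understand it, not [Curtis]'s own code or math. It assumes an ideal hemisphere that rolls without slipping, a gimbal that applies roll about the x axis and then pitch about the y axis, and made-up function names and sign conventions. With the spin axis vertical, the wheel touches the floor at its pole and produces no thrust; tilting it moves the contact point out to a radius of R·sin(tilt), so more tilt means more ground speed for the same motor speed.

```python
import math


def effective_radius(wheel_radius_m, roll_rad, pitch_rad):
    """Distance from the spin axis to the ground contact point.

    Assumption: the tilt of the spin axis away from vertical satisfies
    cos(tilt) = cos(roll) * cos(pitch) for a roll-then-pitch gimbal.
    """
    cos_tilt = math.cos(roll_rad) * math.cos(pitch_rad)
    sin_tilt = math.sqrt(max(0.0, 1.0 - cos_tilt ** 2))
    return wheel_radius_m * sin_tilt


def ground_velocity(motor_rpm, wheel_radius_m, roll_rad, pitch_rad):
    """Ideal (no-slip) robot velocity (vx, vy) in m/s.

    Hypothetical model: the velocity is omega * R * (a x z_hat), where a
    is the unit spin axis and z_hat points straight up. Signs depend on
    how the motor and servos are actually mounted.
    """
    omega = motor_rpm * 2.0 * math.pi / 60.0  # rad/s
    # Horizontal components of the spin axis after roll, then pitch.
    ax = math.cos(roll_rad) * math.sin(pitch_rad)
    ay = -math.sin(roll_rad)
    vx = omega * wheel_radius_m * ay
    vy = -omega * wheel_radius_m * ax
    return vx, vy


if __name__ == "__main__":
    # Illustrative numbers only, not the real robot's specs.
    for tilt_deg in (0, 5, 15, 30):
        vx, vy = ground_velocity(6000, 0.05, 0.0, math.radians(tilt_deg))
        print(tilt_deg, "deg tilt ->", round(math.hypot(vx, vy), 2), "m/s")
```

In this model the two servo angles set both heading and speed at once: the direction of tilt picks the direction of travel, and the amount of tilt acts like a continuously variable gear ratio. A real rubber wheel will slip and deform, so treat the numbers as an upper bound at best.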

While he originally thought that he had invented the concept, [Curtis] found that the technology is nearly 100 years old and that most people had simply forgotten about it. We’re pretty sure people will remember it this time around. How could you not, after watching the demo video we have embedded below?

We think it’s a great concept, and we can’t wait to see what other robot builders do with this technology.

[via Gizmodo]
