We make no claims to be an expert on anything, but we do know that rule number one of working with big, expensive, mission-critical equipment is: Don’t break the big, expensive, mission-critical equipment. Unfortunately, though, that’s just what happened to the Deep Space Network’s 70-meter dish antenna at Goldstone, California. NASA announced the outage this week, but the accident that damaged the dish occurred much earlier, in mid-September. DSS-14, as the antenna is known, is a vital part of the Deep Space Network, which uses huge antennas at three sites (Goldstone, Madrid, and Canberra) to stay in touch with satellites and probes from the Moon to the edge of the solar system. The three sites are located roughly 120 degrees apart on the globe, which gives the network full coverage of the sky regardless of the local time.

training: 20 Articles

Training A Self-Driving Kart

There are certain tasks that humans perform every day that are notoriously difficult for computers to figure out. Identifying objects in pictures, for example, seems fairly straightforward, but computers have only managed it with any semblance of accuracy in the last few years. Even then, it can’t be done without huge amounts of computing resources. Similarly, driving a car is a surprisingly complex task that even companies promising full self-driving vehicles haven’t been able to deliver despite working on the problem for over a decade now. [Austin] demonstrates this difficulty in his latest project, which adds self-driving capabilities to a small go-kart.

[Austin] had been working on this project at the local park but grew tired of packing up all his gear when he wanted to work on his machine-learning algorithms. So he took all the self-driving equipment off of the first kart and incorporated it into a smaller kart with a very small turning radius so he could develop it in his shop.

He laid down some tape on the floor to create the track and then set up the vehicle to learn how to drive by watching and gathering data. The model, a convolutional neural network, is trained on this data. The only inputs that the model gets are images from cameras at the front of the kart. At first, it could only change the steering angle, with [Austin] controlling the throttle to prevent crashes. Eventually, he gave it control of the throttle as well, and the kart behaves well except at the fastest speeds.
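To make the “convolutional” part a little more concrete, here is a minimal sketch of the basic operation a convolutional layer performs: sliding a small kernel across an image. This is not [Austin]’s code; the tiny `frame`, the `edge_kernel`, and the `conv2d` helper are all made up for illustration, and a real network learns many such kernels rather than using a hand-picked one.

```python
def conv2d(image, kernel):
    """Valid-mode 2D cross-correlation, the core operation of a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# Hypothetical 4x6 grayscale frame: dark floor (0) with a bright tape stripe (9).
frame = [
    [0, 0, 9, 9, 0, 0],
    [0, 0, 9, 9, 0, 0],
    [0, 0, 9, 9, 0, 0],
    [0, 0, 9, 9, 0, 0],
]

# Hand-picked kernel that responds where brightness changes from left to right.
edge_kernel = [[-1, 1]]

response = conv2d(frame, edge_kernel)
print(response[0])  # strong positive and negative values at the two tape edges
```

The response is large only where floor meets tape, which is roughly the kind of feature a steering model has to pick out of each camera frame.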

There were plenty of challenges along the way, especially when compared to the models trained at the park; [Austin] correctly theorized that the cause of the hardship in the shop was a lack of contrast at the boundary between the track and any out-of-bounds areas. With a few tweaks to the track, as well as adding some wide-angle lenses to his cameras, he was able to get a model that works fairly well. Getting started on a project like this doesn’t have as high a barrier to entry as one might imagine, either. Take a look at this comprehensive open-source Python library for self-driving projects. If you want to start smaller, perhaps don’t start with a self-driving kart.

Maker Skill Trees Help You Level Up Your Craft

Hacking and making are great fun due to their open-ended nature, but being able to try anything can make the task of selecting your next project daunting. [Steph Piper] is here with her Maker Skill Trees to give you a map to leveling up your skills.

Featuring a grid of 73 hexagonal tiles per discipline, there’s plenty of inspiration for what to tackle next in your journey. The trees start with the basics at the bottom and progressively increase in difficulty as you move up the page. With over 50 trees to select from (so far), you can probably find something to help you become better at anything from 3D printing and modeling to entrepreneurship or woodworking.

Despite being spoiled for choice, if you’re disappointed there’s no tree for your particular interest (underwater basket weaving?), you can roll your own with the provided template and submit it for inclusion in the repository.

Want to get a jump on an AI Skill Tree? Try out these AI courses. Maybe you could use these to market yourself to potential employers or feel confident enough to strike out on your own?

[Thanks to Courtney for the tip!]


AI-Powered Snore Detector Shakes The Pillow So You Won’t

If you snore, you’ll probably find out about it from someone. An elbow to the ribs courtesy of your sleepless bedmate, the kids making fun of you at breakfast, or even the lady downstairs calling the cops might give you the clear sign that you rattle the rafters, and that it’s time to do something about it. But what if your snores are a bit more subtle, or you don’t have someone to urge you to roll over? In that case, this AI-powered haptic snore detector might be worth building.

The most distinctive characteristic of snoring is, of course, its sound, and that’s exactly what [Naveen Kumar] chose as a trigger. To differentiate between snoring and other nighttime sounds, [Naveen] chose an Arduino Nicla Voice sensor board, which sports a Syntiant NDP120 deep-learning processor and a built-in MEMS microphone. To generate a model that adequately represents the full tapestry of human snores, a publicly available snoring dataset — because of course that’s a thing — was used for training. Importantly, the training data included samples of non-snoring sounds, like sirens and thunder, as well as clips of legit snoring mixed with these other sounds. The model is trained with an online tool and downloaded onto the board; when it detects the sweet sound of sawing wood three times in a row, a haptic driver board vibrates the pillow as a gentle reminder to reposition. Watch it in action in the brief video below.
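The “three times in a row” rule is a simple way to keep a single misclassified sound from shaking the pillow. A minimal sketch of that logic, assuming each classifier result arrives as a plain boolean, might look like this; the `ConsecutiveTrigger` class and its names are hypothetical, not taken from [Naveen]’s firmware.

```python
class ConsecutiveTrigger:
    """Fires only after `required` positive detections in a row."""

    def __init__(self, required=3):
        self.required = required
        self.streak = 0

    def update(self, is_snore):
        """Feed one classification result; return True when the alert should fire."""
        if is_snore:
            self.streak += 1
        else:
            self.streak = 0  # any non-snore sound breaks the run
        if self.streak >= self.required:
            self.streak = 0  # start over so the next alert needs a fresh run
            return True
        return False


trigger = ConsecutiveTrigger(required=3)
results = [True, True, False, True, True, True, False]
fired = [trigger.update(r) for r in results]
print(fired)
```

In this made-up sequence, the first two detections are interrupted by another sound, so the alert fires only once the later run of three completes.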

Snoring is something that’s easy to make light of, but in all seriousness, it’s not something to be taken lightly. Hats off to [Naveen] for developing a tool like this, which just might let you know you’ve got a problem that bears a closer look by a professional. Although it might work better as a wearable rather than a pillow-shaker.


Making Medical Simulators Less Expensive With 3D Printing And Silicone

Medical training simulators are expensive, but important, pieces of equipment. [Decent Simulators] is designing simulators that can easily be replicated using Fused Deposition Modeling (FDM) printers and silicone molds to bring the costs down.

Each iteration of the simulators is sent out for testing by paramedics and doctors around the world, and feedback is integrated into the next revision. Because the trainers are designed to be easily replicated, parts can be replaced or repaired without much trouble, which can be critical for keeping personnel trained, especially in remote areas.

While not open source, some models are freely available on the [Decent Simulators] website, like wound packing trainers or wound prostheses, which could be great if you’re trying to get a head start on next year’s Halloween costumes. More complicated models will be on sale starting in January as either just the design files or a kit containing the files and the printed and/or silicone parts.

Interested in more medical hacks? Check out this Cyberpunk Prosthetic Eye or this Arduino Hearing Test Device.

Analog Tank Driving Simulator Patrols A Tiny Physical Landscape

How do you build a practical tank-driving simulator in the 1970s, when 3D computer-generated graphics are still just a fantasy of the future? If you’re a European tanker school, the solution is to use a large CNC machine to drive a camera around a miniature terrain model (German, translated). In the video after the break, [Tom Scott] takes it for a test drive.

Developed in France, the simulator provided a safer and more cost-effective way for teaching new trainees the basics of driving Centurion, Leopard 2, or Panzer 68 tanks. The trainee sits in a realistic “cockpit” mounted on a hydraulically-operated motion platform, with a TV screen in front of his face, which is connected to a camera mounted on a large gantry-style CNC platform.

The camera’s lens is mounted just above a pivoting metal foot which slides across the 12 m-long terrain model and sends its angle to the hydraulic system. It will even alter the tank’s handling based on its current position on the model to simulate different surfaces like dirt, snow, or asphalt.
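The real simulator measures the foot’s angle mechanically, but the geometry is easy to sketch in software. Assuming a hypothetical digital re-creation that knows the terrain height under the front and rear of the foot, the pitch to send to the motion platform could be estimated as below; the function name and the sample dimensions are invented for illustration.

```python
import math


def foot_pitch_degrees(height_front_m, height_rear_m, foot_length_m):
    """Pitch of a foot resting on two terrain points, in degrees (nose-up positive)."""
    rise = height_front_m - height_rear_m
    return math.degrees(math.atan2(rise, foot_length_m))


# Hypothetical foot 2 cm long, with the front resting 2 cm higher than the rear.
pitch = foot_pitch_degrees(0.02, 0.0, 0.02)
print(round(pitch, 1))
```

A flat patch of model terrain gives zero pitch, and a downhill slope gives a negative angle, which is the signal the hydraulics would need to tilt the cockpit.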

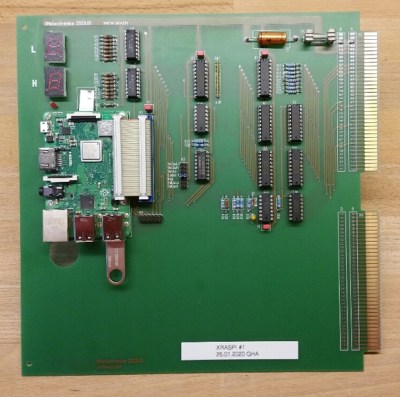

The last of these systems remained in use until 2004 at the military training center in Thun, Switzerland, before the Swiss Military Museum saved it from being scrapped. The original 70s computer, electronics, and hydraulics finally gave out, so the museum undertook a complete refurbishment of the system to return it to working order for museum visitors. The system was kept as original as possible, but parts for the original computer could not be found, so the computer was replaced with a Raspberry Pi and custom interface board.

Over three decades, these simulators probably trained a few thousand tank drivers, and even with limited technology did an excellent job of preparing trainees for the real thing. Besides providing training for operators, drivers and pilots, simulators are also just plain fun. We’ve seen some impressive home-built simulators, including an A-10 Warthog, an F-15 sim built from actual wreckage, and even a starship’s bridge.


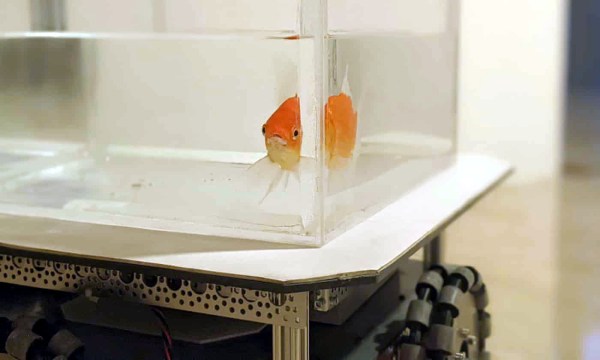

“So Long,” Said All The Tank-Driving Fish

Though some of us are heavily assisted by smartphone apps and delivery, humans don’t need GPS to find food. We know where the fridge is. The grocery store. The drive-thru. And we don’t really need a map to find shelter, in the sense that shelter is easily identifiable in a storm. You might say that our most important navigation skills are innate, at least when we’re within our normal environment. Drop us in another city and we can probably still identify viable overhangs, cafes, and food stalls.

The question is, do these navigational skills vary by species or environment? Or are the tools necessary to forage for food, meet mates, and seek shelter more universal? To test the waters of this question, Israeli researchers built a robot car and taught six fish to navigate successfully toward a target with a food reward. This experiment is one of domain transfer methodology, which is the exploration of whether a species can perform tasks outside its natural environment. Think of all the preparation that went into Vostok and Project Mercury.
