Git’s Large File System is a reasonable solution to a bit of a niche problem. How do you handle large binary files that need to go into a git repository? It might be pictures or video that is part of a project’s documentation, or even a demonstration dataset. Git-lfs’s solution is to replace the binary files with a text-based pointer to where the real file is hosted. That’s not important to understanding this vulnerability, though. The problem is that git-lfs will call the main git binary as part of its operation, and when it does so, the full path is not used. On a Unix system, that’s not a problem. The $PATH variable is used to determine where to look for binaries. When git is run, /usr/bin/git is automagically run. On a Windows system, however, executing a binary name without a path will first look in the current directory, and if a matching executable file is not found, only then will the standard locations be checked.

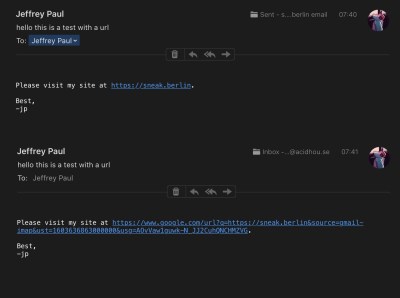

You may already see the problem. If a repository contains a git.exe, git.bat, or another git.* file that Windows thinks is executable, git-lfs will execute that file instead of the intended git binary. This means simply checking out a malicious repository gets you immediate code execution. A standard install of git for Windows, prior to 2.29.2.2, contains the vulnerable plugin by default, so go check that you’re updated!

Then remember that there’s one more wrinkle to this vulnerability. How closely do you check the contents of a git download before you run the next git command? Even with a patched git-lfs version, if you clone a malicious repository, then run any other git command, you still run the local git.* file. The real solution is pushing the local directory higher up the path chain. Continue reading “This Week In Security: Platypus, Git.bat, TCL TVs, And Lessons From Online Gaming”