Lucid dreaming is the state of becoming aware that one is dreaming while still within the dream. To what end? That awareness may allow one to influence the dream itself, and the possibilities are obvious and compelling enough that plenty of clever and curious people have taken an interest. Now there are some indications that VR might be a useful tool in helping people achieve lucid dreaming.

The research paper (Virtual reality training of lucid dreaming) is far from laying out a conclusive roadmap, but there’s enough there to make the case that VR is at least worth a look as a serious tool in the quest for lucid dreaming.

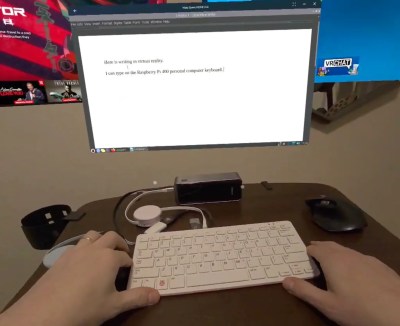

One method of using VR in this way hinges on the idea that engaging in immersive VR content can create mild dissociative experiences, and this can help guide and encourage users to perform “reality checks”. VR can help such reality checks become second nature (or at least more familiar and natural), which may help one to become aware of a dream state when it occurs.

Another method uses VR as a way to induce a mental state that is more conducive to lucid dreaming. As mentioned, engaging in immersive VR can induce mild dissociative experiences, so the idea is that VR can gradually guide one into a more receptive state before falling asleep. Since sleeping in VR is absolutely a thing, perhaps an enterprising hacker with a healthy curiosity about lucid dreaming might be inspired to experiment with combining them.

We’ve covered plenty of lucid dreaming hacks over the years, and there have even been serious efforts at enabling communication from within a dreaming state. If you ask us, that’s something just begging to be combined with VR.