Don’t let the name of the Open-TeleVision project fool you; it’s a framework for improving telepresence and making robotic teleoperation far more intuitive than it otherwise would be. It accomplishes this in part by taking advantage of the remarkable technology packed into modern VR headsets like the Apple Vision Pro and Meta Quest. There are loads of videos on the project page, many of which demonstrate successful teleoperation across vast distances.

Teleoperation of robotic effectors typically takes some getting used to. The camera views are unusual, the limbs don’t move the same way arms do, and intuitive human things like looking around to get a sense of where everything is don’t translate well.

To address this, researchers provided a user with a robot-mounted, real-time stereo video stream (through which the user can turn their head and look around normally) and mapped the user’s arm and hand movements to humanoid robotic counterparts. This provides the feedback needed to manipulate objects and perform tasks in a much more intuitive way. In short, when our eyes, bodies, and hands look and work more or less the way we expect, it turns out it’s far easier to perform tasks.

The research paper goes into detail about the different systems, but in essence, a stereo depth and RGB camera is perched on a 3D-printed gimbal atop a humanoid robot frame like the Unitree H1 equipped with high-dexterity hands. A VR headset takes care of displaying a real-time stereoscopic video stream and letting the user look around. The user’s tracked hand movements are mapped to the dexterous hands and fingers. This lets a person look at, manipulate, and handle things without in-depth training. Perhaps slower and more clumsily than they would like, but in an intuitive way all the same.

Interested in taking a closer look? The GitHub repository has the necessary code, and while most of us will never be mashing ADD TO CART on something like the Unitree H1, the reference design for a stereo camera streaming to a VR headset and mirroring head tracking with a two-motor gimbal looks like the sort of thing that would be useful for a telepresence project or two.
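To get a feel for what mirroring head tracking with a two-motor gimbal involves, here is a minimal sketch in Python. It is not taken from the project’s repository. It assumes the headset reports head orientation as a unit quaternion (w, x, y, z) in a frame where Z points up and X points forward, and that the gimbal accepts yaw and pitch targets in degrees. The function name `head_to_gimbal` and the travel limits are hypothetical, chosen only for illustration.

```python
import math

# Hypothetical mechanical limits for an illustrative two-motor gimbal (degrees).
YAW_LIMIT_DEG = 90.0
PITCH_LIMIT_DEG = 45.0


def clamp(value, low, high):
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def head_to_gimbal(w, x, y, z):
    """Convert a unit head-orientation quaternion into (yaw, pitch) targets.

    Assumes a Z-up, X-forward frame with yaw about Z and pitch about Y.
    Roll is discarded because a two-motor gimbal cannot reproduce it.
    """
    # Standard ZYX Euler extraction from a unit quaternion.
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    # Clamp before asin so rounding error cannot push the argument out of range.
    sin_pitch = clamp(2.0 * (w * y - z * x), -1.0, 1.0)
    pitch = math.asin(sin_pitch)

    # Keep the targets inside the assumed travel of each motor.
    yaw_deg = clamp(math.degrees(yaw), -YAW_LIMIT_DEG, YAW_LIMIT_DEG)
    pitch_deg = clamp(math.degrees(pitch), -PITCH_LIMIT_DEG, PITCH_LIMIT_DEG)
    return yaw_deg, pitch_deg
```

In a real build, the returned angles would be sent to the two motors on every tracking update, likely with some smoothing so the camera doesn’t jitter with every small head movement.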


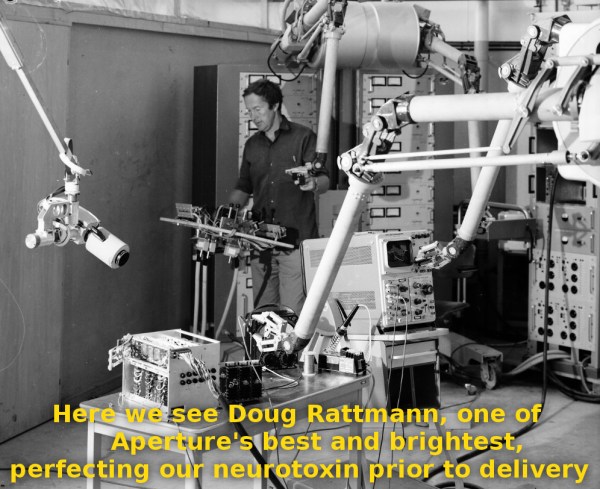

Funny captions weren’t the only thing in the comments though – the image tickled [
