Looks like we might have been a bit premature in our dismissal last week of the Sun’s potential for throwing a temper tantrum, as that’s exactly what happened when a G1 geomagnetic storm hit the planet early last week. To be fair, the storm was very minor (aurora visible down to the latitude of Calgary isn’t terribly unusual), but the odd thing about this storm was that it sort of snuck up on us. Solar scientists first thought it was a coronal mass ejection (CME), possibly related to the “monster sunspot” that had rapidly tripled in size and was being hyped up as some kind of planet killer. But it appears this sneak attack came from another, less-studied phenomenon, a co-rotating interaction region, or CIR. These sound a bit like eddy currents in the solar wind, which can bunch up plasma that can suddenly burst forth from the Sun, all without showing the usual telltale sunspots.

Then again, even people who study the Sun for a living don’t always seem to agree on what’s going on up there. Back at the beginning of Solar Cycle 25, NASA and NOAA, the National Oceanic and Atmospheric Administration, were calling for a relatively weak showing during our star’s eleven-year cycle, as recorded by the number of sunspots observed. But another model, developed by heliophysicists at the U.S. National Center for Atmospheric Research, predicted that Solar Cycle 25 could be among the strongest ever recorded. And so far, it looks like the latter group might be right. Where the NASA/NOAA model called for 37 sunspots in May of 2022, for example, the Sun actually threw up 97 — much more in line with what the NCAR model predicted. If the trend holds, the peak of the eleven-year cycle in April of 2025 might see over 200 sunspots a month.
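For a rough sense of how far off the weaker forecast was, here is a minimal sketch of the arithmetic using only the two May 2022 figures quoted above. The function name `overshoot` is our own invention for illustration, not anything from either forecasting group.

```python
def overshoot(predicted: float, observed: float) -> tuple[float, float]:
    """Return (ratio, percent_above) for an observed count versus a forecast.

    Hypothetical helper for illustration only; not part of any NASA, NOAA,
    or NCAR tooling.
    """
    ratio = observed / predicted
    percent_above = (observed - predicted) / predicted * 100
    return ratio, percent_above


# May 2022 figures quoted in the text: 37 predicted, 97 observed.
ratio, percent_above = overshoot(predicted=37, observed=97)
print(f"Observed count was {ratio:.2f}x the forecast ({percent_above:.0f}% above it)")
```

One month doesn't make a trend, of course, so treat this as a back-of-the-envelope comparison rather than a verdict on either model.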

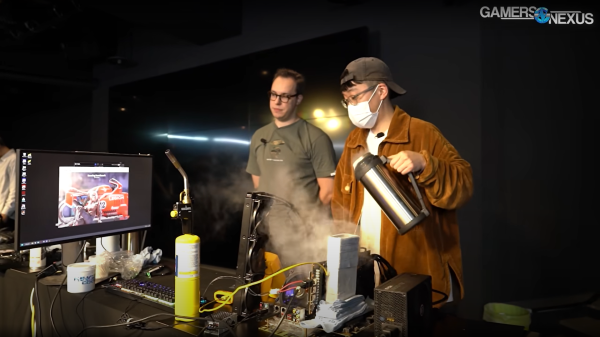

So, good news and bad news from the cryptocurrency world lately. The bad news is that cryptocurrency markets are crashing, with the flagship Bitcoin falling from its high of around $67,000 down to $20,000 or so, and looking like it might fall even further. But the good news is that’s put a bit of a crimp in the demand for NVIDIA graphics cards, as the economics of turning electricity into hashes starts to look a little less attractive. So if you’re trying to upgrade your gaming rig, that means there’ll soon be a glut of GPUs, right? Not so fast, maybe: at least one analyst has a different view, based mainly on the distribution of AMD and NVIDIA GPU chips in the market as well as how much revenue they each draw from crypto rather than from traditional uses of the chips. That analysis is aimed mainly at investors, though, so it doesn’t really matter to you if you’re just looking for a graphics card on the cheap.

Speaking of businesses, things are not looking too good for MakerGear. According to a banner announcement on their website, the supplier of 3D printers, parts, and accessories is scaling back operations, to the point where everything is being sold on an “as-is” basis with no returns. In a long post on “The Future of MakerGear,” founder and CEO Rick Pollack says the problem basically boils down to supply chain and COVID issues — they can’t get the parts they need to make printers. And so the company is looking for a buyer. We find this sad but understandable, and wish Rick and everyone at MakerGear the best of luck as they try to keep the lights on.

And finally, if there’s one thing Elon Musk is good at, it’s keeping his many businesses in the public eye. And so it is this week with SpaceX, which is recruiting Starlink customers to write nasty-grams to the Federal Communications Commission regarding Dish Network’s plan to gobble up a bunch of spectrum in the 12-GHz band for their 5G expansion plans. The 3,000 or so newly minted experts on spectrum allocation wrote to tell FCC commissioners how much Dish sucks, and how much they love and depend on Starlink. It looks like they may have a point — Starlink uses the lowest part of the Ku band (12 GHz – 18 GHz) for data downlinks to user terminals, along with big chunks of about half a dozen other bands. It’ll be interesting to watch this one play out.