3D graphics are made up of little more than very complicated math. With enough time, you could probably compute a ray-marched image by hand. Or, you could set up Excel to do it for you!

Ray marching is a form of ray tracing in which a ray is stepped forward by an amount based on how close it is to the nearest surface. By taking advantage of signed distance functions, such an algorithm can be quite effective, and in some instances much more efficient than traditional ray marching algorithms. But the fact that ray marching is so mathematically well-defined is probably why [ExcelTABLE] used it to make a ray-traced game in Excel.
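To make the stepping idea concrete, here is a minimal Python sketch of signed-distance ray marching (often called sphere tracing). It assumes a scene containing a single sphere. The names `sphere_sdf` and `ray_march` and all the constants are illustrative and do not come from the spreadsheet. Because the SDF value is the distance to the nearest surface, stepping exactly that far can never skip past a surface.

```python
import math

def sphere_sdf(p, center=(0.0, 0.0, 3.0), radius=1.0):
    """Signed distance from point p to a sphere: negative inside, positive outside."""
    dx, dy, dz = (p[i] - center[i] for i in range(3))
    return math.sqrt(dx * dx + dy * dy + dz * dz) - radius

def ray_march(origin, direction, sdf, max_steps=64, hit_eps=1e-4, max_dist=100.0):
    """Return the distance t along a unit-length direction to the first hit, or None.

    Each step advances by the SDF value, the largest step guaranteed
    not to overshoot the nearest surface.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(origin[i] + t * direction[i] for i in range(3))
        d = sdf(p)
        if d < hit_eps:
            return t  # close enough to a surface: count it as a hit
        t += d
        if t > max_dist:
            break  # the ray has left the scene
    return None  # no surface hit within the step budget

print(ray_march((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), sphere_sdf))  # 2.0
print(ray_march((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), sphere_sdf))  # None
```

The ray aimed straight at the sphere converges in two steps. The one pointing away keeps taking larger and larger steps until it passes `max_dist`.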

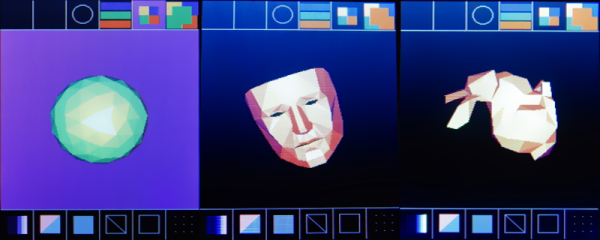

Under the hood, the ray marcher casts a ray out from the camera for each pixel and measures its distance to surfaces described by a set of three-dimensional functions. If that distance falls below a certain threshold, it counts as a surface hit. On a hit, a simple normal shader computes the pixel's brightness, which is then displayed through variable formatting of the spreadsheet's cells.
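The per-pixel loop described above can be sketched in Python. This is a hedged illustration under several assumptions: the camera sits at the origin looking down +z, the scene is one hypothetical sphere, and the light sits at the camera. The surface normal is estimated from the SDF gradient by central differences. The Lambertian `max(0, n·l)` brightness term and the character ramp standing in for cell formatting are our own choices, not necessarily what the spreadsheet computes.

```python
import math

def scene_sdf(x, y, z):
    # Hypothetical scene: a unit sphere centered 3 units in front of the camera.
    return math.sqrt(x * x + y * y + (z - 3.0) ** 2) - 1.0

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def estimate_normal(p, eps=1e-4):
    # The SDF gradient points away from the surface; approximate it with central differences.
    x, y, z = p
    return normalize((
        scene_sdf(x + eps, y, z) - scene_sdf(x - eps, y, z),
        scene_sdf(x, y + eps, z) - scene_sdf(x, y - eps, z),
        scene_sdf(x, y, z + eps) - scene_sdf(x, y, z - eps),
    ))

def shade_pixel(u, v, light=(0.0, 0.0, -1.0), hit_eps=1e-3, max_steps=64, max_dist=100.0):
    """Brightness in [0, 1] for screen coordinates (u, v); 0.0 when the ray misses.

    `light` is a unit vector from the surface toward the light (assumed at the camera).
    """
    direction = normalize((u, v, 1.0))  # camera at the origin, looking down +z
    t = 0.0
    for _ in range(max_steps):
        p = tuple(t * c for c in direction)
        d = scene_sdf(*p)
        if d < hit_eps:  # below the threshold: treat as a surface hit
            n = estimate_normal(p)
            # Surfaces facing the light are brighter; back-facing ones clamp to 0.
            return max(0.0, sum(a * b for a, b in zip(n, light)))
        t += d
        if t > max_dist:
            break
    return 0.0

def render(width=24, height=12):
    # Each character stands in for one spreadsheet cell whose formatting shows brightness.
    ramp = " .:-=+*#%@"
    rows = []
    for j in range(height):
        v = 1.0 - 2.0 * (j + 0.5) / height
        row = ""
        for i in range(width):
            u = 2.0 * (i + 0.5) / width - 1.0
            b = shade_pixel(u, v)
            row += ramp[min(len(ramp) - 1, int(b * len(ramp)))]
        rows.append(row)
    return rows

print("\n".join(render()))
```

Running it prints a small shaded disc that is brightest in the middle, where the surface faces the camera, and fades toward the silhouette. In a spreadsheet, each character position would instead be a cell whose numeric brightness drives its formatting.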

For those of you following along at home, the tutorial should work just fine in any modern spreadsheet software, including Google Sheets and LibreOffice Calc. It also provides a great explanation of the math and concepts behind ray marching, so it is worth a read regardless of your opinions on Excel's status as a so-called "programming language."

This is not the first time we have come across a ray tracing tutorial. If computer graphics are your thing, make sure to check out this ray tracing in a weekend tutorial next!

Thanks [Niklas] for the tip!

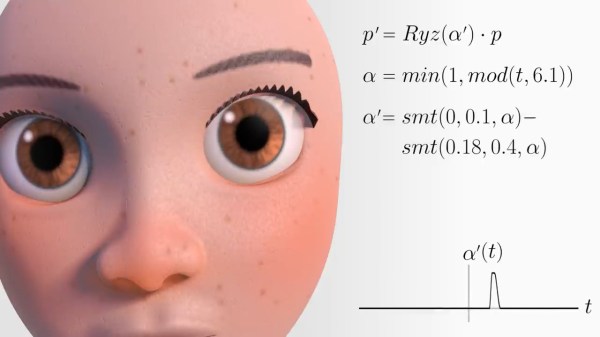
