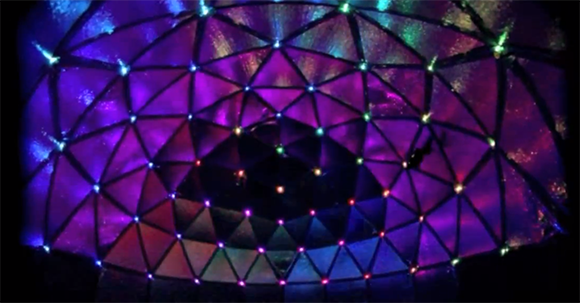

In 1979, [Nolan Bushnell] released Asteroids to the world. Now he’s playing the game again, only this time with the help of a laser projector and a Kinect that turns anyone sitting on a stool – in this case [Nolan] himself – into everyone’s favorite vector spaceship. It’s an attraction built for Steam Carnival, a project by [Brent Bushnell] and [Eric Gradman] that hopes to bring a modern electronic carnival to your town.

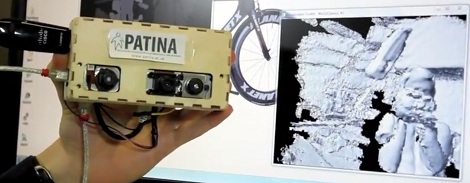

The reimagined Asteroids uses a laser projector to draw the asteroids and ship on the floor. A Kinect tracks the player sitting and rolling around on a stool, while a smartphone serves as the triangular spaceship’s ‘fire’ button. The game is played in a 150 square foot arena, and puts anyone in the cockpit of an asteroid mining triangle.

[Brent] and [Eric] hope to bring Steam Carnival to LA and San Francisco next spring, but if they exceed their funding goals, they might be convinced to bring their show east of the Mississippi. We’d love to try it out, hiding behind the score like in the original Asteroids and wasting several hours.
