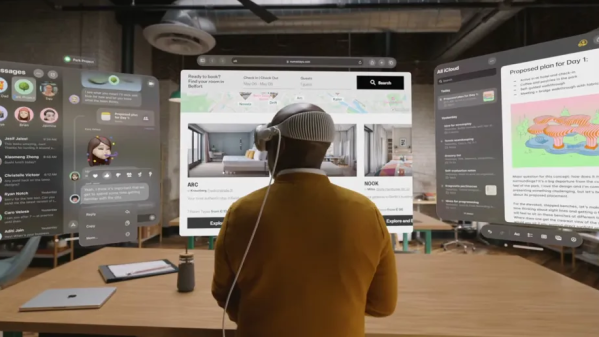

The displays inside the Apple Vision Pro have 3660 × 3200 pixels per eye, but veteran engineer [Karl Guttag]’s analysis of its subtly blurred optics reminds us that “resolution” doesn’t always translate to resolution, especially for things like near-eye displays.

The Apple Vision Pro lacks the usual visual artifacts (like the screen door effect) that result from viewing magnified pixelated screens through optics. But [Karl] shows how that effect is in fact being hidden in plain sight: Apple seems to have simply made everything just a wee bit blurry thanks to subtly out-of-focus lenses.

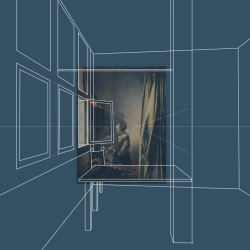

The thing is, this approach of intentionally de-focusing actually works very well for visual content like movies or pictures, where detail and pixel-to-pixel contrast are limited anyway.
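To get a feel for why a touch of blur can erase the pixel grid without hurting this kind of content much, here is a minimal 1D sketch. It is only an illustration under stated assumptions: the box blur is a crude stand-in for optical defocus (a real lens has a different point spread function), and the pixel pitch, gap size, and test signals are made-up values, not figures from [Karl]’s analysis.

```python
import math

def box_blur(signal, width):
    """Circular moving average: a crude, assumed stand-in for slight defocus."""
    n = len(signal)
    return [sum(signal[(i + k) % n] for k in range(width)) / width
            for i in range(n)]

def michelson_contrast(signal):
    """(max - min) / (max + min): 0 means flat, 1 means full modulation."""
    lo, hi = min(signal), max(signal)
    return (hi - lo) / (hi + lo)

PITCH = 4   # hypothetical samples per pixel: 3 lit, 1 dark gap
N = 256     # total samples (a whole number of pixels and content cycles)

# A uniformly lit screen seen through the pixel grid (the "screen door").
grid = [1.0 if i % PITCH < 3 else 0.0 for i in range(N)]

# Coarse image content: a smooth gradient repeating every 16 pixels.
coarse = [0.6 + 0.4 * math.sin(2 * math.pi * i / (16 * PITCH))
          for i in range(N)]

# Blur by exactly one pixel pitch, i.e. "a wee bit" out of focus.
grid_blurred = box_blur(grid, PITCH)
coarse_blurred = box_blur(coarse, PITCH)

grid_before = michelson_contrast(grid)
grid_after = michelson_contrast(grid_blurred)
coarse_before = michelson_contrast(coarse)
coarse_after = michelson_contrast(coarse_blurred)

print(f"pixel grid contrast:     {grid_before:.3f} -> {grid_after:.3f}")
print(f"coarse content contrast: {coarse_before:.3f} -> {coarse_after:.3f}")
```

In this toy model the grid's contrast collapses to zero, because every blur window covers exactly one lit-plus-gap period, while the slowly varying content keeps nearly all of its contrast. Detail at the scale of a single pixel would not fare as well, which is the other half of the trade.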

Clever loophole, or specification shenanigans? You be the judge, but it really does show that with things like VR headsets, everything is a trade-off: improving one thing typically worsens others. In fact, that’s one of the reasons why VR monitor replacements are a nontrivial challenge.