What’s this? Another fabulous creation from [Niklas Roy] and [Kati Hyyppä] that combines art and electronics with our zeitgeist and a lot of recycled bits and bobs? You got it. Their workshop in eastern Berlin used to be a retail shop and has a large display window as a result. This seems perfect for a pair of artists in a pandemic, because they can communicate with the community through the things they display in the window. Most recently, the window has housed this interactive cyborg baby, which we've chosen to call Cybaby.

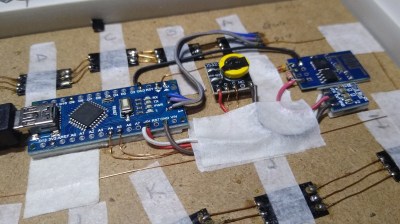

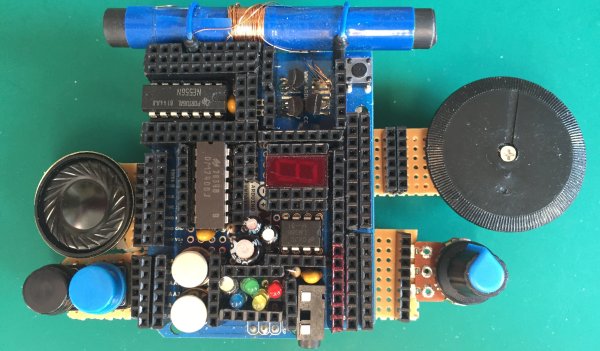

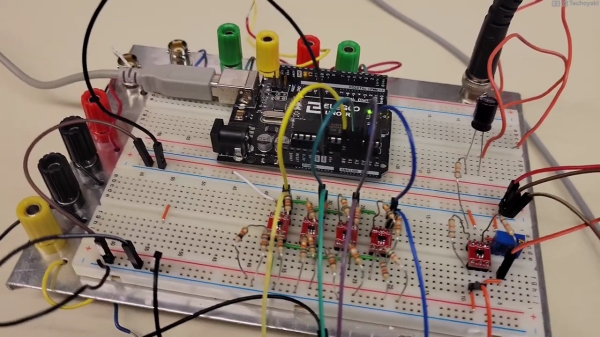

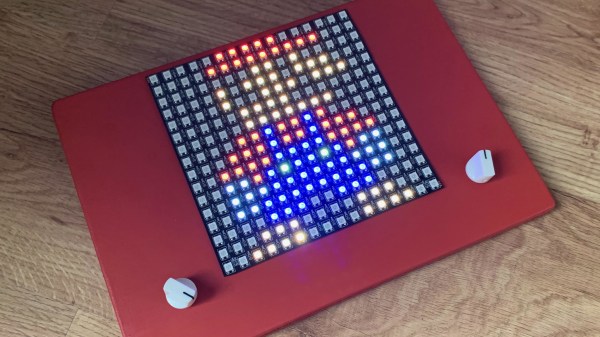

You might recognize Cybaby as one of the very hackable Robosapien robots, but with a baby doll head. (It also has a single red eye that really pulls its look together.) In the window, Cybaby comes alive and toddles around against a backdrop that grew and evolved over several weeks this spring and summer. Passersby could join the network and control Cybaby from outside with their smartphones: they could make it walk around, press various buttons that change its environment, and trigger a few sensors here and there. Robosapien has been around for about 20 years, so there is already Arduino code out there that essentially simulates its infrared remote-control signals. [Niklas] and [Kati] used a NodeMCU (ESP-12E) to send pulses directly to the IR input of the robot.
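For the curious, the pulse train involved is simple enough to sketch. The Python below is a minimal sketch, assuming the frame format commonly described in community Arduino sketches for the Robosapien: a timing unit of roughly 1/1200 s, a start pulse that holds the line low for 8 units, then eight command bits sent most significant bit first, where a long high period marks a 1 and a short one marks a 0. Those timings and the function name `robosapien_pulses` are our assumptions, not taken from [Niklas] and [Kati]'s code, and the sketch only computes the pulses rather than driving a pin.

```python
# Minimal sketch of a Robosapien-style IR command frame, assuming the timing
# commonly described in community Arduino sketches (not measured, not the
# artists' code). It only computes (level, duration) pairs.
from typing import List, Tuple

UNIT_US = 833  # assumed timing unit, roughly 1/1200 s, in microseconds


def robosapien_pulses(command: int) -> List[Tuple[int, int]]:
    """Return (level, duration_us) pairs for one 8-bit command frame.

    Level 0 means the line is pulled low; level 1 means it is high.
    After the last pair, the caller should leave the line idle high.
    """
    if not 0 <= command <= 0xFF:
        raise ValueError("command must fit in 8 bits")
    pulses = [(0, 8 * UNIT_US)]  # start pulse: hold the line low for 8 units
    for bit in range(7, -1, -1):  # most significant bit first
        is_one = (command >> bit) & 1
        # assumed encoding: 4 units high for a 1, 1 unit high for a 0
        pulses.append((1, (4 if is_one else 1) * UNIT_US))
        pulses.append((0, UNIT_US))  # every bit ends with 1 unit low
    return pulses


if __name__ == "__main__":
    # 0x86 is an arbitrary example value, not a documented command code
    for level, duration in robosapien_pulses(0x86):
        print(level, duration)
```

On a NodeMCU, each pair would become a GPIO write followed by a microsecond delay, with the pin wired straight to the robot's IR input so no modulated carrier is needed.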

Back on the zany zeitgeist front, there’s a hair salon, a convenience store, and a nightclub for dancing that requires a successful trip through the testing center first (naturally). Oh, and there’s a lab next door to the nightclub that can’t be accessed by Cybaby no matter what it tries or how it cries. Check it out after the break.

There’s a dearth of Robosapien posts for some reason, so here’s what [Niklas] and [Kati] had in their window before the World of Cybaby — a really cool pen plotter that prints out messages sent by people walking by.

Continue reading “Pandemic Gives Passersby A Window On Cyborg Control”