Making a digital camera is a project that appears easy enough, but its complexity grows with how far a designer is prepared to go. At its simplest, a Raspberry Pi and camera module can be stuck in a 3D-printed case, but then the difficult work of sorting out the drivers and electronics has already been done for you.

At the other end of the scale there’s [Wenting Zhang]’s open source mirrorless digital camera project, in which the design and construction of a full-frame CCD digital camera has been taken back to first principles. To put the scale of this task in perspective, when a camera company does it, it employs large teams of engineers. It’s taken a few years, and the software perhaps isn’t as polished as that of your Sony or Canon, but the fact it’s been done at all is extremely impressive.

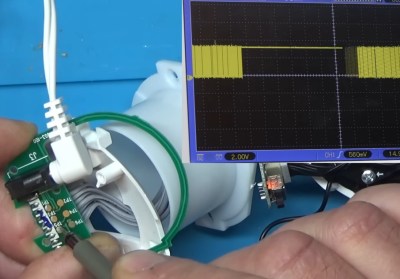

Inside is a Kodak full-frame sensor behind the Sony E-mount lens, with all the complex CCD timing and acquisition circuitry implemented from scratch. The brains of the operation are a Xilinx Zynq ARM-and-FPGA, sitting in a stack of boards alongside a power board and the CCD board. The controls and battery are in a grip, and a large display is on the back of the unit.

We featured an earlier version of this project last year, and this version is much further developed, with something like the ergonomics, controls, and interface you would expect from a modern consumer camera. The screen update is still a little slow and there are doubtless many tweaks to come, but this really feels close to being a camera you’d want to try. There’s an assembly video below the break; feast your eyes on it.
