The old gen 1 Kinect has seen a fair bit of use for making 3D scans of real-world scenes. Now that Xbox 360 Kinects are winding up at yard sales and your local Goodwill, you might even have a chance to pick one up for pocket change. Until now, though, scanning objects in 3D has only been practical in a studio or workshop setting; a portable scanner meant lugging around a computer and a power supply, and that’s not really something you can fit in a backpack.

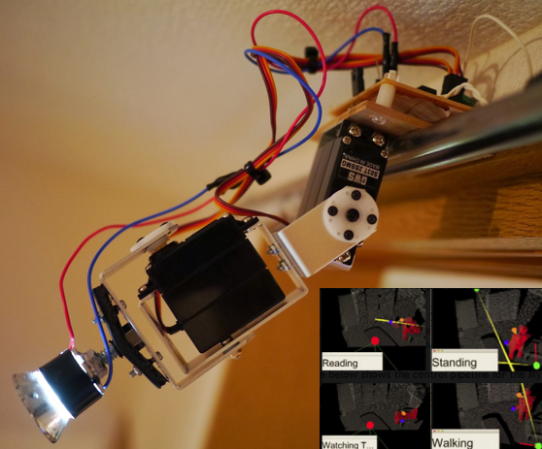

Now, finally, that may be changing. [xxorde] can now get depth data from a Kinect sensor with a Raspberry Pi, and with just about every other ARM board out there as well. It’s a kernel driver that’s small, fast, and does just one thing: it turns the Kinect into a webcam that displays depth data.

Of course, a portable Kinect 3D scanner has been done before, but that was with an absurdly expensive Gumstix board. With a Raspi or BeagleBone Black, this driver is the beginning of a very cheap 3D scanner that would be much more useful than the current commercial or DIY desktop scanners.