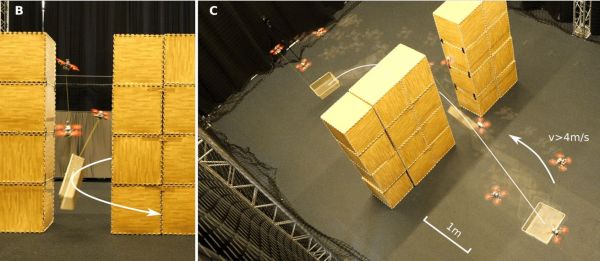

Cycloidal drives have an entrancing motion, as well as a few other advantages: high torque and efficiency, low backlash, and compactness among them. However, much as [Sergei Mishin] likes them, it can be difficult to 3D-print high-torque drives, and it’s sometimes inconvenient to have the input and output shafts in-line. So when he came across a video of an industrial three-ring reducing drive, which works on a similar principle, he naturally designed his own 3D-printable version.

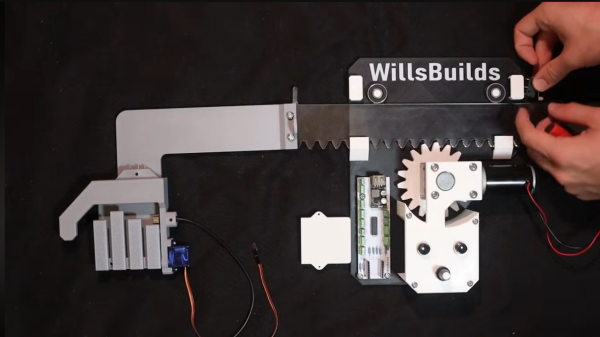

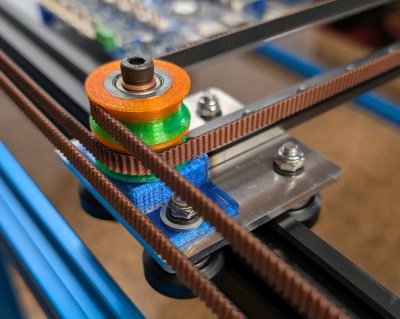

The main issue with 3D-printing a normal cycloidal drive lies in the eccentrically mounted cycloidal plate: the pins that run through its holes need bearings to keep them from quickly wearing out the plastic plate at high torque. The need for those bearings puts some unfortunate constraints on the size of the drive. A three-ring drive also uses an eccentric drive shaft to make cycloidal plates oscillate around a set of pins, but the input and output shafts are offset so that the plates encompass both the pins and the eccentric driveshaft. This simplifies construction significantly, and also makes it possible to add more than one input or output shaft.

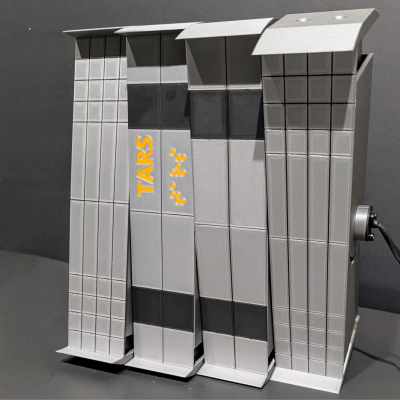

As the name indicates, these drives use three plates, each 120 degrees out of phase with the others. [Sergei] tried a design with only two plates 180 degrees out of phase, but it jammed easily, since there was a point at which the plates could rotate just as easily in either direction. Unlike standard cycloidal gears, these plates use epicycloidal rather than hypocycloidal profiles, since they move around the outside of the pins. [Sergei] helpfully wrote a Python script that can generate profiles, animate them, and export to DXF. The final performance of these drives will depend on their design parameters and printing material, but [Sergei] tested a 20:1 drive and reached a respectable 9.8 newton-meters before it started skipping.
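
For readers curious what an epicycloidal profile looks like numerically, here is a minimal sketch of the textbook epicycloid, the curve traced by a point on a circle rolling around the outside of a fixed circle. It is not [Sergei]'s script and makes no attempt to reproduce it: the function name `epicycloid_points` and its parameters are made up for illustration, the lobe count of 20 is arbitrary rather than a claim about how lobe count relates to the 20:1 ratio, and details such as pin diameter and eccentricity are ignored.

```python
import math


def epicycloid_points(lobes, rolling_radius, samples=360):
    """Return (x, y) points on a plain epicycloid with `lobes` cusps.

    Hypothetical helper for illustration only. A circle of radius
    `rolling_radius` rolls around the outside of a fixed circle of
    radius `lobes * rolling_radius`.
    """
    fixed_radius = lobes * rolling_radius
    centre_distance = fixed_radius + rolling_radius  # path of the rolling circle's centre
    points = []
    for i in range(samples):
        t = 2 * math.pi * i / samples
        # Standard parametric form; (R + r) / r simplifies to lobes + 1.
        x = centre_distance * math.cos(t) - rolling_radius * math.cos((lobes + 1) * t)
        y = centre_distance * math.sin(t) - rolling_radius * math.sin((lobes + 1) * t)
        points.append((x, y))
    return points


# Assumed example values: 20 lobes, unit rolling radius.
profile = epicycloid_points(lobes=20, rolling_radius=1.0)
```

The resulting list of points could be passed to a plotting or DXF library of your choice. Every point lies between the fixed circle's radius and that radius plus twice the rolling radius, which is a quick sanity check on the output.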

Even without this design’s advantages, it’s still possible to 3D-print a cycloidal drive, its cousin the harmonic drive, or even more exotic drive configurations.