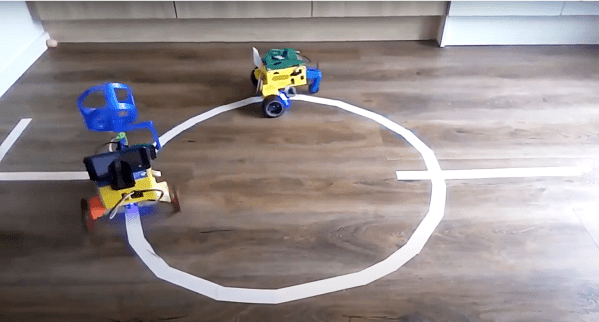

For those wishing to explore robot autonomy, there’s no better way than to learn by doing. [Greg] was in that camp, so he decided to build an autonomous rover to roam his house, and learned plenty along the way.

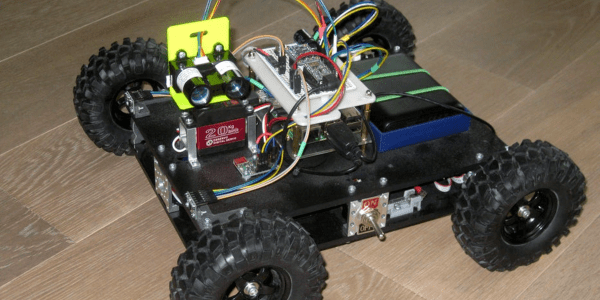

[Greg]’s aim with the project was to build a robot capable of navigating his home without external assistance. A Raspberry Pi 3 was put in charge of the job and kitted out with a LIDAR for mapping. Pololu Roboclaw motor controllers let the Raspberry Pi drive each of the robot’s wheel motors individually, giving the four-wheeled bot skid steering capability.
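For readers unfamiliar with skid steering, the idea is that the robot turns by running its left and right wheels at different speeds. The snippet below is a minimal sketch of that mixing step, not [Greg]’s code: the function name `skid_steer_mix`, the 0.3 m track width, and the speed limit are all assumptions made for illustration.

```python
def skid_steer_mix(linear, angular, track_width=0.3, max_speed=1.0):
    """Convert a body velocity command into (left, right) wheel speeds.

    linear      -- forward speed in m/s
    angular     -- turn rate in rad/s (positive = counter-clockwise)
    track_width -- distance between left and right wheels in metres (assumed)
    max_speed   -- largest wheel speed the motors can deliver (assumed)
    """
    # Each side moves at the body speed plus or minus the turning component.
    left = linear - angular * track_width / 2.0
    right = linear + angular * track_width / 2.0

    # If either side exceeds the limit, scale both so the turn ratio is kept.
    peak = max(abs(left), abs(right))
    if peak > max_speed:
        scale = max_speed / peak
        left *= scale
        right *= scale

    return left, right
```

On a four-wheeled bot, both wheels on a side would receive the same speed. Driving straight gives equal values on both sides, while a pure rotation command gives equal and opposite values, spinning the robot in place.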

[Greg] goes into immense detail in the project’s writeup, exploring the code and concepts behind its autonomous abilities. Creating a robot that can navigate using LIDAR is no easy task, but [Greg] does a great job of explaining how it all works, and why.

It’s not the first autonomous rover we’ve seen here, and we’re sure it won’t be the last. If you’ve got your own build coming together in the lab, be sure to let us know. Video after the break.
