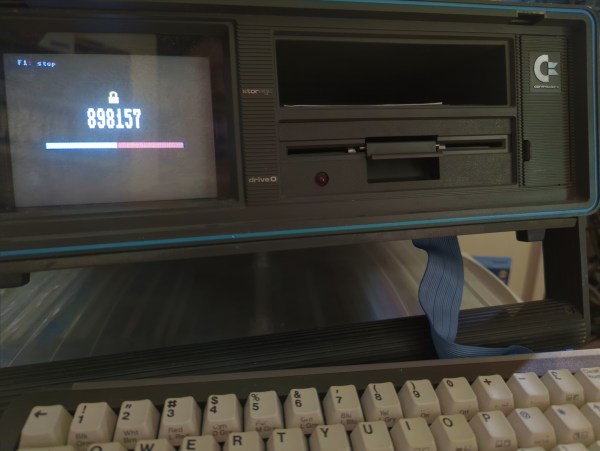

If you’ve used a corporate VPN or an online-banking system in the past fifteen years or so, chances are you’ve got a few of those little authenticator key fobs lying around, still displaying a new code every 30 seconds. Today such one-time codes are typically sent to you by text message or generated by a dedicated smartphone app, which is convenient but a bit boring. If you miss having a dedicated piece of hardware for your login codes, then we’ve got good news for you: [Cameron Kaiser] has managed to turn a Commodore SX-64 into a two-factor authenticator. Unlike a key fob, that’s one gadget you’re not likely to lose, and any thief would probably need to spend quite some time figuring out how to operate it.

Continue reading “Generating Two-Factor Authentication Codes With A Commodore 64”
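For readers curious how a code that changes every 30 seconds can be computed, here is a minimal sketch of the time-based one-time password scheme from RFC 6238. It assumes the authenticator in question uses standard TOTP with HMAC-SHA1, which the excerpt above does not confirm; the function name `totp` and its parameters are illustrative only.

```python
import hashlib
import hmac
import struct
import time


def totp(secret: bytes, at=None, step=30, digits=6) -> str:
    """Return the RFC 6238 TOTP code for `secret` at Unix time `at`."""
    if at is None:
        at = time.time()
    # The moving factor is the number of whole time steps since the epoch.
    counter = int(at) // step
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low nibble of the last byte picks
    # a 4-byte window, and the top bit of that window is masked off.
    offset = mac[-1] & 0x0F
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


if __name__ == "__main__":
    # Shared secret from the RFC 6238 test vectors, not a real credential.
    print(totp(b"12345678901234567890"))
```

Both sides only need the shared secret and a roughly synchronized clock, which is why the same calculation fits on a key fob, a phone, or a vintage computer.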

This Week In Security: Microsoft Patches, Typosquatting Continues, And Code Signing For All

The pair of Exchange vulnerabilities we’ve been tracking have finally been patched, along with another handful of fixes this Patch Tuesday, a total of six being 0-day exploits. The third vulnerability was also a 0-day, discovered by the Google Threat Analysis Group. This one resulted in arbitrary code execution when a Windows client connected to a malicious server.

A pair of privilege escalation flaws were fixed, one being yet another print spooler issue, and the other part of a key handling service. The final zero-day fixed was a bypass of the mark-of-the-web, the tag that gets added to file metadata to indicate it’s a download from the internet. Before the fix, malware delivered inside an ISO, or marked read-only inside a zip file, didn’t trigger the warning when executed.
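To make the mark-of-the-web a little more concrete: on NTFS the tag is stored in an alternate data stream named `Zone.Identifier`, formatted like a small INI file. The sketch below parses that stream's contents and, on Windows, reads it for a given path. The helper names `parse_zone_id` and `read_mark_of_the_web` are made up for illustration, and this is only a way to inspect the tag, not a description of the patched bug.

```python
import configparser

# Standard Windows security zone identifiers.
ZONE_NAMES = {
    0: "Local machine",
    1: "Local intranet",
    2: "Trusted sites",
    3: "Internet",
    4: "Restricted sites",
}


def parse_zone_id(stream_text):
    """Return the ZoneId from Zone.Identifier contents, or None if absent."""
    # Interpolation is disabled because URLs in the stream may contain '%'.
    parser = configparser.ConfigParser(interpolation=None, delimiters=("=",))
    try:
        parser.read_string(stream_text)
        if not parser.has_option("ZoneTransfer", "ZoneId"):
            return None
        return parser.getint("ZoneTransfer", "ZoneId")
    except (configparser.Error, ValueError):
        return None


def read_mark_of_the_web(path):
    """Read the tag for `path`; only meaningful on Windows with NTFS."""
    try:
        with open(path + ":Zone.Identifier", encoding="utf-8",
                  errors="replace") as stream:
            return parse_zone_id(stream.read())
    except OSError:
        return None  # No stream: the file carries no mark.


if __name__ == "__main__":
    sample = "[ZoneTransfer]\nZoneId=3\nHostUrl=https://example.com/file.iso\n"
    zone = parse_zone_id(sample)
    print(zone, ZONE_NAMES.get(zone, "unknown"))
```

A file with no `Zone.Identifier` stream is treated as local, which is why a container format that drops the stream on extraction also drops the warning.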

Will Typosquat For Bitcoin

A trend that doesn’t show signs of slowing down is typosquatting, the simple malware distribution strategy of uploading tainted packages using misspelled variations of legitimate package names. The latest such scheme, discovered by researchers at Phylum, delivered a crypto-stealer in Python packages. These packages were hosted on PyPI, under names like baeutifulsoup4 and cryptograpyh. The packages install a JavaScript file that runs in the background of the browser, and monitors for a cryptocurrency address on the clipboard. When detected, the intended address is swapped for an attacker-controlled address.

Continue reading “This Week In Security: Microsoft Patches, Typosquatting Continues, And Code Signing For All”
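On the defensive side, names like these are easy to flag mechanically, since each is a single swapped pair of letters away from the real thing. The following is a rough sketch, not the method Phylum used: it computes the optimal string alignment distance (edits plus adjacent transpositions) and reports known package names that a candidate sits suspiciously close to. The function names and the short `known` list are assumptions for illustration.

```python
def osa_distance(a: str, b: str) -> int:
    """Optimal string alignment distance: insert, delete, substitute,
    or swap two adjacent characters, each at cost 1."""
    prev2 = None
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i] + [0] * len(b)
        for j, cb in enumerate(b, 1):
            cost = 0 if ca == cb else 1
            cur[j] = min(prev[j] + 1,         # deletion
                         cur[j - 1] + 1,      # insertion
                         prev[j - 1] + cost)  # substitution or match
            # Adjacent transposition, e.g. "ae" <-> "ea".
            if i > 1 and j > 1 and ca == b[j - 2] and a[i - 2] == cb:
                cur[j] = min(cur[j], prev2[j - 2] + 1)
        prev2, prev = prev, cur
    return prev[len(b)]


def find_lookalikes(name, known, max_distance=1):
    """Return known names that `name` nearly, but not exactly, matches."""
    return [k for k in known if 0 < osa_distance(name, k) <= max_distance]


if __name__ == "__main__":
    known = ["beautifulsoup4", "cryptography", "requests"]
    for candidate in ["baeutifulsoup4", "cryptograpyh", "requests"]:
        print(candidate, "->", find_lookalikes(candidate, known))
```

An exact match returns an empty list, so the check only fires on near misses. A real deployment would compare against a much larger list of popular package names.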

This Week In Security: OpenSSL Fizzle, Java XML, And Nothing As It Seems

The security world held its collective breath early this week for the big OpenSSL vulnerability announcement. Turns out it’s two separate issues, both related to punycode handling, and they’ve been downgraded to high severity instead of critical. Punycode, by the way, is the system for using non-ASCII Unicode characters in domain names. The first vulnerability, CVE-2022-3602, is a buffer overflow that writes four arbitrary bytes to the stack. Notably, the vulnerable code is only run after a certificate’s chain is verified. A malicious certificate would need to be either properly signed by a Certificate Authority, or manually trusted without a valid signature.
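For anyone who hasn’t met punycode before, Python’s standard library ships codecs for it, which makes for a quick illustration. This sketch only shows what the encoding looks like; it has nothing to do with OpenSSL’s own decoder, and the helper names are illustrative.

```python
def to_punycode(label: str) -> str:
    """Encode a single label with the raw punycode codec (RFC 3492)."""
    return label.encode("punycode").decode("ascii")


def to_idna(domain: str) -> str:
    """Encode a whole domain, adding the 'xn--' prefix where needed."""
    return domain.encode("idna").decode("ascii")


if __name__ == "__main__":
    name = "m\u00fcnchen"  # "münchen"
    print(to_punycode(name))      # ASCII letters first, then the encoded rest
    print(to_idna(name + ".de"))  # what actually appears in DNS
    print(b"mnchen-3ya".decode("punycode"))
```

The ASCII characters are copied through unchanged, and everything after the final hyphen encodes which non-ASCII characters to insert and where. That insertion step is the part a decoder has to get right.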

A couple of sources have worked out the details of this vulnerability. It’s an off-by-one error in a loop, where the buffer length is checked earlier in the loop than the length variable is incremented. Because of the logic slip, the loop can potentially run one too many times. That loop processes the Unicode characters, encoded at the end of the punycode string, and injects them in the proper place, sliding the rest of the string over in memory as a result. If the total output length is 513 characters, that’s a single character overflow. Each decoded character is stored as a four-byte value, so there’s your four-byte overflow.

Continue reading “This Week In Security: OpenSSL Fizzle, Java XML, And Nothing As It Seems”
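The shape of that mistake is easy to reproduce in a toy model. The sketch below is not OpenSSL’s code. It assumes a 512-slot output buffer with four bytes per slot, in line with the 513-character figure above, and compares a bounds check written with `>` against one written with `>=`. All names are hypothetical.

```python
CAPACITY = 512       # assumed number of output slots
BYTES_PER_SLOT = 4   # each decoded character occupies four bytes


def highest_slot_written(n_chars, strict_check, capacity=CAPACITY):
    """Simulate writing n_chars characters; return the last slot index used.

    strict_check=False models the buggy comparison (written > capacity),
    strict_check=True models the corrected one (written >= capacity).
    """
    written = 0
    highest = -1
    for _ in range(n_chars):
        full = written >= capacity if strict_check else written > capacity
        if full:
            raise OverflowError("output buffer full")
        highest = written  # slot this iteration writes to
        written += 1
    return highest


def overflow_bytes(n_chars, strict_check):
    """Bytes written past the end of the buffer, 0 if none."""
    last = highest_slot_written(n_chars, strict_check)
    return max(0, last - (CAPACITY - 1)) * BYTES_PER_SLOT


if __name__ == "__main__":
    print(overflow_bytes(512, strict_check=False))  # fits exactly
    print(overflow_bytes(513, strict_check=False))  # one slot too far
```

In this model the loose check lets exactly one extra slot through before it finally trips, which matches the single-character, four-byte overflow described above. The strict check rejects the 513th character outright.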

Make Your Pi Moonlight As A Security Camera

A decade ago, I was learning Linux through building projects for my own needs. One of the projects was a DIY CCTV system based on a Linux box – specifically, a user-friendly all-in-one package for someone willing to pay for it. I stumbled upon Zoneminder, and those in the know can already tell what happened – I’ll put it this way, I spent days trying to make it work, and my Linux skills at the time were not nearly enough. Cool software like Motion was available back then, but I wasn’t up to the task of rolling an entire system around it. That said, it wouldn’t be impossible, now, would it?

Five years later, I joined a hackerspace, and eventually found out that its CCTV cameras, while being quite visually prominent, had stopped functioning a long time ago. At that point, I was in a position to do something about it, and I built an entire CCTV network around a software package called MotionEye. There’s a lot of value in having working CCTV cameras at a hackerspace – not only does a functioning system solve the “who made the mess that nobody admits to” problem, over the years it also helped us with things like locating safety interlock keys to a lasercutter that were removed during a reorganization, with their temporary location promptly forgotten.

Being able to use MotionEye to quickly create security cameras became quite handy very soon – when I needed it, I could make a simple camera to monitor my bicycle, verify that my neighbours didn’t forget to feed my pets as promised while I was away, and in a certain situation, I could even ensure my own and others’ physical safety with its help. How do you build a useful always-recording camera network in your house, hackerspace or other property? Here’s a simple and powerful software package I’d like to show you today, and it’s called MotionEye.

Continue reading “Make Your Pi Moonlight As A Security Camera”

An Easy-To-Make Pi-Powered Pocket Password Pal

Sometimes we see a project whose creator clearly wants to make an idea accessible to newcomers, and today’s project is one of these cases. The BYOPM – Bring Your Own Password Manager, a project by [novamostra] – is a Pi Zero-powered device to carry your passwords around in. This project takes the now well-explored USB gadget feature of the Pi Zero, integrates it into a Bitwarden-backed password management toolkit to make a local-network-connected password store, and comes with a tutorial simple enough that anybody can follow it to build their own.

For the physical part, assembly instructions are short and sweet – you only need to solder a single button to fulfill the hardware requirements, and there’s a thin 3D-printable case if you’d like to make the Pi Zero way more pocket-friendly, too! For the software part, the instructions walk you step-by-step through setting up an SD card with a Raspbian image, then installing all the tools and configuring a system with networking exposed over the USB gadget interface. From there, you set up a Bitwarden instance, and optionally learn to connect it to the corresponding browser extensions. Since the device’s goal is password management and storage, it also reminds you to do backups, pointing out specifically the files you’ll want to keep track of.

Overall, such a device helps you carry your passwords with you wherever you need them, you can build this even if your Raspberry Pi skills are minimal so far, and it’s guaranteed to provide you with a feeling that only a self-built pocket gadget with a clear purpose can give you! Looking for something less reliant on networking and more down-to-commandline? Here’s a buttons-and-screen-enabled Pi Zero gadget that uses pass.

This Week In Security: IOS, OpenSSL, And SQLite

Earlier this week, a new release of iOS rolled out, fixing a handful of security issues. One in particular noted it “may have been actively exploited”, and was reported anonymously. This usually means that a vulnerability was discovered in the wild, being used as part of an active campaign. The anonymous credit is interesting, too. An educated guess says that this was a rather targeted attack, and the security company that found it doesn’t want to give away too much information.

Also of interest is the GPU-related fix, credited to [Asahi Lina], the VTuber doing work on porting Linux to the Apple M1/M2 platform, and particularly focusing on GPU drivers. She’s an interesting case, and doing some very impressive work. There does remain the unanswered question of how the Linux kernel will deal with a pull request coming from a pseudonym. Regardless, get your iOS devices updated.

Continue reading “This Week In Security: IOS, OpenSSL, And SQLite”

The WiFi Pumpkin Is The WiFi Pineapple We Have At Home

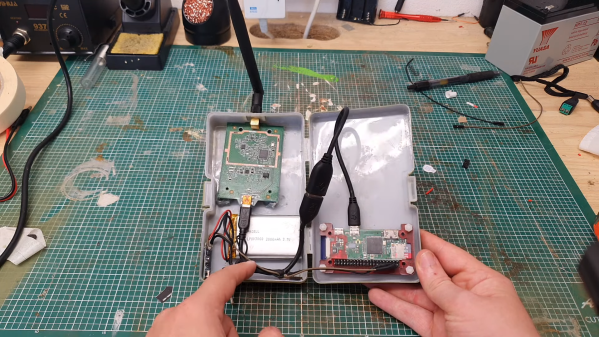

While networking was once all about Cat 5 cables, hubs, and routers, most of us now connect wirelessly. Just like wired networks, wireless networks need auditing, and [Brains933] decided to whip up a tool for just that, nicknaming it the PumpkinPI_3.

The build is inspired by the WiFi Pineapple, which is a popular commercial pentesting tool. It runs the WiFi Pumpkin framework which allows the user to run a variety of attacks on a given wireless network. Among other features, it can act as a rogue access point, run man-in-the-middle attacks, and even spoof Windows updates if so desired.

In this case, [Brains933] grabbed a Raspberry Pi Zero W to run the framework. It was stuffed in a case with an Alfa Network AWUS036NHA wireless card, chosen for its ability to run in monitor mode, a capability required by some of the more advanced tools. It runs on a rechargeable LiPo battery for portability, and can be fitted with a small screen for ease of operation.

It should prove to be a useful tool for investigating wireless security on the go. Alternatively, you can go even leaner, running attacks off an ESP32.

Continue reading “The WiFi Pumpkin Is The WiFi Pineapple We Have At Home”