Often dismissed as ‘merely a styling language’, CSS contains a wealth of functions built right into the browser’s rendering engine that can handle everything from animations to typography and even mathematical operations, with more added each year.

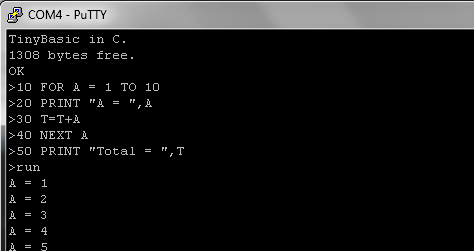

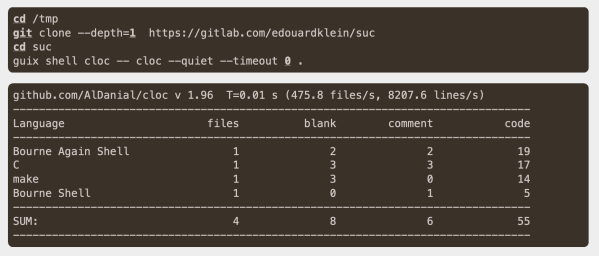

In a tutorial, [Bramus] takes us through using the trigonometric functions in CSS. These are supported in all major browsers as of Chrome 111, Firefox 108 and Safari 15.4. Beyond the trigonometric functions, further mathematical functions are available, many of which have been around for years, such as calc(), min() and max().
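
For a feel of those older math functions, here is a minimal sketch. The `.card` selector and the specific values are made up for illustration and do not come from the tutorial.

```css
/* Hypothetical selector and values, for illustration only. */
.card {
  /* calc(): combine different units in a single expression */
  padding: calc(1rem + 2vw);

  /* min(): use the smaller value, so the card is at most 60ch wide
     but still fits inside a narrow container with a 1rem margin per side */
  width: min(60ch, 100% - 2rem);

  /* max(): use the larger value, so the text never drops below 1rem */
  font-size: max(1rem, 1.2vw);
}
```

Note that min() and max() accept arithmetic directly, so the `100% - 2rem` above does not need its own calc() wrapper.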

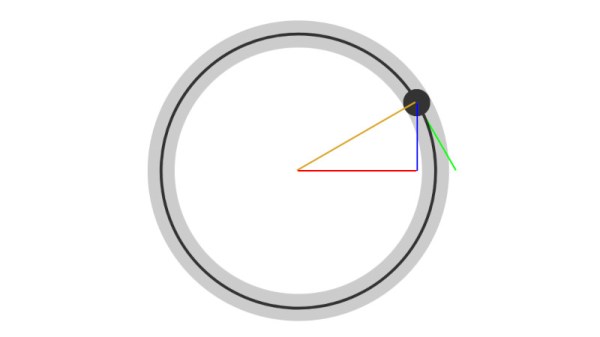

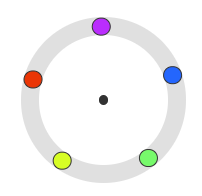

Unlike their JavaScript counterparts in the Math object, which take radians only, CSS functions such as sin() and cos() accept the argument either as an angle with a unit, such as degrees, or as a plain number that is interpreted as radians. Perhaps the nicest thing about having this functionality in CSS is that it removes the need to add JavaScript for many simple things on a webpage, such as animations, translations and the calculation of offsets and positions. Most impressive is the example by [Ana Tudor], who created an animated Möbius strip using cos() and sin() and a handful of other CSS functions.
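
As a rough sketch of the offsets-and-positions use case, the following places a few items on a circle around a central point. The class names `.orbit` and `.item` and the custom properties `--radius` and `--angle` are assumptions chosen for this example, not names taken from the tutorial.

```css
/* Hypothetical class names and custom properties, for illustration only. */
.orbit {
  position: relative;
  width: 12rem;
  height: 12rem;
}

.orbit > .item {
  --radius: 5rem;   /* distance from the central point */
  --angle: 0deg;    /* overridden per item below */

  /* Start at the midpoint of the container */
  position: absolute;
  top: 50%;
  left: 50%;

  /* -50% centres the item on that midpoint; the cos()/sin() terms
     then push it out along the circle. The y axis points down in CSS,
     so increasing angles run clockwise on screen. */
  transform: translate(
    calc(-50% + cos(var(--angle)) * var(--radius)),
    calc(-50% + sin(var(--angle)) * var(--radius))
  );
}

/* Angles can be given in any CSS angle unit */
.orbit > .item:nth-child(1) { --angle: 0deg; }
.orbit > .item:nth-child(2) { --angle: 90deg; }
.orbit > .item:nth-child(3) { --angle: 0.5turn; }
.orbit > .item:nth-child(4) { --angle: 4.712rad; } /* roughly 270deg */
```

Changing `--radius` on the container or on individual items resizes the circle without touching the positioning rule itself.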

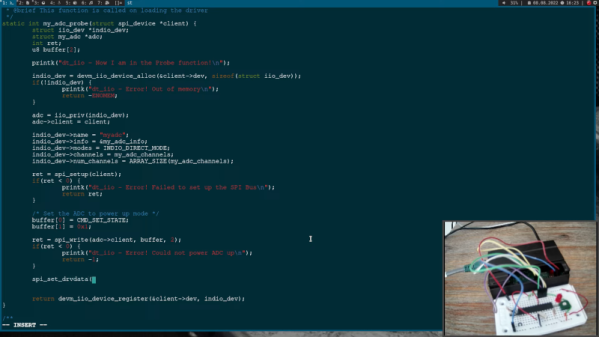

None of this is likely to surprise anyone who is somewhat familiar with the depths of CSS, especially after it has been more or less proven to be a Turing-complete programming language. Using this power for visual elements does, however, make a lot of sense, considering that CSS was always intended to help with styling and formatting the raw HTML.

Do you use these advanced CSS features already, or is it something you might consider using in the future, possibly over JavaScript versions? Feel free to share your thoughts and experiences in the comments.

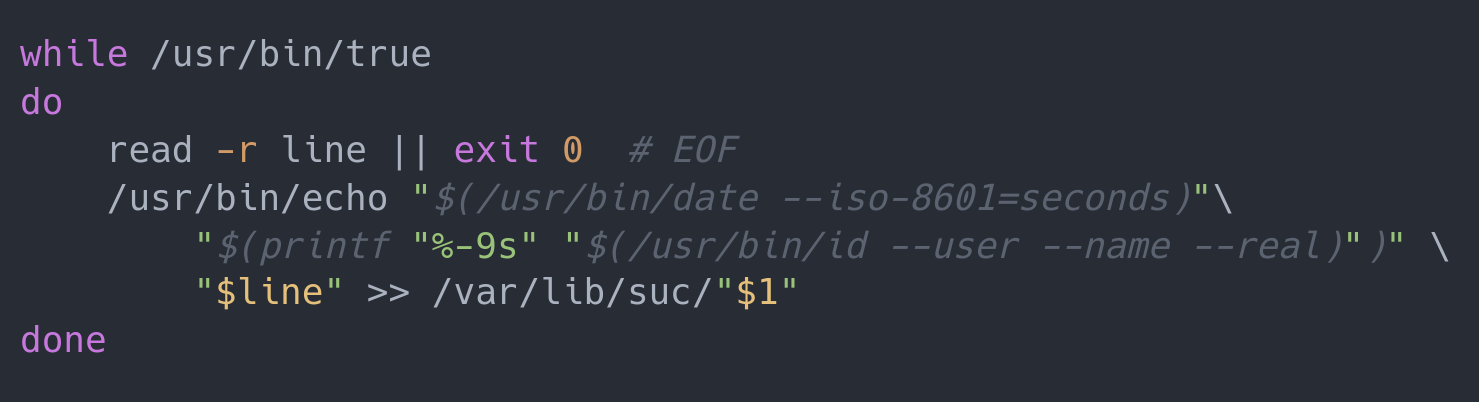

(Heading: Code to move items on a circular path around a central point in CSS.)