Recently, [Jabrils] set out to accomplish a difficult task: porting a quantum-inspired algorithm to run on a (simulated) quantum computer. Algorithms are often inspired by all sorts of natural phenomena. For example, one approach to the traveling salesman problem models ants and their pheromone trails. Another famous example is neural nets, which are inspired by the neurons in your brain. However, attempting to run a machine learning algorithm on your own neurons, even with the assistance of pen and paper, would be a nearly impossible exercise.

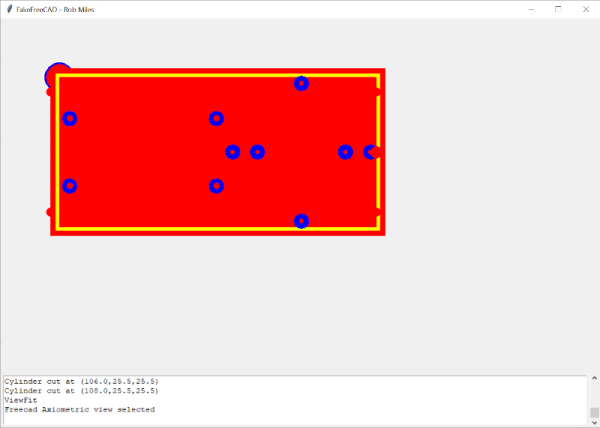

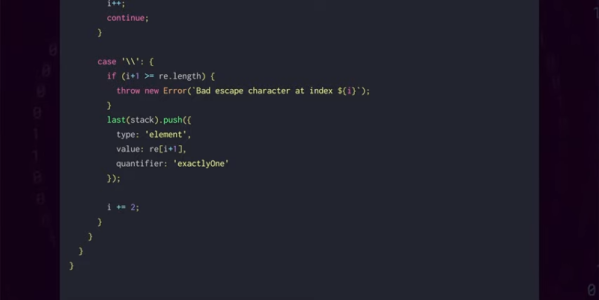

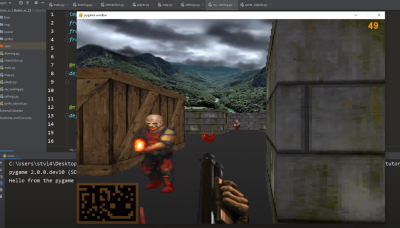

The quantum-inspired algorithm in question is known as wave function collapse. In a nutshell, you have a cube of voxels, a graph of nodes, or simply a grid of tiles, as well as a list of detailed rules that determine which states a node or tile may take. At the start of the algorithm, every node is considered to be in a state of superposition, which means it is treated as being in every possible state at once. Working from the list of rules, the algorithm then begins to collapse those states. Unlike on a quantum computer, superposition is not an intrinsic part of a classical computer, so the solving must be done iteratively. To reduce conflicts and contradictions further down the line, the nodes with the least entropy (the smallest number of possible states) are solved first. At the start, every node has the same entropy, so a state is assigned at random, and the change propagates through the system by ruling out states in neighboring nodes. This process continues until the wave function has collapsed to a stable state or a contradiction is reached.
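To make the loop concrete, here is a minimal Python sketch of the classical, iterative version on a 2D grid of tiles. It is not [Jabrils]'s code: the tile names, the `RULES` table, and the helper functions `neighbors`, `collapse`, and `generate` are all made up for illustration, and the rules are direction-agnostic to keep things short.

```python
import random

# Hypothetical tile set (not from the article): each tile maps to the set of
# tiles that are allowed to sit next to it, in any of the four directions.
RULES = {
    "sea": {"sea", "coast"},
    "coast": {"sea", "coast", "land"},
    "land": {"coast", "land"},
}

def neighbors(x, y, w, h):
    """Yield the coordinates of the in-bounds 4-connected neighbors."""
    for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nx, ny = x + dx, y + dy
        if 0 <= nx < w and 0 <= ny < h:
            yield nx, ny

def collapse(w, h, rules, rng):
    """Run one attempt; return {(x, y): tile} or None on a contradiction."""
    # Every cell starts in "superposition": all states are still possible.
    grid = {(x, y): set(rules) for x in range(w) for y in range(h)}
    while True:
        undecided = [c for c, opts in grid.items() if len(opts) > 1]
        if not undecided:
            return {c: next(iter(opts)) for c, opts in grid.items()}
        # Pick the cell with the fewest remaining states (lowest entropy),
        # breaking ties at random.
        cell = min(undecided, key=lambda c: (len(grid[c]), rng.random()))
        # Collapse it to one of its remaining states, chosen at random.
        grid[cell] = {rng.choice(sorted(grid[cell]))}
        # Propagate: remove states from neighbors that are no longer allowed.
        stack = [cell]
        while stack:
            cx, cy = stack.pop()
            allowed = set().union(*(rules[t] for t in grid[(cx, cy)]))
            for n in neighbors(cx, cy, w, h):
                reduced = grid[n] & allowed
                if not reduced:
                    return None  # contradiction: no state left for this cell
                if reduced != grid[n]:
                    grid[n] = reduced
                    stack.append(n)

def generate(w, h, rules, rng, attempts=10):
    """Retry from scratch whenever an attempt ends in a contradiction."""
    for _ in range(attempts):
        result = collapse(w, h, rules, rng)
        if result is not None:
            return result
    return None

level = generate(8, 4, RULES, random.Random())
for y in range(4):
    print(" ".join(level[(x, y)][0] for x in range(8)))
```

Restarting from scratch on a contradiction is the simplest possible recovery strategy; backtracking is another option, at the cost of more bookkeeping.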

What’s interesting is that the ruleset doesn’t need to be coded by hand; it can be inferred from an example. A classic use case of this algorithm is 2D pixel-art level design. Given a small sample level, the algorithm churns away and produces similar but wholly unique output. This makes it easy to generate thousands of unique and beautiful levels from a simple source image, but it comes at a price. Even a small level can take hours to fully collapse. In theory, a quantum computer should be able to do this much faster since, after all, quantum mechanics was the inspiration for this algorithm in the first place.
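As a rough illustration of how rules can be inferred from an example, the sketch below scans a tiny sample level and records which tiles were seen next to which, per direction. The `SAMPLE` level, the `DIRECTIONS` table, and the `infer_rules` function are invented for this example. It also only looks at single-tile adjacency, whereas implementations that work from pixel art commonly match larger overlapping patterns.

```python
from collections import defaultdict

# Hypothetical sample level: '~' sky, '.' grass, '#' dirt.
SAMPLE = [
    "~~~~",
    "~..~",
    ".##.",
    "####",
]

# Direction name -> (dx, dy) offset, with y growing downward.
DIRECTIONS = {"right": (1, 0), "left": (-1, 0), "down": (0, 1), "up": (0, -1)}

def infer_rules(sample):
    """Map (tile, direction) to the set of tiles observed on that side."""
    rules = defaultdict(set)
    h, w = len(sample), len(sample[0])
    for y in range(h):
        for x in range(w):
            for name, (dx, dy) in DIRECTIONS.items():
                nx, ny = x + dx, y + dy
                if 0 <= nx < w and 0 <= ny < h:
                    rules[(sample[y][x], name)].add(sample[ny][nx])
    return dict(rules)

rules = infer_rules(SAMPLE)
for (tile, direction), seen in sorted(rules.items()):
    print(f"{tile!r} may have {sorted(seen)} to its {direction}")
```

Anything that never appears in the sample, such as dirt directly below sky, is simply absent from the inferred rules, which is what keeps the generated output looking like the source.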

[Jabrils] spent weeks trying to get things running but ultimately didn’t succeed. However, his efforts give us a peek into the world of quantum computing and this amazing algorithm. We look forward to hearing more about this project from [Jabrils], who is continuing to work on it in his spare time. Maybe give it a shot yourself by learning the basics of quantum computing.
