When it comes to robotics, some of the most interesting work, and certainly the most hilarious, has come from Boston Dynamics and their team of interns kicking robotic dogs over. It’s an impressive feat of engineering, and even if these robotic pack mules are far too loud for their intended use on the battlefield, they’re a great showcase of how cool a bunch of motors can actually be.

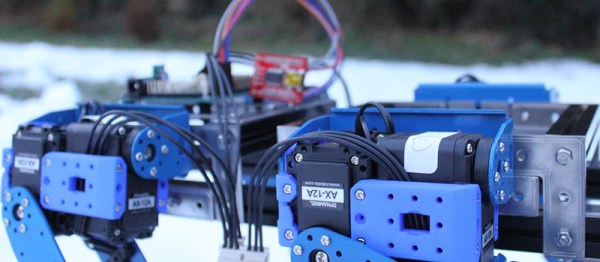

It’s not quite up there with the Boston Dynamics robots, but [Dimitris]’ project for the Hackaday Prize is an almost equally impressive assemblage of motors, 3D printed parts, SLAM processing, and inverse kinematics. I suppose you could kick it over and watch it struggle for laughs, too.

This robotic dog was first modeled in Fusion 360, and was designed with 22 Dynamixel AX-12A robot actuators: big, beefy, serial-controllable servos. Of course, bolting a bunch of motors to a frame is the easy part. The real challenge here is figuring out the kinematics and teaching this robot dog how to walk. This is still a work in progress, but so far [Dimitris] is able to move the spine, keep the feet level with the ground, and have the robot walk a little bit. There’s more to do, but an incredible amount of work has already been done.
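To give a feel for what "figuring out the kinematics" involves, here is a minimal sketch of inverse kinematics for a single leg, treated as a planar two-link chain with an ankle joint that keeps the foot level. This is not [Dimitris]’ code: the function names, the link lengths, and the simplification to two dimensions are all assumptions made for illustration, and the real robot has more joints per leg plus a moving spine to account for.

```python
import math

def leg_ik(x, y, l1, l2):
    """Planar two-link inverse kinematics (illustrative, not the project's code).

    (x, y) is the ankle target in the hip frame; l1 and l2 are the upper and
    lower leg lengths (hypothetical values, same units as x and y).
    Returns (hip, knee, ankle) angles in radians. The ankle angle cancels the
    other two so the foot keeps a fixed orientation relative to the body.
    """
    d2 = x * x + y * y
    # Law of cosines gives the knee angle.
    c = (d2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if c > 1.0 + 1e-9 or c < -1.0 - 1e-9:
        raise ValueError("target is out of reach for this leg")
    c = max(-1.0, min(1.0, c))  # guard against rounding error
    knee = math.acos(c)  # one of the two mirror-image solutions
    hip = math.atan2(y, x) - math.atan2(l2 * math.sin(knee),
                                        l1 + l2 * math.cos(knee))
    ankle = -(hip + knee)  # foot orientation = hip + knee + ankle = 0
    return hip, knee, ankle

def leg_fk(hip, knee, l1, l2):
    """Forward kinematics, used here only to check the IK result."""
    x = l1 * math.cos(hip) + l2 * math.cos(hip + knee)
    y = l1 * math.sin(hip) + l2 * math.sin(hip + knee)
    return x, y

if __name__ == "__main__":
    # Hypothetical 10 cm links, foot slightly forward of and below the hip.
    hip, knee, ankle = leg_ik(0.05, -0.12, 0.10, 0.10)
    print([round(math.degrees(a), 1) for a in (hip, knee, ankle)])
    print(leg_fk(hip, knee, 0.10, 0.10))
```

Running the target back through `leg_fk` is a cheap sanity check that the angles actually put the ankle where it was asked to go. On real hardware, each angle would still need to be mapped to a servo position and clamped to the joint’s mechanical limits.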

The upcoming features for this robot include a RealSense camera mounted on the head for 3D visualization of the surroundings. There are also plans for a tail, loosely based on some of the tentacle robots we’ve seen. It’s going to be a great project when it’s done, and it’s already an excellent entry for the Hackaday Prize.