You don’t always need much to build an FPV rig – especially if you’re willing to take advantage of the power of modern smartphones. [joe57005] is showing off his VR FPV build – a fully-printable small Mecanum wheels car chassis, equipped with an ESP32-CAM board serving a 720×720 stream through WiFi. The car uses regular 9g servos to drive each wheel, giving you omnidirectional movement wherever you want to go. An ESP32 CPU and a single low-res camera might not sound like much if you’re aiming for a VR view, and all the ESP32 does is stream the video feed over WebSockets – however, the simplicity is well-compensated for on the frontend. Continue reading “2022 FPV Contest: ESP32-Powered FPV Car Uses Javascript For VR Magic”
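For a feel of how four Mecanum wheels turn into omnidirectional movement, here is a minimal sketch of the textbook wheel-mixing math. It is not taken from [joe57005]’s firmware: the function name, the sign convention (x forward, y to the left, counter-clockwise rotation positive), and the normalized -1 to 1 speed range are all assumptions made for illustration.

```python
def mecanum_mix(forward, strafe_left, rotate_ccw):
    """Mix a desired body motion into four normalized wheel speeds.

    Inputs are in the range -1..1. The return value is a tuple
    (front_left, front_right, rear_left, rear_right), also in -1..1.
    Assumes the common "X" roller layout; a different layout flips signs.
    """
    front_left = forward - strafe_left - rotate_ccw
    front_right = forward + strafe_left + rotate_ccw
    rear_left = forward + strafe_left - rotate_ccw
    rear_right = forward - strafe_left + rotate_ccw

    wheels = (front_left, front_right, rear_left, rear_right)

    # Scale everything down together if any wheel exceeds full speed,
    # so the direction of travel is preserved.
    largest = max(abs(w) for w in wheels)
    if largest > 1.0:
        wheels = tuple(w / largest for w in wheels)
    return wheels


if __name__ == "__main__":
    print("forward:     ", mecanum_mix(1.0, 0.0, 0.0))
    print("strafe left: ", mecanum_mix(0.0, 1.0, 0.0))
    print("diagonal:    ", mecanum_mix(1.0, 1.0, 0.0))
```

Driving all four wheels the same way moves the car straight, while opposing the diagonal pairs makes it slide sideways. Each normalized speed would still need to be mapped to whatever signal the wheel servos expect, which depends on the hardware.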

Virtual Reality 252 Articles

VR Sickness: A New, Old Problem

Have you ever experienced dizziness, vertigo, or nausea while in a virtual reality experience? That’s VR sickness, and it’s a form of motion sickness. It is not a completely solved problem, and it affects people differently, but it all comes from the same root cause, and there are better and worse ways of dealing with it.

If you’ve experienced a sudden onset of VR sickness, it was most likely triggered by flying, sliding, or some other kind of movement in VR that caused a strong and sudden feeling of vertigo or dizziness. Or perhaps it was not sudden, and was more like a vague unease that crept up, leaving you nauseated and unwell.

Just like car sickness or sea sickness, people are differently sensitive. But the reason it happens is not a mystery; it all comes down to how the human body interprets and reacts to a particular kind of sensory mismatch.

Why Does It Happen?

The human body’s vestibular system is responsible for our sense of balance. It is in turn responsible for many boring, but important, tasks such as not falling over. To fulfill this responsibility, the brain interprets a mix of sensory information and uses it to build a sense of the body, its movements, and how it fits into the world around it.

These sensory inputs come from the inner ear, the body, and the eyes. Usually these inputs are in agreement, or they disagree so politely that the brain can confidently make a ruling and carry on without bothering anyone. But what if there is a nontrivial conflict between those inputs, and the brain cannot make sense of whether it is moving or not? For example, if the eyes say the body is moving, but the joints and muscles and inner ear disagree? The result of that kind of conflict is to feel sick.

Common symptoms are dizziness, nausea, sweating, headache, and vomiting. These messy symptoms are purposeful, for the human body’s response to this particular kind of sensory mismatch is to assume it has ingested something poisonous, and go into a failure mode of “throw up, go lie down”. This is what is happening, to a greater or lesser degree, to those experiencing VR sickness.

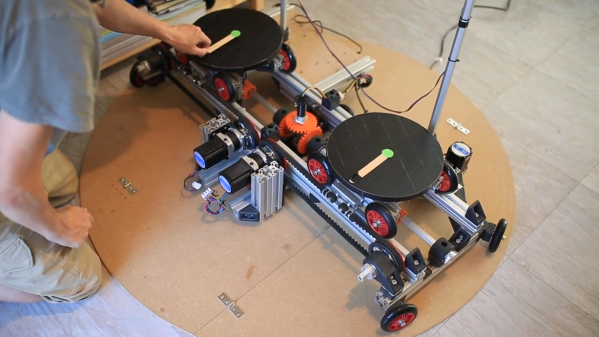

DIY Robotic Platform Aims To Solve Walking In VR

[Mark Dufour]’s TACO VR project is a sort of robotic platform that mimics an omnidirectional treadmill, and aims to provide a compact and easily transportable way to allow a user to walk naturally in VR.

Unenthusiastic about most solutions for allowing a user to walk in VR, [Mark] took a completely different approach. The result is a robotic platform that fits inside a small area whose sides fold up for transport; when packed up, it resembles a taco. When deployed, the idea is to have two disc-like platforms always stay under a user’s feet, keeping the user in one place while they otherwise walk normally.

It’s an ambitious project, but [Mark] is up to the task and the project’s GitHub repository has everything needed to stay up to date, or get involved yourself. The hardware is mainly focused on functionality right now; certainly a fall or stumble while using the prototype looks like it would be uncomfortable at the very best, but the idea is innovative. Continue reading “DIY Robotic Platform Aims To Solve Walking In VR”

Simulating Temperature In VR Apps With Trigeminal Nerve Stimulation

Virtual reality systems are getting better and better all the time, but they remain largely ocular and auditory devices, with perhaps a little haptic feedback added in for good measure. That still leaves 40% of the five canonical senses out of the mix, unless of course this trigeminal nerve-stimulating VR accessory catches on.

While you may be tempted to look at this as a simple “Smellovision”-style olfactory feedback, the work by [Jas Brooks], [Steven Nagels], and [Pedro Lopes] at the University of Chicago’s Human-Computer Integration Lab is intended to provide a simulation of different thermal regimes that a VR user might experience in a simulation. True, the addition to an off-the-shelf Vive headset does waft chemicals into the wearer’s nose using three microfluidics pumps with vibrating mesh atomizers, but it’s the choice of chemicals and their target that makes this work. The stimulants used are odorless, so instead of triggering the olfactory bulb in the nose, they target the trigeminal nerve, which also innervates the lining of the nose and causes more systemic sensations, like the generalized hot feeling of chili peppers and the cooling power of mint. The headset leverages these sensations to change the thermal regime in a simulation.

The video below shows the custom simulation developed for this experiment. In addition to capsaicin’s heat and eucalyptol’s cooling, the team added a third channel with 8-mercapto-p-menthan-3-one, an organic compound that’s intended to simulate the smoke from a generator that gets started in-game. The paper goes into great detail on the various receptors that can be stimulated and the different concoctions needed, and full build information is available in the GitHub repo. We’ll be watching this one with interest.

Continue reading “Simulating Temperature In VR Apps With Trigeminal Nerve Stimulation”

2022 Cyberdeck Contest: Cyberpack VR

Feeling confined by the “traditional” cyberdeck form factor, [adam] decided to build something a little bigger with his Cyberpack VR. If you’ve ever dreamed of being a WiFi-equipped porcupine, then this is the cyberdeck you’ve been waiting for.

Craving the upgradability and utility of a desktop in a more portable format, [adam] took an old commuter backpack and squeezed in a Windows 11 PC, Raspberry Pi, multiple WiFi networks, an ergonomic keyboard, a Quest VR headset, and enough antennas to attract the attention of the FCC. The abundance of network hardware is due to [adam]’s “new interest: a deeper understanding of wifi, and control of my own home network even if my teenage kids become hackers.”

The Quest is set up to run multiple virtual displays via Immersed, and you can relax on the couch while leaving the bag on the floor nearby with the extra long umbilical. One of the neat details of this build is repurposing the bag’s external helmet mount to attach the terminal unit when not in use. Other details we love are the toggle switches and really integrated look of the antenna connectors and USB ports. The way these elements are integrated into the bag makes it feel borderline organic – all the better for your cyborg chic.

For more WiFi backpacking goodness you may be interested in the Pwnton Pack. We’ve also covered other non-traditional cyberdecks including the Steampunk Cyberdeck and the Galdeano. If you have your own cyberdeck, you have until September 30th to submit it to our 2022 Cyberdeck Contest!

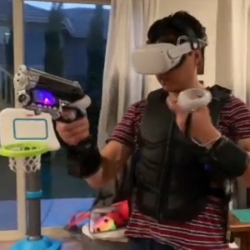

DIY Haptic-Enabled VR Gun Hits All The Targets

This VR Haptic Gun by [Robert Enriquez] is the result of hacking together different off-the-shelf products and tying it all together with an ESP32 development board. The result? A gun frame that integrates a VR controller (meaning it can be tracked and used in VR) and provides mild force feedback thanks to a motor that moves with each shot.

But that’s not all! Using the WiFi capabilities of the ESP32 board, the gun also responds to signals sent by a piece of software intended to drive commercial haptics hardware. That software hooks into the VR game and sends signals over the network telling the gun what’s happening, and [Robert]’s firmware acts on those signals. In short, every time [Robert] fires the gun in VR, the one in his hand recoils in synchronization with the game events. The effect is mild, but when it comes to tactile feedback, a little can go a long way.
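To make the idea of firmware acting on network signals a little more concrete, here is a rough sketch of the decision logic such a device could run for each incoming packet. Everything specific in it is hypothetical: the JSON message shape, the event names, the `intensity` field, and the pulse durations are invented for illustration and are not the protocol used by [Robert]’s firmware or by the haptics software.

```python
import json

# Hypothetical event names and motor pulse lengths in milliseconds.
# These values are illustrative only and are not from the project.
RECOIL_MS = {"fire": 40, "reload": 15}


def recoil_pulse_ms(packet: bytes) -> int:
    """Decide how long to pulse the recoil motor for one packet.

    Returns 0 for anything malformed or unrecognized, so that stray
    network traffic never triggers the motor.
    """
    try:
        message = json.loads(packet.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError):
        return 0
    if not isinstance(message, dict):
        return 0

    event = message.get("event")
    if not isinstance(event, str):
        return 0
    base = RECOIL_MS.get(event, 0)

    intensity = message.get("intensity", 1.0)
    if isinstance(intensity, bool) or not isinstance(intensity, (int, float)):
        return 0
    # Clamp to 0..1 so a bad sender cannot request an overlong pulse.
    intensity = min(max(float(intensity), 0.0), 1.0)
    return round(base * intensity)


if __name__ == "__main__":
    print(recoil_pulse_ms(b'{"event": "fire"}'))
    print(recoil_pulse_ms(b'{"event": "fire", "intensity": 0.5}'))
    print(recoil_pulse_ms(b"not json"))
```

Keeping the decision in a small pure function like this makes it easy to test on a desktop before anything is wired to a real motor.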

The fact that this kind of experimentation is easily and affordably within the reach of hobbyists is wonderful, and VR certainly has plenty of room for amateurs to break new ground, as we’ve seen with projects like low-cost haptic VR gloves.

[Robert] walks through every phase of his gun’s design, explaining how he made various square pegs fit into round holes, and provides links to parts and resources in the project’s GitHub repository. There’s a video tour embedded below the page break, but if you want to jump straight to a demonstration in Valve’s Half-Life: Alyx, here’s a link to test firing at 10:19 in.

There are a number of improvements waiting to be done, but [Robert] definitely understands the value of getting something working, even if it’s a bit rough. After all, nothing fills out a to-do list or surfaces hidden problems like a prototype. Watch everything in detail in the video tour, embedded below.

Continue reading “DIY Haptic-Enabled VR Gun Hits All The Targets”

Svelte VR Headsets Coming?

According to Stanford and NVIDIA researchers, VR adoption is slowed by the bulky headsets required. They want to offer a slim solution. A SIGGRAPH paper earlier this year lays out their plan, or you can watch the video below. A second video, also below, covers some technical questions and answers.

The traditional headset has a display right in front of your eyes. Special lenses can make them skinnier, but this new method provides displays that can be a few millimeters thick. The technology seems pretty intense and appears to create a hologram at different apparent places using a laser, a geometric phase lens, and a pupil-replicating waveguide.