Why is it always a helium leak? It seems whenever there’s a scrubbed launch or a narrowly averted disaster, space exploration just can’t get past the problems of helium plumbing. We’ve had a bunch of helium problems lately, most famously with the leaks in Starliner’s thruster system that have prevented astronauts Butch Wilmore and Suni Williams from returning to Earth in the spacecraft, leaving them on an extended mission to the ISS. Ironically, the launch itself was troubled by a helium leak before the rocket ever left the ground. More recently, the Polaris Dawn mission, which is supposed to feature the first spacewalk by a private crew, was scrubbed by SpaceX due to a helium leak on the launch tower. And to round out the helium woes, we now have news that the Peregrine mission, which was supposed to carry the first commercial lander to the lunar surface but instead ended up burning up in the atmosphere and crashing into the Pacific, failed due to — you guessed it — a helium leak.

azure: 6 Articles

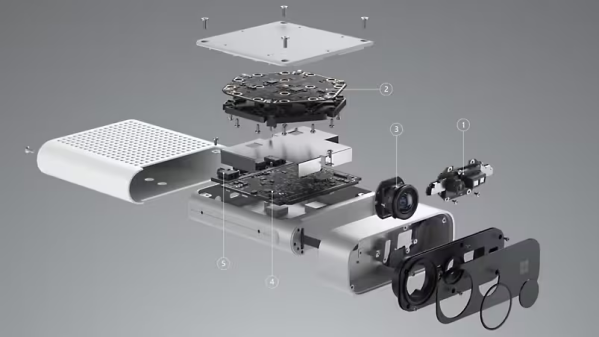

Microsoft Discontinues Kinect, Again

The Kinect is a depth-sensing camera peripheral originally designed as an accessory for the Xbox gaming console, and it quickly found its way into hobbyist and research projects. After a second version, Microsoft abandoned the idea of using it as a motion sensor for gaming and discontinued it. The technology did, however, evolve into what eventually became the Azure Kinect DK (spelling out ‘developer kit’ presumably made the name too long). Sadly, it has now also been discontinued.

The original Kinect was a pretty neat piece of hardware for the price, and a few years ago we noted that the newest version was considerably smaller and more capable. It had a depth sensor with selectable field of view for different applications, a high-resolution RGB video camera that integrated with the depth stream, integrated IMU and microphone array, and it worked to leverage machine learning for better processing and easy integration with Azure. It even provided a simple way to sync multiple units together for unified processing of a scene.

In many ways the Kinect gave us all a glimpse of the future because at the time, a depth-sensing camera with a synchronized video stream was just not a normal thing to get one’s hands on. It was also one of the first consumer hardware items to contain a microphone array, which allowed it to better record voices, localize them, and isolate them from other noise sources in a room. It led to many, many projects and we hope there are still more to come: Microsoft might not be making them anymore, but it is licensing out the technology to companies that want to build similar devices.

Train All The Things Contest Update

Back in January when we announced the Train All the Things contest, we weren’t sure what kind of entries we’d see. Machine learning is a huge and rapidly evolving field, after all, and the traditional barriers that computationally intensive processes face have been falling just as rapidly. Constraints are fading away, and we want you to explore this wild new world and show us what you come up with.

Where Do You Run Your Algorithms?

To give your effort a little structure, we’ve come up with four broad categories:

- Machine Learning on the Edge

  - Edge computing, where systems reach out to cloud resources but run locally, is all the rage. It allows you to leverage the power of ~~other people’s computers~~ the cloud for training a model, which is then executed locally. Edge computing is a great way to keep your data local.

- Machine Learning on the Gateway

  - Pis, old routers, what-have-yous – we’ve all got a bunch of devices lying around that bridge the space between your local world and the cloud. What can you come up with that takes advantage of this unique computing environment?

- Machine Learning in the Cloud

  - Forget about subtle: this category unleashes the power of the cloud for your application. Whether it’s Google, Azure, or AWS, show us what you can do with all that raw horsepower at your disposal.

- Artificial Intelligence Blinky

  - Everyone’s “hardware ‘Hello, world’” is blinking an LED, and this is the machine learning version of that. We want you to use a simple microprocessor to run a machine learning algorithm. Amaze us with what you can make an Arduino do.

These Hackers Trained Their Projects, You Should Too!

We’re a little more than a month into the contest. We’ve seen some interesting entries, but of course we’re hungry for more! Here are a few that have caught our eye so far:

- Intelligent Bat Detector – [Tegwyn☠Twmffat] has bats in his… backyard, so he built this Jetson Nano-powered device to capture their calls and classify them by species. It’s a fascinating adventure at the intersection of biology and machine learning.

- Blackjack Robot – RAIN MAN 2.0 is [Evan Juras]’ cure for the casino adage of “The house always wins.” We wouldn’t try taking the Raspberry Pi card counter to Vegas, but it’s a great example of what YOLO can do.

- AI-enabled Glasses – AI meets AR in ShAIdes, [Nick Bild]’s sunglasses equipped with a camera and Nano to provide a user interface to the world. Wave your hand over a lamp and it turns off. Brilliant!

You’ve got till noon Pacific time on April 7, 2020 to get your entry in, and four winners from each of the four categories will be awarded a $100 Tindie gift card, courtesy of our sponsor Digi-Key. It’s time to ramp up your machine learning efforts and get a project entered! We’d love to see more examples of straight cloud AI applications, and the AI blinky category remains wide open at this point. Get in there and give machine learning a try!

New Kinect Sensor Switch Focus From Gamers To Developers

Microsoft’s Kinect may not have found success as a gaming peripheral, but recognizing that a depth sensor is too cool to leave for dead, development continued even after Xbox gaming peripherals were discontinued. This week their latest iteration emerged and we can get it in the form of the Azure Kinect DK. This is a developer’s kit focused on exploring new applications for this technology, not a gaming peripheral we had to hack before we could use it in our own projects.

Packaged into a peripheral that plugs into a PC via USB-C, it is more than the core depth sensor module announced last year but less than a full consumer product. Browsing its 10-page specification (PDF), which includes comparisons to the second-generation Kinect sensor bar, we see how this technology has evolved. Physical size and weight have dropped, as has power consumption. Auxiliary capabilities have improved with an expanded microphone array and an IMU with a gyro in addition to an accelerometer, and the RGB camera has been upgraded to 4K resolution.

But the star of the show is a new continuous-wave time-of-flight depth sensor, presented at the 2018 IEEE ISSCC conference. (Full text requires IEEE membership, but a digest form is available via ResearchGate.) Among its many advancements, we expect the biggest impact to be its field of view. The default of 75 x 65 degrees is already better than its predecessors (64 x 45 for the first-generation Kinect, 70 x 60 for the second), but there is an option to trade resolution for coverage by switching to a wide-angle mode of 120 x 120 degrees. That is significantly wider than other depth cameras like Intel’s RealSense D400 series or Occipital’s Structure.
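To put those field-of-view numbers in perspective, here is a rough back-of-the-envelope sketch that converts each angle pair into the width and height of the scene covered at a given distance. It assumes the figures quoted above are full horizontal x vertical angles and treats each sensor as an ideal pinhole camera; both are our simplifications, not anything taken from the specification. The names `coverage_width`, `coverage_table`, and `SENSORS` are made up for this illustration.

```python
import math

# Field-of-view figures as quoted in the article, in degrees.
# Assumption: each pair is (horizontal, vertical) and each value is a full angle.
SENSORS = {
    "Kinect (first generation)": (64, 45),
    "Kinect (second generation)": (70, 60),
    "Azure Kinect DK (default)": (75, 65),
    "Azure Kinect DK (wide)": (120, 120),
}


def coverage_width(fov_degrees: float, distance_m: float) -> float:
    """Extent of the scene covered at distance_m by a full angle of fov_degrees.

    Ideal pinhole model: extent = 2 * d * tan(fov / 2). Lens distortion and
    the real shape of the sensor footprint are ignored.
    """
    if not 0 < fov_degrees < 180:
        raise ValueError("field of view must be between 0 and 180 degrees")
    return 2.0 * distance_m * math.tan(math.radians(fov_degrees / 2.0))


def coverage_table(distance_m: float = 2.0) -> dict:
    """Map each sensor name to its (width, height) coverage in meters."""
    return {
        name: (coverage_width(h, distance_m), coverage_width(v, distance_m))
        for name, (h, v) in SENSORS.items()
    }


if __name__ == "__main__":
    for name, (width, height) in coverage_table(2.0).items():
        print(f"{name}: {width:.2f} m x {height:.2f} m at 2 m")
```

Under this simplified model, the wide mode covers more than twice the width of the default mode at the same distance, which is why we expect it to matter for room-scale projects.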

Another interesting feature is built-in synchronization. Many projects using multiple Kinect sensors ran into problems because they interfered with each other. People hacked around the problem, of course, but now they don’t have to: commodity 3.5 mm jacks allow multiple Azure Kinect DK units to be daisy chained together so they play nicely and take turns.

From its name we were worried this product would require Microsoft’s Azure cloud service in some way and be crippled without it. Based on information released so far, it appears developers have access to all the same data streams as previous sensors. Azure tie-in takes the form of optional SDKs that make it easier to do things like upload data for processing in Azure cloud-based recognition services.

And finally, Azure Kinect DK’s price tag of $399 is significantly higher than a Kinect game peripheral, but it is a low volume product for developers. Perhaps high volume consumer products built on this technology will cost less, but that remains to be seen. In the meantime, you have alternative tools for solving similar problems. For example, if you are building your own AR headset, you might use Intel’s latest RealSense camera for vision-based inside-out motion tracking.

Microsoft Kinect Episode IV: A New Hope

The history of Microsoft Kinect has been that of a technological marvel in search of the perfect market niche. Coming out of Microsoft’s Build 2018 developer conference, we learn Kinect is making another run. This time it’s taking on the Internet of Things mantle as Project Kinect for Azure.

Kinect was revolutionary in making a quality depth camera system available at a consumer price point. The first and second generation Kinect were peripherals for Microsoft’s Xbox gaming consoles. They wowed the world with possibilities and, thanks in large part to an open source driver bounty spearheaded by Adafruit, Kinect found an appreciative audience in robotics, interactive art, and other hacking communities. Sadly its novelty never translated to great success in its core gaming market and Kinect as a gaming peripheral was eventually discontinued.

For its third generation, Kinect retreated from gaming and found a role in Microsoft’s HoloLens AR headset running “backwards”: tracking the user’s environment instead of the user’s movement. The high cost of a HoloLens put it out of reach of most people, but as a head-mounted battery-powered device, it pushed Kinect technology to shrink in physical size and power consumption.

This upcoming fourth generation takes advantage of that evolution and the launch picture is worth a thousand words all on its own: instead of a slick end-user commercial product, we see a populated PCB awaiting integration. The quoted power draw of 225-950mW is high by modern battery-powered device standards but undeniably a huge reduction from previous generations’ household AC power requirement.

Microsoft’s announcement heavily emphasized how this module will work with their cloud services, but we hope it can be persuaded to run independently from Microsoft’s cloud just as its predecessors could run independent of game consoles. This will be a big factor for adoption by our community, second only to the obvious consideration of price.

[via Engadget]

Make Any PC A Thousand Dollar Gaming Rig With Cloud Gaming

The best gaming platform is a cloud server with a $4,000 graphics card you can rent when you need it.

[Larry] has done this sort of thing before with Amazon’s EC2, but recently Microsoft has been offering beta access to some of NVIDIA’s Tesla M60 graphics cards. As long as you have a fairly beefy connection that can support 30 Mbps of streaming data, you can play just about any imaginable game at 60fps on the ultimate settings.
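If you are on a metered connection, it is worth checking what a sustained 30 Mbps stream adds up to. The sketch below simply converts a bit rate into data transferred per hour, assuming the stream runs at the full rate the whole time and using decimal units (1 GB = 10^9 bytes). The function name is made up for this example.

```python
def stream_gigabytes_per_hour(megabits_per_second: float) -> float:
    """Data transferred in one hour at a constant bit rate, in decimal GB.

    Assumes the stream holds the given rate for the full hour; real game
    streams vary with scene complexity and encoder settings.
    """
    if megabits_per_second < 0:
        raise ValueError("bit rate cannot be negative")
    bits_per_hour = megabits_per_second * 1_000_000 * 3600
    bytes_per_hour = bits_per_hour / 8
    return bytes_per_hour / 1_000_000_000


if __name__ == "__main__":
    rate = 30  # Mbps, the figure quoted in the article
    print(f"{rate} Mbps is about {stream_gigabytes_per_hour(rate):.1f} GB per hour")
```

At the quoted 30 Mbps that works out to 13.5 GB for every hour of play, so a data cap could end up costing more than the cloud time.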

It takes a bit of configuration magic and quite a few different utilities to get it all going, but in the end [Larry] is able to play Overwatch on max settings at a nice 60fps for $1.56 an hour. Considering that the price of the graphics card alone would buy about 2,500 hours of play time, this is a great deal for the casual gamer.
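The break-even arithmetic is easy to check for yourself. This minimal sketch uses the $4,000 card price and $1.56 hourly rate quoted above; the ten hours a week of play is purely our assumption for illustration, and the function names are hypothetical. It ignores the rest of the PC, electricity, and resale value.

```python
def break_even_hours(card_price_usd: float, hourly_rate_usd: float) -> float:
    """Hours of rented cloud time that cost as much as buying the card."""
    if hourly_rate_usd <= 0:
        raise ValueError("hourly rate must be positive")
    return card_price_usd / hourly_rate_usd


def years_to_break_even(card_price_usd: float, hourly_rate_usd: float,
                        hours_per_week: float) -> float:
    """Years of play at hours_per_week before renting costs more than buying."""
    if hours_per_week <= 0:
        raise ValueError("hours per week must be positive")
    weeks = break_even_hours(card_price_usd, hourly_rate_usd) / hours_per_week
    return weeks / 52


if __name__ == "__main__":
    price, rate = 4000, 1.56   # figures quoted in the article
    weekly_hours = 10          # assumed casual-gamer play time, not from the article
    print(f"Break-even after about {break_even_hours(price, rate):.0f} hours")
    print(f"That is roughly {years_to_break_even(price, rate, weekly_hours):.1f} "
          f"years at {weekly_hours} hours a week")
```

With the article's numbers the break-even point lands at roughly 2,564 hours, in line with the 2,500 figure above.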

It’s interesting to see computers start to become a rentable resource. People have been attempting streaming computers for a while now, but this one is seriously impressive. With such a powerful graphics card you could use this for anything intensive. Need a super high-powered video editing station for a day or two? A CAD station to make anyone jealous? Just pay a few dollars of cloud time and get to it!