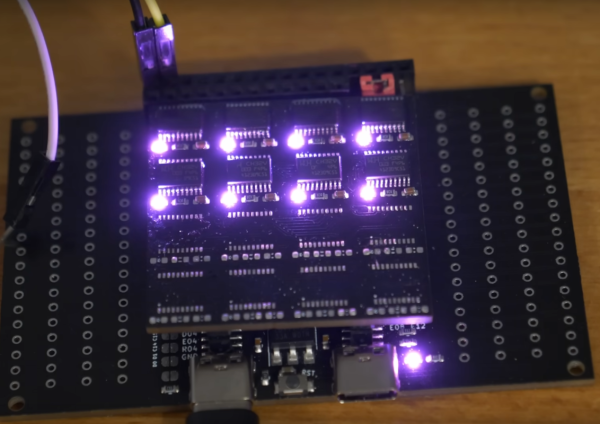

Raspberry Pi clusters have been a favorite project of homelabbers and distributed computing enthusiasts since the platform first launched over a decade ago, and for good reason. For an extremely low price, this hardware makes it possible to experiment with parallel computing, something that otherwise isn’t easily accessible without lots of time, money, and hardware. This is even more true with the compute modules, as their size and cost make some staggering builds possible, like this cluster sporting 112 GB of RAM.

The project is based on the NanoCluster, a board that can hold seven compute modules in a form factor which, as [Christian] describes it, is about the size of a coffee mug. That means the cluster has not only a generous amount of RAM but also 28 processor cores to work with. Putting the hardware together is the easy part, though; [Christian] wanted to find the absolute easiest way of managing a system like this and decided on GitOps. This is a method of maintaining a server where the desired system state is stored in Git, and automation continuously ensures that the running environment on the hardware matches what’s in the repository.
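To make the idea concrete, here is a minimal sketch of the reconciliation step at the heart of GitOps: compare the desired state with what a node is actually running, then work out what needs to change. This is an illustration only and not [Christian]’s implementation. The service names, version strings, and the `plan` and `reconcile` helpers are all hypothetical, and a real setup would read the desired state from files in the repository rather than from a dictionary.

```python
from typing import Dict, List, Tuple

# Hypothetical state model: service name -> version string.
State = Dict[str, str]
Action = Tuple[str, str, str]  # (verb, service, version)


def plan(desired: State, actual: State) -> List[Action]:
    """Return the actions needed to make `actual` match `desired`."""
    actions: List[Action] = []
    for name, version in sorted(desired.items()):
        if name not in actual:
            actions.append(("install", name, version))
        elif actual[name] != version:
            actions.append(("upgrade", name, version))
    # Anything running that the repository does not mention gets removed.
    for name in sorted(actual):
        if name not in desired:
            actions.append(("remove", name, actual[name]))
    return actions


def reconcile(desired: State, actual: State) -> State:
    """Apply the plan to a copy of `actual` and return the resulting state."""
    result = dict(actual)
    for verb, name, version in plan(desired, actual):
        if verb == "remove":
            del result[name]
        else:
            result[name] = version
    return result


# Stand-in for the desired state stored in Git (hypothetical values).
desired = {"dns": "2.1", "metrics": "1.8"}

# A freshly swapped-in node starts empty; an older node has drifted.
fresh_node: State = {}
drifted_node = {"dns": "2.0", "old-agent": "0.3"}

print(plan(desired, fresh_node))
print(plan(desired, drifted_node))
```

Running the same comparison on a schedule, or whenever the repository changes, is what keeps a node in line: an empty node and a drifted node both end up in the state the repository describes, with no per-node instructions needed.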

For this cluster, GitOps means that the nodes themselves can be swapped in and out, with new nodes receiving their instructions and configuring themselves without intervention. Updates and changes made in Git are pushed to the nodes automatically as well, so very little needs to be done manually. In much the same way that immutable Linux distributions move all of the hassle of administering a system to something like a config file, approaches like GitOps do the same for servers and clusters like this, and it’s worth checking out [Christian]’s project to get an idea of just how straightforward it can be now.