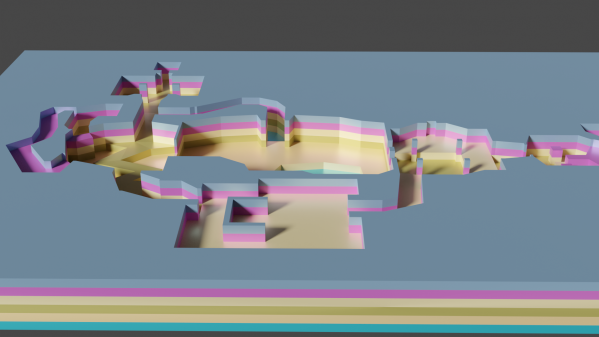

From around 2012 onwards, there was a 3D viewing and VR renaissance in the entertainment industry. That hardware has grown in popularity, even if it’s not yet mainstream. However, 3D tech goes back much further, as [Nicole] shows us with a look at Sega’s ancient 8-bit 3D glasses [via Adafruit].

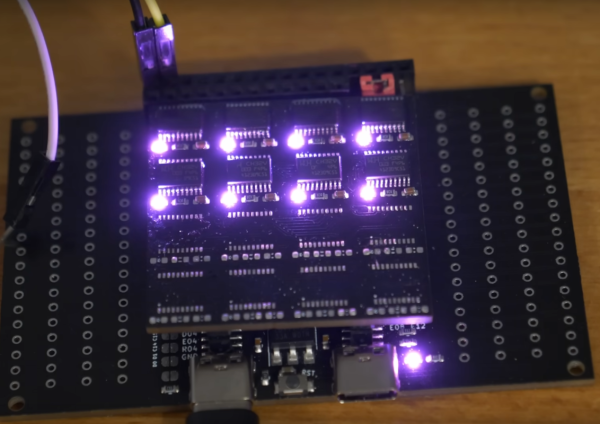

[Nicole]’s pair of Sega shutter glasses is battered and bruised, but she notes more modern versions are available using the same basic idea. The technology is based on liquid-crystal shutters, one for each eye. By alternately blocking each eye in time with the display, the shutters show the left and right eyes different images, making it possible to create a 3D-vision effect even with very limited display hardware.

The glasses can be plugged directly into a Japanese Sega Master System, which hails from the mid-1980s. The console sends out AC signals to trigger the liquid-crystal shutters via a humble 3.5 mm TRS jack. Games like Space Harrier 3D, which were written to use the glasses, effectively run at a half-speed refresh rate. This is because the 60 Hz NTSC or 50 Hz PAL screen refresh rate is split in half to serve each eye. Unfortunately, though, the glasses don’t work on modern LCD screens, as their inherent display lag throws off the timing of the pulses the console sends to the glasses.
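To put rough numbers on that, here is a minimal Python sketch of the arithmetic. It is a toy model, not a description of how the console's hardware actually works: the function names are made up for illustration, which eye gets the first field is an arbitrary assumption, and the lag figure in the usage note is a hypothetical value rather than a measurement of any real screen.

```python
def per_eye_rate(refresh_hz: float) -> float:
    """Each eye only sees every other refresh, so its rate is half the screen's."""
    return refresh_hz / 2


def eye_for_field(field_index: int) -> str:
    """Which shutter is open for a given refresh.

    Assumption: even-numbered refreshes go to the left eye. The real
    ordering is not stated in the article.
    """
    return "left" if field_index % 2 == 0 else "right"


def lag_in_fields(lag_ms: float, refresh_hz: float) -> float:
    """Express a display's processing lag as a number of screen refreshes."""
    return lag_ms * refresh_hz / 1000.0


if __name__ == "__main__":
    for name, hz in (("NTSC", 60.0), ("PAL", 50.0)):
        print(f"{name}: {hz:.0f} Hz screen, {per_eye_rate(hz):.0f} Hz per eye")

    # Hypothetical lag value, chosen only to illustrate the problem.
    print(f"{lag_in_fields(1000 / 60, 60.0):.2f} refreshes of lag")
```

In this toy model, a screen that delays the picture by about one refresh (roughly 16.7 ms at 60 Hz) would show the left-eye image while the right shutter is open, which is one way to picture why the console's pulses no longer line up with what's on an LCD.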

It’s a neat look at an ancient bit of display tech that had a small resurgence with 3DTVs in the 2010s. By and large, it seems like humans just aren’t that into 3D, at least short of a full VR experience. Meanwhile, if you’re wondering what 8-bit 3D looked like, we’ve got a 3D video (!) after the break.