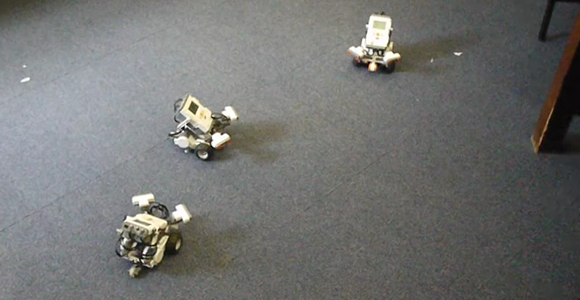

Somewhere down the road, you’ll find that your almighty autonomous robot chassis is going to need some sensor feedback. Otherwise, that next small step down the road may end with a blind leap off the coffee table. The first low-cost sensors we might throw at this problem would be sonars or IR rangefinders, but there’s a problem: those sensors only really report the distance to whatever sits in the narrow spot directly ahead of them.

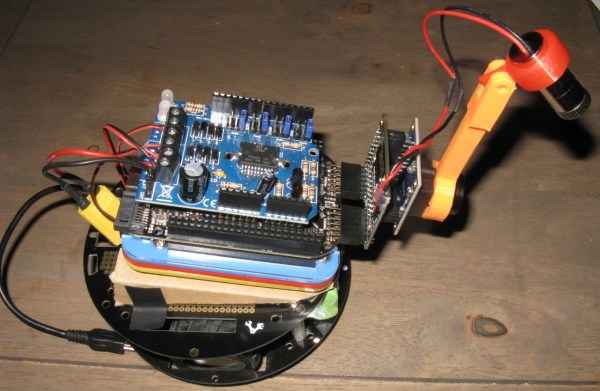

Rest assured, [Jonathan] wrote in to let us know that he’s got you covered. Combining a line laser, camera, and an FPGA, he’s able to detect obstacles that fall within the field of view of the camera and laser.

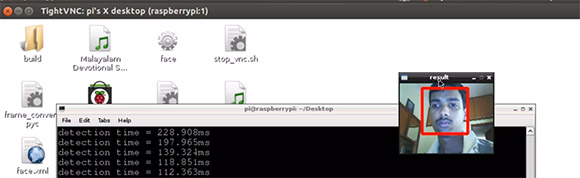

If you thought writing algorithms in software was tricky, wait till you try hardware! (We know: division sucks!) [Jonathan] knows no fear though; he’s performing gradient computation on the FPGA directly to detect the laser in the camera image at a wicked 30 frames-per-second. Why roll up your sleeves and take the hardware route, you might ask? If we took a CPU-based approach at the tiny embedded-robot scale, [Jonathan] estimates we’d manage a mere 10 frames-per-second. With an FPGA, we’re able to process images about as fast as they’re received.
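To give a feel for what gradient-based laser detection means, here is a rough software sketch in Python. It is not [Jonathan]’s HDL, and it assumes a few things the write-up doesn’t spell out: a grayscale image stored as a list of rows, a roughly horizontal laser line, and a made-up function name (`detect_laser_line`) and `threshold` parameter. For each column, it looks for the sharpest dark-to-bright step followed by the sharpest bright-to-dark step and reports the middle of the bright band.

```python
def detect_laser_line(image, threshold=50):
    """Estimate, per column, the row where a bright horizontal line sits.

    image: list of rows, each a list of grayscale values (0-255).
    threshold: hypothetical minimum edge strength for a valid detection.
    Returns one entry per column: the line's center row, or None.
    """
    height = len(image)
    width = len(image[0])
    rows = []
    for x in range(width):
        best_rise, rise_y = 0, None   # strongest dark-to-bright step
        best_fall, fall_y = 0, None   # strongest bright-to-dark step
        for y in range(1, height):
            # Vertical gradient: difference between neighbouring rows.
            grad = image[y][x] - image[y - 1][x]
            if grad > best_rise:
                best_rise, rise_y = grad, y
            if -grad > best_fall:
                best_fall, fall_y = -grad, y
        # Accept only a strong rising edge followed by a strong falling edge.
        if best_rise >= threshold and best_fall >= threshold and fall_y > rise_y:
            # Bright band spans rows rise_y .. fall_y - 1; report its center.
            rows.append((rise_y + fall_y - 1) / 2)
        else:
            rows.append(None)
    return rows


# Tiny 6x3 test frame: a two-row line in column 0, a one-row line in
# column 1, and nothing in column 2.
frame = [
    [0,   0,   0],
    [0,   0,   0],
    [200, 0,   0],
    [200, 0,   0],
    [0,   200, 0],
    [0,   0,   0],
]
print(detect_laser_line(frame))  # [2.5, 4.0, None]
```

Note that the inner loop only needs subtraction and comparison on neighbouring pixels, which is the kind of work that maps nicely onto a streaming hardware pipeline; the single division by two at the end is just a shift.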

[Jonathan] is using the Logi Board, a Kickstarter success we’ve visited in the past, and all of his code is up on the Githubs. If you crack it open, you’ll also find that many of his modules are Wishbone compliant, so building your own projects from just a few of these parts is much easier than trying to rip useful features out of a sea of hairy logic.

With computer-vision hardware keeping such a low profile in the hobbyist community, we’re excited to hear more about [Jonathan’s] FPGA-based robotics endeavors.
