[Andrej Karpathy] recently released llm.c, a project that focuses on LLM training in pure C, once again showing that working with these tools isn’t necessarily reliant on sprawling development environments. GPT-2 may be older but is perfectly relevant, being the granddaddy of modern LLMs (large language models) with a clear heritage to more modern offerings.

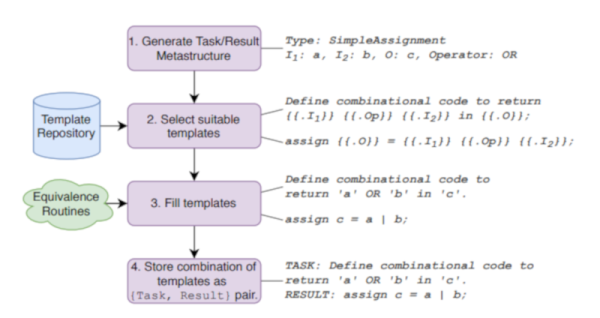

LLMs are fantastically good at communicating despite not actually knowing what they are saying, and training them usually relies on PyTorch deep learning library, itself written in Python. llm.c takes a simpler approach by implementing the neural network training algorithm for GPT-2 directly. The result is highly focused and surprisingly short: about a thousand lines of C in a single file. It is a highly elegant process that does the same thing the bigger, clunkier methods accomplish. It can run entirely on a CPU, or it can take advantage of GPU acceleration, where available.

This isn’t the first time [Andrej Karpathy] has bent his considerable skills and understanding towards boiling down these sorts of concepts into bare-bones implementations. We previously covered a project of his that is the “hello world” of GPT, a tiny model that predicts the next bit in a given sequence and offers low-level insight into just how GPT (generative pre-trained transformer) models work.