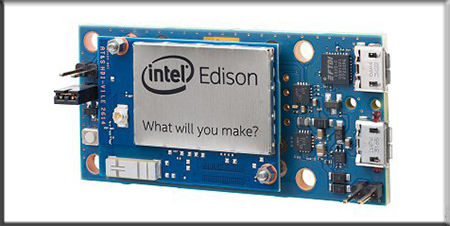

Announced at the beginning of this year, Intel’s Edison is the chipmaker’s latest foray into the world of low-power, high-performance computing. Originally envisioned as an x86 computer stuffed into an SD card form factor, this tiny platform for wearables, consumer electronics designers, and the Internet of Things has apparently been redesigned a few times over the last few months. Now, Intel has finally unleashed it on the world. It’s still tiny, it’s still based on the x86 architecture, and it’s turning out to be a very interesting platform.

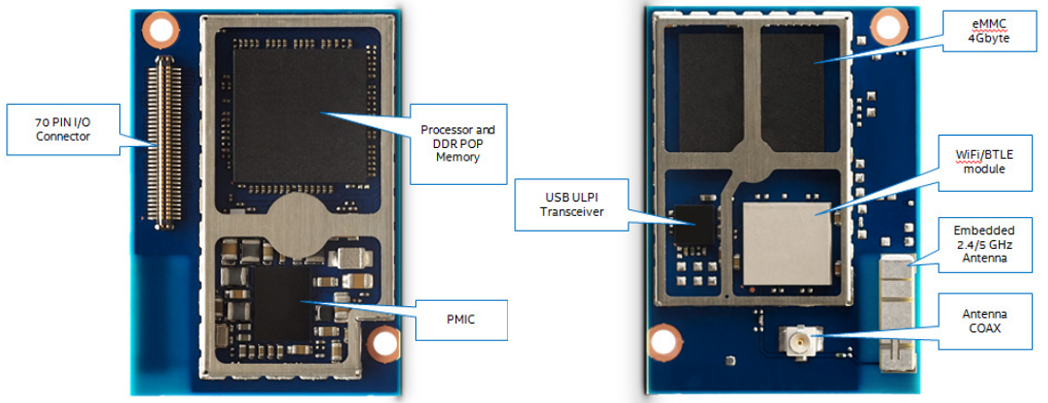

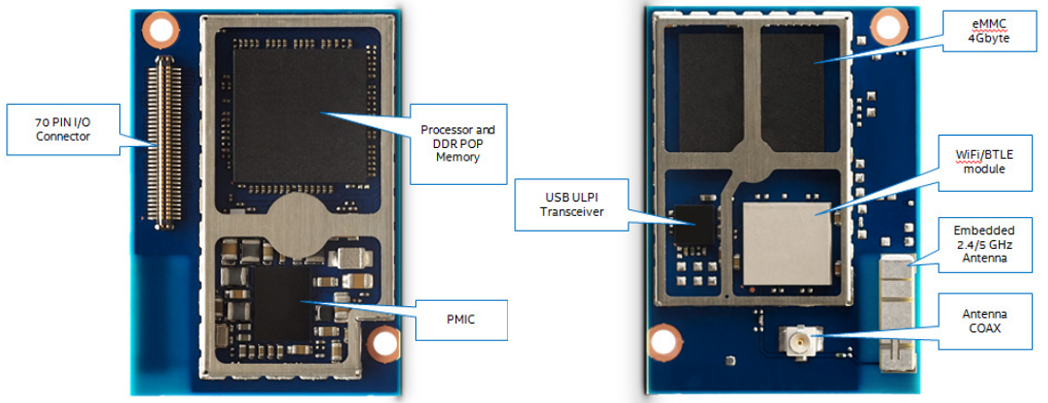

The key feature of the Edison is, of course, the Intel CPU. It’s a 22nm SoC with dual cores running at 500 MHz. Unlike so many other IoT and micro-sized devices out there, the chip in this device, an Atom Z34XX, has an x86 architecture. Also on board are 4 GB of eMMC Flash, 1 GB of DDR3, and an Intel Quark microcontroller, the same as found in the Intel Galileo, running at 100 MHz. The best part? Edison will retail for about $50. That’s a dual core x86 platform in a tiny footprint for just a few bucks more than a Raspberry Pi.

When the Intel Edison was first announced, speculation ran rampant that it would take on the form factor of an SD card. This is not the case. Instead, the Edison has a footprint of 35.5 mm x 25.0 mm, just barely larger than an SD card. Dropping the SD card form factor is a great idea: instead of being limited to the nine pins present on SD cards and platforms such as the Electric Imp, Intel is using a 70-pin connector to break out a bunch of pins, including an SD card interface, two UARTs, two I²C busses, SPI with two chip selects, I²S, twelve GPIOs with four capable of PWM, and a USB 2.0 OTG controller. There is also a pair of radio modules on this tiny board, making it capable of 802.11 a/b/g/n and Bluetooth 4.0.
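To put "just barely larger than an SD card" into numbers, here is a quick back-of-the-envelope sketch. The Edison dimensions come from the figures above; the 32.0 mm x 24.0 mm full-size SD card outline is an assumption brought in for comparison and is not stated in this article.

```python
# Edison footprint as quoted above (mm).
EDISON_MM = (35.5, 25.0)
# Assumption: full-size SD card outline (mm); not taken from this article.
SD_CARD_MM = (32.0, 24.0)


def area_mm2(dims):
    """Return the rectangular footprint area in square millimetres."""
    width, height = dims
    return width * height


edison_area = area_mm2(EDISON_MM)
sd_area = area_mm2(SD_CARD_MM)
ratio = edison_area / sd_area

print(f"Edison:  {edison_area:.1f} mm^2")
print(f"SD card: {sd_area:.1f} mm^2")
print(f"Edison is about {100 * (ratio - 1):.0f}% larger by area")
```

Under that assumption the module is only a few millimetres bigger in each direction, which is the price paid for trading nine pins for seventy.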

The Edison will support Yocto Linux 1.6 out of the box, but because this is an x86 architecture, there is an entire universe of Linux distributions that will also run on this tiny board. It might be theoretically possible to run a version of Windows natively on this module, but this raises the question of why anyone would want to.

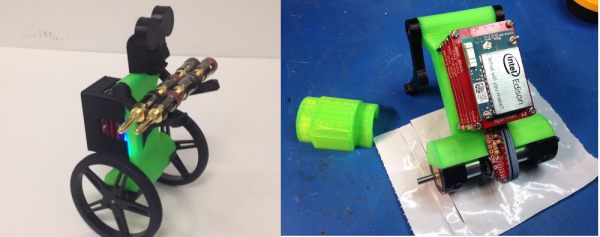

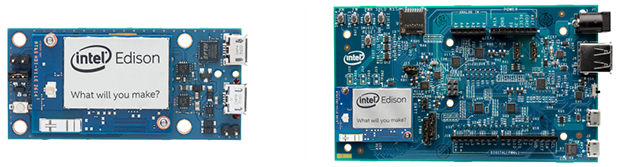

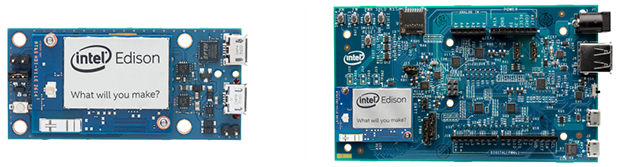

The first round of Edison modules will be used with either a small breakout board that provides basic functionality, solder points, a battery charger power input, and two USB ports (one OTG port), or a larger Edison board for Arduino that includes the familiar Arduino pin header arrangement and breakouts for everything. The folks at Intel are a generous bunch, and in an effort to put these modules in the next generation of Things for Internet, have included Mouser and Digikey part numbers for the 70-pin header (about $0.70 for quantity one). If you want to create your own breakout board or include Edison in a product design, Intel makes that easy.

There is no word on where or when the Edison will be available. Someone from Intel will be presenting at Maker Faire NYC in less than two weeks, though, and we already have our media credentials. We’ll be sure to get a hands-on then. I did grab a quick peek at the Edison while I was in Vegas for Defcon, but I have very little to write about that experience except for the fact that it existed in August.

Update: You can grab an Edison dev kit at Make ($107, with the Arduino breakout) and Sparkfun. Sparkfun has a ton of boards made for the Edison. It’s pretty cool.

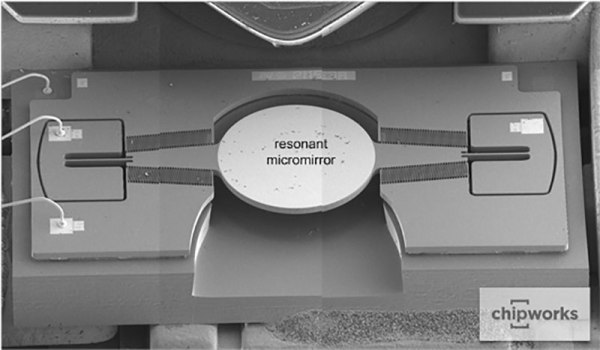

It does this by using an impressive miniaturized projector made of three tiny components: an IR laser, a line lens and a resonant micromirror. The line lens takes the point of light from the IR laser and turns it into a flat horizontal line. This is then bounced off the resonant micromirror, which is manufactured in a single piece and moved by a torsional drive system, where an electrostatic signal twists the mirror. The system is described in more detail in this PDF of a presentation by the makers, ST Micro. This combination of lens and rapidly moving mirror creates a projected pattern of light, and its reflection is detected by the IR camera on the other side of the module. That data is used to create a 3D model that can be used to detect gestures, faces, and other objects. It’s a neat insight into how you can miniaturize things by approaching them in a different way.
