It was one of those weeks last week at Hackaday’s home office. My mother-in-law handed me her favorite power bank and said “it’s not charging”. She had every expectation that I’d open it up, desolder the weary pouch inside, scrounge a LiPo out of some corner of the basement, and have it back up and running before the weekend. And of course that’s what happened, although maybe it looks a little worse for wear because it was hard to open the sealed case without excessive force. Sorry about that!

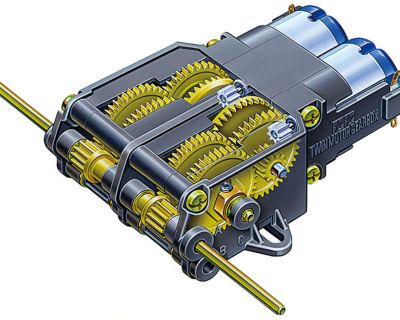

Then on the weekend, I finally got fed up with the decomposing foam on the face seal of my FPV goggles. It was leaking light all over the place. Of course I could have bought a new seal, but then I’d have had to wait a week or so for delivery. So I pulled the velcro backing off, tossed it on the flatbed scanner, pulled the image up in Inkscape, converted it to G-code, and cut a couple of seals out of EVA foam on the laser. Not only are they essentially indestructible, but I was able to customize them a little bit, and the fit is now better than ever.

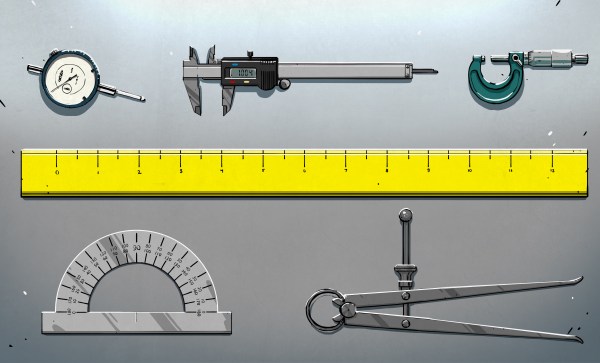

And then one of our neighbors bought a new garage door fob, flipped the DIP switches into the right configuration, and couldn’t figure out why it wouldn’t open the garage door. Knock knock knock. Using the tried-and-true RF probe that everyone with a scope probe has sitting around, namely hooking the ground pin to the tip and putting the radio device in the loop, I could see that the sense of the DIP switches was inverted from what it said in the instructions. That was a fun little puzzle.

It was the garage door opener that got me thinking about how normal people would handle any of these situations. “How do the normies even get by?” were the exact words that went through my head. And let’s face it: we’re not entirely normal. Normal people don’t have a soldering setup just sitting around ready to get hot 24/7, or a scope to diagnose a garage door RF transmitter at the drop of a hat. But these things seem to happen to me all the time. How do normal people survive? Maybe they all know someone with a scope?

I take it as my service to the world to be “that guy” for most of our friends and family, and I pretty much do it without complaint. “With great power” and all that. My wife is just about as gracious when she’s stuck debugging a parent’s Windows setup, so I’m not saying I’m the only saint in the world, either. Surely you have similar stories.

But last week it made me reflect on how good we’ve got it, and that does make me want to pay it forward a little bit. If you’re one of the people who can, try to help out those who can’t.