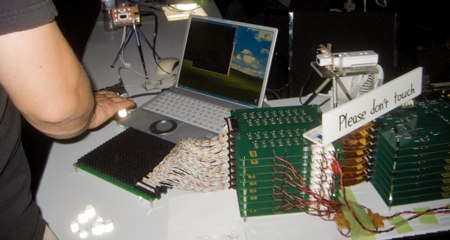

Fresh from this year’s SIGGRAPH is a very interesting take on the traditional XY table-based CNC machine from [Alec], [Ilan] and [Frédo] at MIT. They created a CNC router that is theoretically unlimited in size. Instead of a gantry, this router uses a human to move the tool over the work piece and only makes fine corrections to the tool path with the help of a camera and stepper motors.

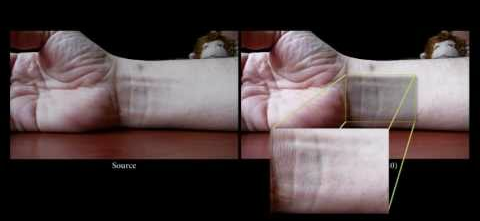

The entire device is built around a handheld router, with a base that contains a camera, electronics, stepper motors, and a very nice screen for displaying the current tool path. After a few strips of QR code-inspired tape are laid down on the work piece, the camera looks down at it and calculates the small changes the router has to make in order to cut the correct shape. All the user needs to do is guide the router along the outline of the part to be cut, with a margin of error of a half inch.
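For anyone wondering what that correction step might look like in code, here is a rough Python sketch. It is not the authors' algorithm, just one plausible way to do it: assume the camera has already given us the base position in inches, treat the tool path as a list of points joined by straight lines, find the nearest point on the path, and check whether the needed offset fits inside the half-inch margin. The names `correction_for`, `closest_point_on_segment`, and `MAX_CORRECTION_IN` are made up for illustration.

```python
import math

# Assumption: the steppers can shift the bit up to half an inch,
# matching the margin of error mentioned in the post.
MAX_CORRECTION_IN = 0.5


def closest_point_on_segment(p, a, b):
    """Return the point on segment a-b that is nearest to p."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return a  # degenerate segment, both ends coincide
    # Project p onto the line, then clamp to the segment's ends.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return (ax + t * dx, ay + t * dy)


def correction_for(base_pos, path):
    """Return (dx, dy, reachable) for a base position and a polyline path.

    dx, dy is the offset from the base to the nearest point on the path.
    reachable is False when that offset exceeds the assumed margin, i.e.
    the human has drifted too far for the steppers to compensate.
    """
    if len(path) < 2:
        raise ValueError("path needs at least two points")
    best, best_dist = None, math.inf
    for a, b in zip(path, path[1:]):
        q = closest_point_on_segment(base_pos, a, b)
        d = math.dist(base_pos, q)
        if d < best_dist:
            best, best_dist = q, d
    dx = best[0] - base_pos[0]
    dy = best[1] - base_pos[1]
    return dx, dy, best_dist <= MAX_CORRECTION_IN


if __name__ == "__main__":
    path = [(0.0, 0.0), (10.0, 0.0), (10.0, 5.0)]
    print(correction_for((3.0, 0.2), path))
    print(correction_for((3.0, 1.0), path))
```

A real build would also need the camera localization itself, plus some look-ahead so the bit keeps moving along the path instead of just snapping to the nearest point, but the basic "human does coarse, motors do fine" split comes down to this kind of offset calculation.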

You can read the SIGGRAPH paper here (or get the PDF here and not melt [Alec]’s server), or check out the demo video after the break.

Anyone want to build their own?