How often have you stood in the supermarket wondering about the inventory level in the fridge at home? [Mike] asked himself this question one time too many, so he decided to install a webcam in his fridge, along with a Raspberry Pi and a light sensor, to take a picture every time the fridge is opened and upload it to a webserver for easy remote access.
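The trigger logic can be sketched as rising-edge detection on the light sensor: a photo is taken only when the reading crosses from dark to bright, not on every bright sample. This is a minimal sketch, not [Mike]’s code. It assumes readings arrive as a list of raw integers and that a single hypothetical `threshold` separates a closed, dark fridge from an open, lit one.

```python
from typing import List


def door_open_events(readings: List[int], threshold: int) -> List[int]:
    """Return the sample indices where the fridge light switches on.

    A reading above `threshold` means the door is open (the fridge lamp is lit).
    Only the dark-to-bright transition counts, so holding the door open
    produces one event rather than one per sample.
    """
    events = []
    was_open = False  # assume the door starts closed
    for i, value in enumerate(readings):
        is_open = value > threshold
        if is_open and not was_open:
            events.append(i)  # rising edge: this is when a photo would be taken
        was_open = is_open
    return events


if __name__ == "__main__":
    samples = [5, 5, 300, 310, 4, 5, 280]
    print(door_open_events(samples, threshold=100))  # [2, 6]
```

In a real build each event would kick off the capture-and-upload step; separate on and off thresholds (hysteresis) would keep a flickering reading from firing twice.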


Small Experiments In DIY Home Security

[Dann Albright] writes about some small experiments he’s done in home security.

He starts with the simplest option: buying an off-the-shelf webcam and hooking it up to software built for the task. The first package he uses is the free, open-source iSpy, which adds basic features like motion detection, time stamping, logging, and an interface. He also explores some commercial options.
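How iSpy detects motion internally isn’t covered here, but the most basic form of the technique, frame differencing, is easy to sketch: compare each frame with the previous one and flag motion when enough pixels change brightness. This assumes grayscale frames stored as nested lists of 0 to 255 values; both thresholds are hypothetical defaults you would tune per camera.

```python
from typing import List

Frame = List[List[int]]  # grayscale pixel values, 0-255


def motion_detected(prev: Frame, curr: Frame,
                    pixel_delta: int = 25,
                    min_changed_fraction: float = 0.01) -> bool:
    """Basic frame differencing between two equally sized grayscale frames.

    A pixel counts as "changed" if its brightness moved by more than
    `pixel_delta`; motion is reported when the share of changed pixels
    exceeds `min_changed_fraction`.
    """
    total = 0
    changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_delta:
                changed += 1
    return total > 0 and changed / total > min_changed_fraction
```

The per-pixel `pixel_delta` ignores sensor noise, while the fraction threshold ignores a few stray pixels; practical tools typically add smoothing and region masks on top of this idea.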

Next, he delves a bit deeper by building a simple motion detector: when an Arduino detects motion with a PIR sensor, it gets a computer to text an alert. After that, the tutorial veers a little as he adds his WiFi light bulbs to the mix. Now he can send an email and change the color of the lights.
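The computer side of that setup can be sketched as a small loop that watches the Arduino’s serial output and forwards alerts, with a cooldown so a PIR sensor that keeps re-triggering doesn’t flood your phone. This is a hypothetical sketch: the `"MOTION"` message, the 60-second cooldown, and the injected `send_alert` function are assumptions, not details from the tutorial.

```python
from typing import Callable, Iterable, Tuple


def forward_alerts(lines: Iterable[Tuple[float, str]],
                   send_alert: Callable[[str], None],
                   cooldown_s: float = 60.0) -> int:
    """Forward PIR motion messages to an alert sender, rate-limited.

    `lines` yields (timestamp_seconds, text) pairs, as a serial reader might.
    Returns the number of alerts actually sent.
    """
    last_sent = None
    sent = 0
    for ts, text in lines:
        if text.strip() != "MOTION":
            continue  # ignore anything else the Arduino prints
        if last_sent is not None and ts - last_sent < cooldown_s:
            continue  # still inside the cooldown window; skip this trigger
        send_alert(f"Motion detected at t={ts:.0f}s")
        last_sent = ts
        sent += 1
    return sent
```

In practice `lines` could be fed from pyserial’s `Serial.readline()` and `send_alert` could wrap an email or SMS gateway; both are left abstract so the rate-limiting logic stands on its own.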

We suppose that, from a security standpoint, it would really freak a burglar out if all the lights turned red when they walked into a room. Either way, there’s definitely a fun weekend project in playing around with all these systems.

Reverse Engineering A WiFi Security Camera

The Internet of Things is slowly turning into the world’s largest crappy robot, with devices seemingly designed to be insecure, all waiting to be rooted and exploited by anyone with the right know-how. The latest Internet-enabled device to fall is a Motorola Focus 73 outdoor security camera. It’s quite a good camera, save for the software. [Alex Farrant] and [Neil Biggs] found the software was exceptionally terrible and would allow anyone to take control of this camera and install new firmware.

This camera connects to WiFi, offers full pan, tilt, and zoom controls, and feeds a live image and movement alerts to a server. Basically, it’s everything you need in a WiFi security camera. Setting up this camera is simple: just press the ‘pair’ button, and the camera switches to host mode and sets up an open wireless network. The accompanying Hubble mobile app scans the network for the camera and prompts the user to connect to it. Once the app connects to the camera, the user is asked to select a WiFi connection to the Internet from a list. The app then sends that network’s security key to the camera over the open network, unencrypted. By this point, just about anyone can see the potential for an exploit here, and since this camera is usually installed outdoors, within reach of anyone, evidence of idiocy abounds.

Once the camera is on the network, there are a few provisions for firmware upgrades. Firmware is usually downloaded from ‘private’ URLs and sent to the camera by a simple script that passes the URL directly into the shell as root. A few facepalms later, [Alex] and [Neil] had root access to the camera. The root password was ‘123456’.
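Passing a URL straight into a root shell is classic command injection: a `;` in the “URL” starts a second command. The general fix is to validate the input and never hand it to a shell at all. A sketch of that safer pattern, assuming a hypothetical updater that fetches firmware with `wget`; this illustrates the fix in general, not the camera’s actual script.

```python
import re
from typing import List
from urllib.parse import urlparse

# Hypothetical allow-list: letters, digits and a few URL punctuation marks.
# Shell metacharacters such as ; | ` $ and spaces are rejected outright.
_SAFE_URL_CHARS = re.compile(r"[A-Za-z0-9._~:/?=&%-]+")


def build_firmware_fetch(url: str, dest: str = "/tmp/firmware.bin") -> List[str]:
    """Return an argv list for downloading firmware, or raise ValueError.

    The list is meant for subprocess.run(argv) without shell=True, so the URL
    reaches wget as one literal argument and is never parsed by a shell.
    """
    if not _SAFE_URL_CHARS.fullmatch(url):
        raise ValueError("URL contains disallowed characters")
    parsed = urlparse(url)
    # Requiring an https scheme also stops inputs like "--output-document=..."
    # from being read by wget as an option.
    if parsed.scheme != "https" or not parsed.netloc:
        raise ValueError("only absolute https:// URLs are accepted")
    return ["wget", "-O", dest, url]
```

Running the result with `subprocess.run(argv, check=True)` executes wget directly; the vulnerable equivalent is `subprocess.run(f"wget {url}", shell=True)`. Verifying the downloaded firmware itself, for example with a signature check, is a separate and equally necessary step.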

While there are the beginnings of a good Internet-connected camera in this product, the design choices for the software are downright stupid. Still, if you’re looking for a network camera that you actually own, rather than one that belongs to a company with a few servers and a custom smartphone app, this would be near the top of the list. It’s a great starting point for some open source camera firmware.

Thanks [Mathieu] for the tip.

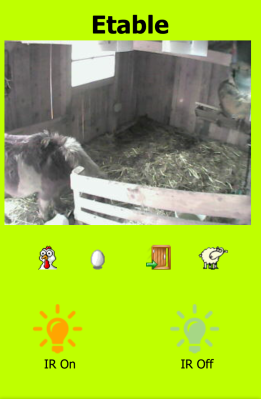

Small-farm Automation Keeps Livestock Safe And Happy

Life down on the farm isn’t easy, and a little technology can go a long way to making things easier for the farmer. It’ll be a while before any farmer can kick back on the beach and run his place from a smartphone, but that’s clearly the direction things are heading with this small farm automation project.

[Vince]’s livestock appears to consist of chickens and sheep at this point, and the fact that they share housing helped him to deploy some tech for both species. The chickens got an automated door that lets them out in the morning and shuts them in safely once they’ve returned to roost for the night – important protection against predators. The door is hoisted by a Somfy window-treatment motor, which seems a little on the overkill side to us; a thrift-store electric drill and a homebrew drum might have worked too. A Teensy with an RTC opens and closes the door according to sunrise and sunset times, and temperature and humidity sensors provide feedback on conditions inside the coop. The sheep benefit from a PTZ webcam to keep an eye on their mischief, and the whole thing is controlled by a custom web interface from [Vince]’s smartphone.
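The Teensy’s scheduling decision boils down to comparing the RTC time with the day’s sunrise and sunset. A minimal sketch in Python (the build itself runs on a Teensy), assuming the sunrise and sunset times for the date are already known, for example from a lookup table; the opening and closing delays are hypothetical values.

```python
from datetime import datetime, timedelta
from typing import Optional


def door_should_be_open(now: datetime, sunrise: datetime, sunset: datetime,
                        open_delay: timedelta = timedelta(minutes=15),
                        close_delay: timedelta = timedelta(minutes=30)) -> bool:
    """Decide the coop door state for the current RTC time.

    `sunrise` and `sunset` are for the same day as `now`. Closing a while
    after sunset gives the flock time to return to roost.
    """
    return sunrise + open_delay <= now < sunset + close_delay


def door_command(door_is_open: bool, should_be_open: bool) -> Optional[str]:
    """Return "open" or "close" only when the motor actually needs to move."""
    if should_be_open and not door_is_open:
        return "open"
    if door_is_open and not should_be_open:
        return "close"
    return None  # door already where it should be
```

Splitting the decision from the command means the loop can poll the clock as often as it likes while the motor only runs on the two transitions each day.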


There’s just something about automating chicken coop doors that seems to inspire hackers; check out this nice self-locking design. As for [Vince]’s farm, it looks like his system has a lot of room for expansion – food and water status would be a great next step. We’re looking forward to seeing where he goes from here.

Listen To The Rain, Raspberry Pi Style

There’s an old proverb algebra teachers often recite: you have to use what you know to find out what you don’t know. The same could be said about sensors. For example, some analog-to-digital converters use something computers are good at measuring (like time) to determine something they aren’t good at measuring (like voltage). So how do you detect rainfall? If you are [lowflyerUK], you use the microphone in your webcam and a Raspberry Pi.
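The ADC example can be made concrete with a single-slope (ramp) converter, one design that works this way: a counter runs while a steadily rising reference voltage catches up with the input, and the elapsed count is proportional to the input voltage. A toy simulation, using integer millivolts and a hypothetical 10 mV-per-tick ramp:

```python
def single_slope_adc(vin_mv: int, step_mv: int = 10, max_ticks: int = 1024) -> int:
    """Simulate a single-slope ADC and return the measured voltage in mV.

    Each clock tick raises the ramp by `step_mv`; counting stops when the
    ramp reaches the input (where a real comparator would flip) or when the
    counter hits full scale. Resolution equals the step size.
    """
    ramp_mv = 0
    ticks = 0
    while ramp_mv < vin_mv and ticks < max_ticks:
        ramp_mv += step_mv
        ticks += 1
    return ticks * step_mv  # elapsed time (ticks) converted back into voltage


if __name__ == "__main__":
    print(single_slope_adc(1000))  # 1000
    print(single_slope_adc(1005))  # 1010, rounded up to the next 10 mV step
```

The converter never measures voltage directly; it only counts clock ticks, which is exactly the “use what you know” trick.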

The idea was to reduce irrigation based on rainfall, so an exact measurement isn’t necessary. The Python code that analyzes the audio input is calibrated with three configuration parameters and attempts to remove wind noise. Even so, the microphone needs to be in a room that picks up a lot of rain noise, and ambient noise can throw the reading off.
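[lowflyerUK]’s three parameters aren’t spelled out here, so the sketch below uses its own hypothetical ones. The idea: wind rumble on a microphone is mostly low-frequency, while rain tends to produce a broadband hiss, so a first-order high-pass filter can suppress much of the wind before measuring loudness (RMS). A frame counts as rainy if its filtered RMS exceeds a threshold, and the batch counts as rain if enough frames are rainy.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class RainConfig:
    # Hypothetical calibration parameters, not [lowflyerUK]'s actual ones.
    highpass_alpha: float = 0.9      # closer to 1.0 lets more low frequencies through
    rms_threshold: float = 200.0     # filtered loudness that counts as rain
    min_rainy_fraction: float = 0.5  # share of frames that must be rainy


def highpass(samples: Sequence[float], alpha: float) -> List[float]:
    """First-order high-pass filter: y[n] = alpha * (y[n-1] + x[n] - x[n-1])."""
    if not samples:
        return []
    out = []
    prev_x = samples[0]
    prev_y = 0.0
    for x in samples:
        prev_y = alpha * (prev_y + x - prev_x)
        prev_x = x
        out.append(prev_y)
    return out


def rms(values: Sequence[float]) -> float:
    """Root-mean-square loudness of a block of samples."""
    return math.sqrt(sum(v * v for v in values) / len(values)) if values else 0.0


def is_raining(frames: Sequence[Sequence[float]],
               cfg: Optional[RainConfig] = None) -> bool:
    """Classify a batch of audio frames (lists of PCM samples) as rain or not."""
    cfg = cfg or RainConfig()
    if not frames:
        return False
    rainy = sum(1 for f in frames
                if rms(highpass(f, cfg.highpass_alpha)) > cfg.rms_threshold)
    return rainy / len(frames) >= cfg.min_rainy_fraction
```

Requiring a fraction of rainy frames, rather than a single loud one, is a cheap way to ignore a passing car or a door slam.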

The weather service is never going to adopt this system. Still, it is a great example of taking something you know and using it to get something you don’t know. If you want a more complete weather station, we have a few options for you.

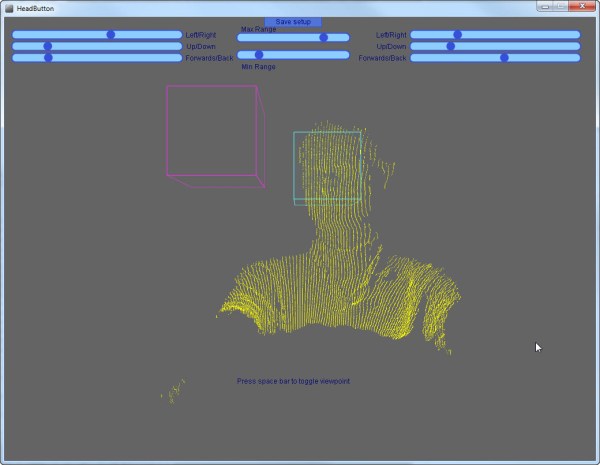

Head Gesture Tracking Helps Limited Mobility Students

There is a lot of helpful technology for people with mobility issues. Even help with something most of us wouldn’t think twice about, like turning on a lamp or controlling a computer, can make a world of difference to someone who can’t move around as easily. Luckily, [Matt] has been working on using webcams and depth cameras to make just that possible.

[Matt] found that webcams tend to be less obtrusive than depth cameras (like the Kinect) but are limited in their ability to distinguish individual users and, of course, lack the 3D capability. With either technology, though, the software implementation is similar: the camera detects head motion, and the software responds by emulating keystrokes. The depth cameras are more user-friendly, however, allowing users to move in whichever way feels comfortable for them.
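The step from head motion to keystrokes can be sketched as a dead zone around a calibrated resting position: small movements are ignored, and a deliberate move past the threshold produces a key. This is a hypothetical sketch, not [Matt]’s software. It assumes a face tracker already reports the head’s centre in pixels; the key names and the 40-pixel dead zone are illustrative, and the OS-specific keystroke emulation is left out.

```python
from typing import Optional, Tuple


def head_to_key(position: Tuple[float, float], neutral: Tuple[float, float],
                dead_zone: float = 40.0) -> Optional[str]:
    """Map a head position to an arrow key, or None inside the dead zone.

    Image coordinates are assumed: x grows to the right, y grows downward.
    The larger of the two displacements wins, so diagonal moves pick one key.
    """
    dx = position[0] - neutral[0]
    dy = position[1] - neutral[1]
    if max(abs(dx), abs(dy)) <= dead_zone:
        return None  # resting position or small involuntary movement
    if abs(dx) >= abs(dy):
        return "RIGHT" if dx > 0 else "LEFT"
    return "DOWN" if dy > 0 else "UP"
```

Making `neutral` and `dead_zone` per-user settings fits the goal of letting each person move however is comfortable; whether “RIGHT” matches the user’s right also depends on whether the camera image is mirrored.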

This isn’t the first time something like a Kinect has been used to track motion, but for [Matt] and his work at Beaumont College it has been an important area of ongoing research. It’s especially helpful since the campus has many things (like lamps) on networked switches, so this software can help people interact much more easily with the physical world. This project could be very useful to anyone curious about tracking motion, even if they’re not using it for mobility reasons.

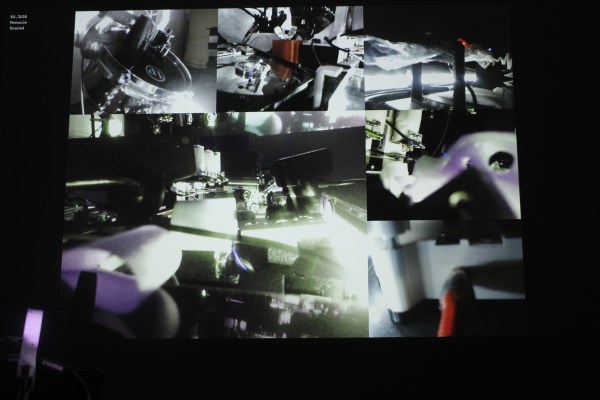

A Non-Infinite But Arbitrarily Large Number Of Video Feeds

It’s pretty common to grab a USB webcam when you need something monitored. They’re quick and easy to set up, plug-and-play on almost every modern OS, and cheap. But what happens when you need to monitor more than a few things? Often this means lots of cameras plus expensive additional hardware to run the powerful software needed, but [moritz simon geist] and his group’s Madcam software can now do the same thing inexpensively and simply.

Many approaches were considered before the group settled on using PCI to handle the video feeds. Using USB alone would obviously create a bottleneck, and they found that Ethernet had very high latency. They also tried mixing the video feeds from Raspberry Pis, without much success. Their computer is a fairly standard AMD machine with 4 GB of RAM running Xubuntu, so as long as you have the PCI slots you need, there’s pretty much no limit to what you could do with this software.
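Why USB runs out of room can be shown with back-of-the-envelope arithmetic. Assuming uncompressed 640×480 YUYV video (2 bytes per pixel) at 30 fps, an assumption since the cameras’ formats aren’t given here, each feed needs about 147 Mbit/s, while USB 2.0 offers a nominal 480 Mbit/s shared by every device on the same host controller:

```python
def stream_mbit_per_s(width: int, height: int, bytes_per_pixel: int, fps: int) -> float:
    """Raw (uncompressed) video bandwidth in megabits per second."""
    return width * height * bytes_per_pixel * fps * 8 / 1e6


def max_streams(bus_mbit_per_s: float, per_stream_mbit: float) -> int:
    """How many streams fit on a link, ignoring protocol overhead."""
    return int(bus_mbit_per_s // per_stream_mbit)


if __name__ == "__main__":
    per_cam = stream_mbit_per_s(640, 480, 2, 30)
    print(f"{per_cam:.1f} Mbit/s per camera")  # 147.5 Mbit/s per camera
    print(max_streams(480, per_cam), "cameras per USB 2.0 bus at best")  # 3
```

Real USB 2.0 throughput is noticeably below the nominal figure, so in practice even three such cameras on one controller is optimistic; compressed formats like MJPEG change the numbers but not the basic limit.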

At first we scoffed at the price tag of around $500 (including the computer that runs the software), but apparently the sky’s the limit for how much you could spend on a commercial system, so this is actually quite the reduction in cost. Odds are you have a desktop computer anyway, and once you get the software from their GitHub repository you’re pretty much on your way. So far the creators have tested the software with 10 cameras, but it could be expanded to handle more. It would be even cooler if you could somehow incorporate video feeds from radio sources!
