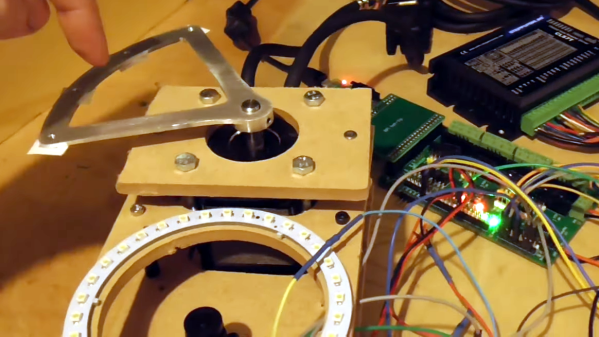

[Jenny List] has been reverse-engineering and redesigning the Single8 home movie film cartridge for the modern age, to breathe life into abandoned cine cameras.

One of the frustrating things about working with technologies that have been with us for a while is the proliferation of standards and the way that once-popular formats can become obsolete over time. This can leave equipment effectively unusable and unloved.

There is perhaps no greater example of this than in film photography – an industry and hobby that has been with us for over 100 years and that has left many cameras orphaned once the film format they relied on was no longer available (Disc film, anyone?).

Thankfully, Hackaday’s own [Jenny List] has been working hard to bring one particular cine film format back from the dead and has just released the fourth instalment in a video series documenting the process of resurrecting the Single8 format cartridge.