A short while ago, Tested posted a video all about hands-on time with virtual reality (VR) headset prototypes from Meta (which is to say, Facebook) and there are some genuinely interesting bits in there. The video itself is over an hour long, but if you’re primarily interested in the technical angles and why they matter for VR, read on because we’ll highlight each of the main points of research.

As absurd as it may seem to many of us to have a social network spearheading meaningful VR development, one can’t say they aren’t taking it seriously. It’s also refreshing to see each of the prototypes get showcased by a researcher who is clearly thrilled to talk about their work. The big dream is to figure out what it takes to pass the “visual Turing test”, which means delivering visuals that are on par with physical reality. Some of the critical elements may come as a bit of a surprise, because they go in directions beyond resolution and field-of-view.

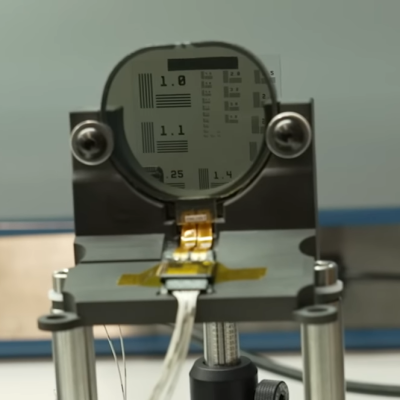

At 9:35 in the video, [Douglas Lanman] shows [Norman Chan] how important variable focus is to delivering a good visual experience, followed by a walk-through of all the different prototypes they have used to get that done. Currently, VR headsets display visuals at only one focal plane, which means, among other things, that a virtual object brought close to one’s eyes gets blurry. (Incidentally, older people don’t find that part very strange because it is a common side effect of aging.)

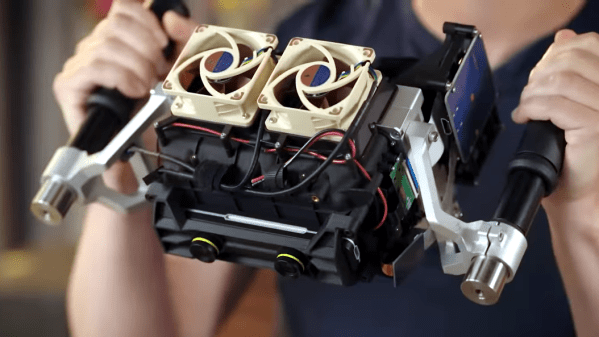

The solution is to change focus based on where the user is looking, and [Douglas] shows off all the different ways this has been explored: from motors and actuators that mechanically change the focal length of the display, to a solid-state solution composed of stacked elements that can selectively converge or diverge light based on its polarization. [Doug]’s pride and excitement are palpable, and he really goes into detail on everything.
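To get a feel for why stacking elements is attractive, here is a minimal sketch of the arithmetic. It assumes each element in the stack either adds or subtracts a fixed optical power depending on the polarization it is handed, so that N elements yield up to 2^N focus settings. The element powers, the function names, and the target value are all hypothetical illustrations and are not taken from the video.

```python
from itertools import product

def focal_states(element_powers):
    """Enumerate the distinct total optical powers (in diopters) of a stack.

    Assumption: each element contributes +p or -p, depending on the
    polarization of the light entering it.
    """
    totals = {
        round(sum(sign * p for sign, p in zip(signs, element_powers)), 6)
        for signs in product((-1, 1), repeat=len(element_powers))
    }
    return sorted(totals)

def nearest_state(states, target_diopters):
    """Pick the available state closest to the requested optical power."""
    return min(states, key=lambda d: abs(d - target_diopters))

# Hypothetical element powers; each one doubles the previous.
powers = [0.25, 0.5, 1.0]
states = focal_states(powers)
print(len(states), states)

# Hypothetical target derived from where the user is looking.
print(nearest_state(states, 1.1))
```

With powers that double from one element to the next, the resulting states are evenly spaced, which is one plausible reason to favor a small stack of switchable parts over a moving display.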

At the 30:21 mark, [Yang Zhao] explains the importance of higher resolution displays, and talks about lenses and optics as well. Interestingly, the ultra-clear text rendering made possible by a high-resolution display isn’t what ended up capturing [Norman]’s attention the most. When high resolution was combined with variable focus, it was the textures on cushions, the vividness of wall art, and the patterns on walls that [Norman] found he just couldn’t stop exploring.


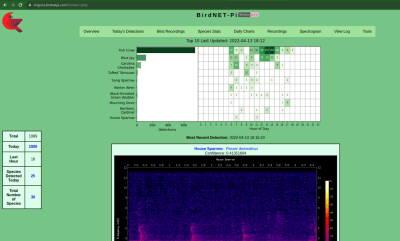

About that Raspberry Pi version! There’s a sister project called
