A copy stand is a tool for capturing images of photos, artwork, books, and similar items. It holds a camera perpendicular to a large, flat surface on which the subject rests.

Copy stands are handy, but there’s no need to spend a lot on one. [BlandPasta]’s DIY copy stand, based on a cheap IKEA LACK table, makes for an economical afternoon project with the help of simple hardware and a few 3D printed parts.

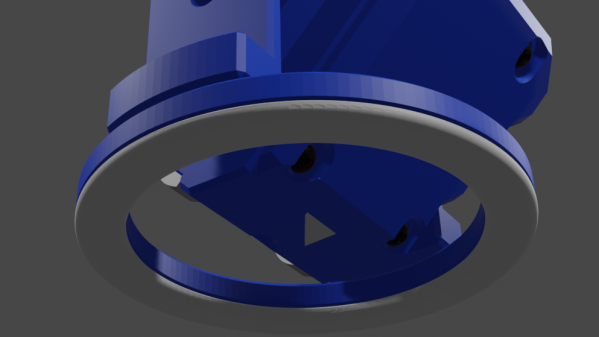

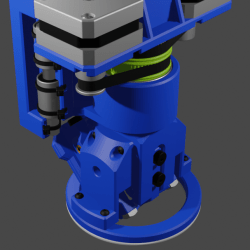

The main structure comes from a mixture of parts from two LACK tables: one small and one normal-sized. A tabletop is used as the bed, and the square legs make up the structural parts with the help of some printed pieces. A threaded rod combined with some captive hardware provides a way to adjust the camera up and down with a crank, while one can manually slide the horizontal camera mount as needed to frame the subject appropriately.
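For a rough sense of how the crank translates into camera height, the relationship is just turns multiplied by thread lead. The sketch below is illustrative only: the write-up does not state the rod size, so the 1.25 mm pitch (the standard coarse pitch for an M8 rod) and the function names are assumptions, not details of [BlandPasta]’s design.

```python
# Back-of-the-envelope lead screw math for a crank-driven camera carriage.
# ASSUMPTION: a single-start M8 threaded rod with 1.25 mm coarse pitch.
# The actual rod used in the build is not specified in the article.

def carriage_travel_mm(crank_turns: float, pitch_mm: float = 1.25, starts: int = 1) -> float:
    """Vertical travel produced by a number of full crank turns."""
    lead_mm = pitch_mm * starts  # distance moved per full revolution
    return crank_turns * lead_mm


def turns_for_travel(travel_mm: float, pitch_mm: float = 1.25, starts: int = 1) -> float:
    """Crank turns needed to raise or lower the camera by travel_mm."""
    lead_mm = pitch_mm * starts
    return travel_mm / lead_mm


if __name__ == "__main__":
    print(carriage_travel_mm(10))   # travel after ten turns
    print(turns_for_travel(100))    # turns needed for 100 mm of travel
```

With a fine pitch like this, the crank is better suited to small height corrections than to large moves, which take many turns.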

This is a clever remix of IKEA parts, and the somewhat matte white finish of the LACK complements photography well. Adding some DIY LED lighting is about all it takes to get a perfectly serviceable copy stand that won’t break the bank.