[BioBootloader] combined Python and a hefty dose of of AI for a fascinating proof of concept: self-healing Python scripts. He shows things working in a video, embedded below the break, but we’ll also describe what happens right here.

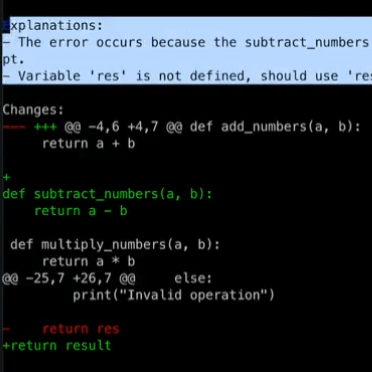

The demo Python script is a simple calculator that works from the command line, and [BioBootloader] introduces a few bugs to it. He misspells a variable used as a return value, and deletes the subtract_numbers(a, b) function entirely. Running this script by itself simply crashes, but using Wolverine on it has a very different outcome.

Wolverine is a wrapper that runs the buggy script, captures any error messages, then sends those errors to GPT-4 to ask it what it thinks went wrong with the code. In the demo, GPT-4 correctly identifies the two bugs (even though only one of them directly led to the crash) but that’s not all! Wolverine actually applies the proposed changes to the buggy script, and re-runs it. This time around there is still an error… because GPT-4’s previous changes included an out of scope return statement. No problem, because Wolverine once again consults with GPT-4, creates and formats a change, applies it, and re-runs the modified script. This time the script runs successfully and Wolverine’s work is done.

LLMs (Large Language Models) like GPT-4 are “programmed” in natural language, and these instructions are referred to as prompts. A large chunk of what Wolverine does is thanks to a carefully-written prompt, and you can read it here to gain some insight into the process. Don’t forget to watch the video demonstration just below if you want to see it all in action.

While AI coding capabilities definitely have their limitations, some of the questions it raises are becoming more urgent. Heck, consider that GPT-4 is barely even four weeks old at this writing.

Continue reading “Wolverine Gives Your Python Scripts The Ability To Self-Heal”