In 2016, a Tesla Model S T-boned a tractor trailer at full speed, killing its lone passenger instantly. It was running in Autosteer mode at the time, and neither the driver nor the car’s automatic braking system reacted before the crash. The US National Highway Traffic Safety Administration (NHTSA) investigated the incident, requested data from Tesla related to Autosteer safety, and eventually concluded that there wasn’t a safety-related defect in the vehicle’s design (PDF report).

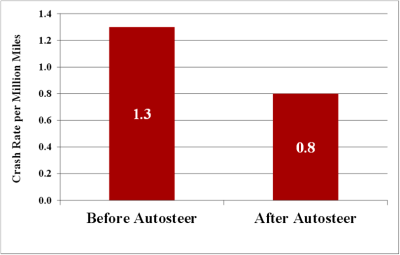

But the NHTSA report went a step further. Based on the data that Tesla provided them, they noted that since the addition of Autosteer to Tesla’s confusingly named “Autopilot” suite of functions, the rate of crashes severe enough to deploy airbags declined by 40%. That’s a fantastic result.

Because the result was so spectacular, a private company with a history of investigating automotive safety wanted to have a look at the data. The NHTSA refused because Tesla claimed that the data was a trade secret, so Quality Control Systems (QCS) filed a Freedom of Information Act lawsuit to get the data on which the report was based. Nearly two years later, QCS won.

Looking into the data, QCS concluded that crashes may have actually increased by as much as 60% on the addition of Autosteer, or maybe not at all. Anyway, the data provided to the NHTSA was insufficient and had bizarre omissions, and the NHTSA has since retracted its safety claim. How did this NHTSA one-eighty happen? Can we learn anything from the report? And how does this all line up with Tesla’s claim of better-than-average safety? We’ll dig into the numbers below.

But if nothing else, Tesla’s dramatic reversal of fortune should highlight the need for transparency in the safety numbers of self-driving and other advanced car technologies, something we’ve been demanding for years now.
