Neural networks are all the rage right now with increasing numbers of hackers, students, researchers, and businesses getting involved. The last resurgence was in the 80s and 90s, when there was little or no World Wide Web and few neural network tools. The current resurgence started around 2006. From a hacker’s perspective, what tools and other resources were available back then, what’s available now, and what should we expect for the future? For myself, a GPU on the Raspberry Pi would be nice.

Software Hacks (1034 Articles)

Half Baked IoT Stove Could Be Used As A Remote Controlled Arson Device

[Pen Test Partners] have found some really scary vulnerabilities in AGA range cookers. The cookers are controlled over SMS: a mobile app sends an unauthenticated text message to the AGA to give it commands, for instance to preheat the oven. You can also just tell your AGA to turn everything on at once.

The problem is with the web interface; it allows an attacker to check if a user’s cell phone is already registered, allowing for a slow but effective enumeration attack. Once the attacker finds a registered device, all they need to do is send an SMS: the cooker does not authenticate messages, and the SIM card used to send them is not validated at registration either.
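To make "unauthenticated" concrete, here is a minimal sketch of what checking a command could look like, assuming the app and the cooker share a secret key. This is our own illustration and not anything AGA or [Pen Test Partners] describe; the function names, the command strings, and the counter used to reject replays are all hypothetical.

```python
import hashlib
import hmac


def sign_command(secret_key: bytes, command: str, counter: int) -> str:
    """Return a hex tag binding the command to a message counter (hypothetical scheme)."""
    message = f"{counter}:{command}".encode("utf-8")
    return hmac.new(secret_key, message, hashlib.sha256).hexdigest()


def verify_command(secret_key: bytes, command: str, counter: int,
                   tag: str, last_counter: int) -> bool:
    """Accept a command only if it is fresh and its tag matches."""
    if counter <= last_counter:
        return False  # an old message is being replayed
    expected = sign_command(secret_key, command, counter)
    # compare_digest avoids leaking how many characters matched
    return hmac.compare_digest(expected, tag)


# Usage: the sender attaches the tag, the receiver checks it before acting.
key = b"shared-secret-for-illustration-only"
tag = sign_command(key, "preheat_oven", counter=1)
accepted = verify_command(key, "preheat_oven", 1, tag, last_counter=0)
```

Without a check of this kind, anyone who knows the phone number can issue commands, which is the situation described above.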

This is quite disturbing. What if someone left a tea towel or some other flammable material on the hob before leaving for work, only to come back to a pile of ashes? This is a six-gazillion BTU stove and oven, after all. It seems that the more connected we are in this digital age, the more vulnerable to attack we become, and companies seem too busy pushing their products out the door to do simple security checks.

Before disclosing the vulnerability, [Pen Test Partners] tried to contact AGA through Twitter and ended up being blocked. They phoned around trying to reach someone who even knew what IoT or security meant. This took some time, but they finally managed to get through to someone in technical support. Hopefully AGA will roll out some updates soon. The company’s reluctance to do something about this security issue does highlight how sometimes disclosure may not be enough.

[Via Pen Test Partners]

Introduction To TensorFlow

I had great fun writing neural network software in the 90s, and I have been anxious to try creating some using TensorFlow.

Google’s machine intelligence framework is the new hotness right now. And when TensorFlow became installable on the Raspberry Pi, working with it became very easy to do. In a short time I made a neural network that counts in binary. So I thought I’d pass on what I’ve learned so far. Hopefully this makes it easier for anyone else who wants to try it, or for anyone who just wants some insight into neural networks.

Learn Neural Network And Evolution Theory Fast

[carykh] has a really interesting video series that can give a beginner or a pro great insight into how neural networks operate and, at the same time, how evolution works. You may remember his work creating a Bach-audio-producing neural network, and this series again shows his talent for explaining a complex topic so that anyone can understand it.

He starts with 1000 “creatures”. Each has an internal clock which acts a bit like a heartbeat but does not change speed throughout the creature’s life. Creatures also have nodes which cause friction with the ground but don’t collide with each other. Connecting the nodes are muscles which can stretch or contract and have different strengths.

At the beginning of the simulation the creatures are randomly generated along with their random traits. Some have longer/shorter muscles, while node and muscle positions are also randomly selected. Once this is set up they have one job: move from left to right as far as possible in 15 seconds.

Each creature has a chance to perform, and 500 are then selected to evolve based on how far they managed to travel to the right of the starting position. The better a creature performs, the higher the probability it will survive, although some of the high-performing creatures randomly die and some lower performers randomly survive. The 500 surviving creatures reproduce asexually, creating another 500 to replace those that were killed off.

The simulation is run again and again until one or two types of species start to dominate. When this happens, evolution slows down as the gene pool begins to get very similar. Occasionally a breakthrough will occur, either creating a new species or improving the current best species, leading to a bit of a competition for the top spot.
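The select-and-reproduce loop described above can be sketched in a few lines of Python. This is our own simplified illustration, not [carykh]’s code: a creature is reduced to a list of numbers, `fitness` is a hypothetical stand-in for the distance travelled in 15 seconds, and the rank-pairing survival rule and Gaussian mutation are assumptions chosen to match the description (better performers usually survive, but not always).

```python
import random


def fitness(genome):
    # Hypothetical stand-in for "distance travelled to the right in 15 seconds".
    return sum(genome)


def select_survivors(population, rng):
    """Keep half the population, favouring better performers (assumed rule)."""
    ranked = sorted(population, key=fitness, reverse=True)
    n = len(ranked)
    survivors = []
    for i in range(n // 2):
        better, worse = ranked[i], ranked[n - 1 - i]
        # The top-ranked creature always survives; the odds fall toward
        # a coin flip for the pair in the middle of the ranking.
        p_better = 1.0 - i / n
        survivors.append(better if rng.random() < p_better else worse)
    return survivors


def mutate(genome, rng, scale=0.1):
    # Asexual reproduction: a copy of the parent with small random changes.
    return [gene + rng.gauss(0.0, scale) for gene in genome]


def next_generation(population, rng):
    survivors = select_survivors(population, rng)
    offspring = [mutate(parent, rng) for parent in survivors]
    return survivors + offspring


def evolve(pop_size=1000, genome_len=8, generations=20, seed=0):
    rng = random.Random(seed)
    population = [[rng.uniform(-1.0, 1.0) for _ in range(genome_len)]
                  for _ in range(pop_size)]
    best_per_generation = []
    for _ in range(generations):
        best_per_generation.append(max(fitness(g) for g in population))
        population = next_generation(population, rng)
    return population, best_per_generation
```

Because the best creature is always carried over unchanged in this sketch, the best score per generation never goes down, while the random survival of weaker creatures keeps some variety in the gene pool.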

We think the four short YouTube videos (around 5 minutes each) that kick off the series demonstrate neural networks in a very visual way and make them really easy to understand. Whether you don’t know much about neural networks or you do and want to see something really cool, these are worthy of your time.


Chess AI, Old School

People have been interested in chess-playing computers since before there were any chess-playing computers. In a 1950 paper, [Claude Shannon] defined two major chess-playing strategies. Apparently, practical chess programs still use the techniques he outlined. If you’ve ever wondered how to make a computer play chess, [FreeCodeCamp] has an interesting post that walks you through building a chess engine step-by-step.

The code is in JavaScript, but the approach struck us as old school. However, it is interesting to watch the evolution of code as you go from random moves, to slightly smarter strategy, to deeper searching. Because it is in JavaScript, you can follow along in your browser and find out when the program gets smart enough to beat you. The final version is even on GitHub.
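To give a feel for what "deeper searching" means, here is a minimal sketch of depth-limited minimax, a classic way to look several moves ahead. It is in Python rather than the post’s JavaScript, and to stay self-contained it plays a toy game instead of chess: players alternately take 1 to 3 stones from a pile, and whoever takes the last stone wins. The game and the function names are our assumptions, not the post’s code.

```python
def legal_moves(stones):
    # A player may take 1, 2, or 3 stones, but no more than remain.
    return [n for n in (1, 2, 3) if n <= stones]


def minimax(stones, depth, maximizing):
    """Score a position from the maximizing player's point of view."""
    if stones == 0:
        # The player who just moved took the last stone and won.
        return -1 if maximizing else 1
    if depth == 0:
        return 0  # search horizon reached: outcome unknown, call it even
    scores = [minimax(stones - move, depth - 1, not maximizing)
              for move in legal_moves(stones)]
    return max(scores) if maximizing else min(scores)


def best_move(stones, depth):
    # Try each move, then let the opponent (the minimizer) reply.
    return max(legal_moves(stones),
               key=lambda move: minimax(stones - move, depth - 1, False))


print(best_move(7, depth=10))  # prints 3, leaving the opponent a losing pile of 4
```

With `depth=1` the search cannot see any outcome and just picks the first legal move, which is the same kind of jump in playing strength you see when a chess engine searches deeper.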

Ten Minute TensorFlow Speech Recognition

Like a lot of people, we’ve been pretty interested in TensorFlow, the Google neural network software. If you want to experiment with using it for speech recognition, you’ll want to check out [Silicon Valley Data Science’s] GitHub repository which promises you a fast setup for a speech recognition demo. It even covers which items you need to install if you are using a CUDA GPU to accelerate processing or if you aren’t.

Another interesting thing is the use of TensorBoard to visualize the resulting neural network. This tool offers up a page in your browser that lets you visualize what’s really going on inside the neural network. There’s also speech data in the repository, so it is practically a one-stop shop for getting started. If you haven’t seen TensorBoard in action, you might enjoy the video from Google, below.

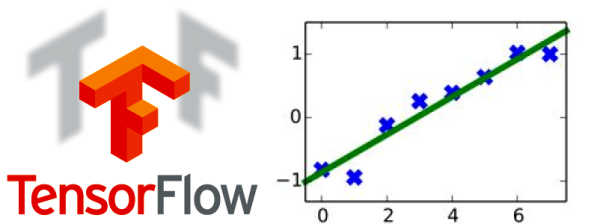

Google Machine Learning Made Simple(r)

If you’ve looked at machine learning, you may have noticed that a lot of the examples are interesting but hard to follow. That’s why [Jostmey] created Naked Tensor, a bare-minimum example of using TensorFlow. The example is simple, just doing some straight line fits on some data points. One example shows how it is done in series, one in parallel, and another for an 8-million point dataset. All the code is in Python.
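For readers who want to see the idea before installing anything, here is a minimal sketch of a straight line fit by gradient descent. It is written in plain Python rather than TensorFlow, so it is not the Naked Tensor code; the function name, learning rate, step count, and data points are our own assumptions.

```python
def fit_line(xs, ys, learning_rate=0.01, steps=5000):
    """Fit y = m * x + b by gradient descent on the mean squared error."""
    m, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Error of the current line at every data point.
        errors = [m * x + b - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to m and b.
        grad_m = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        m -= learning_rate * grad_m
        b -= learning_rate * grad_b
    return m, b


# Made-up points lying exactly on y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)  # close to 2 and 1
```

A library like TensorFlow works out the gradients for you and can run the per-point arithmetic in parallel, which matters once the dataset has millions of points.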

If you haven’t run into it yet, TensorFlow is an open source library from Google. To quote from its website:

TensorFlow is an open source software library for numerical computation using data flow graphs. Nodes in the graph represent mathematical operations, while the graph edges represent the multidimensional data arrays (tensors) communicated between them. The flexible architecture allows you to deploy computation to one or more CPUs or GPUs in a desktop, server, or mobile device with a single API. TensorFlow was originally developed by researchers and engineers working on the Google Brain Team within Google’s Machine Intelligence research organization for the purposes of conducting machine learning and deep neural networks research, but the system is general enough to be applicable in a wide variety of other domains as well.
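The "data flow graph" idea in that quote can be illustrated with a toy sketch. This is plain Python of our own invention and not TensorFlow’s API: each hypothetical `Node` is an operation, and its inputs are the edges that carry values to it. Real tensors are multidimensional arrays; single numbers are used here to keep the example short.

```python
import operator


class Node:
    """One node in a tiny data flow graph (illustrative only)."""

    def __init__(self, op=None, inputs=(), value=None):
        self.op = op          # function to apply, or None for a constant
        self.inputs = inputs  # upstream nodes whose outputs feed this one
        self.value = value    # stored value, used only by constants

    def evaluate(self):
        if self.op is None:
            return self.value
        # Pull values along the incoming edges, then apply the operation.
        return self.op(*(node.evaluate() for node in self.inputs))


def constant(value):
    return Node(value=value)


def add(a, b):
    return Node(operator.add, (a, b))


def mul(a, b):
    return Node(operator.mul, (a, b))


# Build the graph for y = m * x + b first, then run it.
x, m, b = constant(3.0), constant(2.0), constant(1.0)
y = add(mul(m, x), b)
print(y.evaluate())  # prints 7.0
```

Nothing is computed while the graph is being built; the work happens only when `evaluate` is called, and that separation is what lets a framework decide where and how to run each operation.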