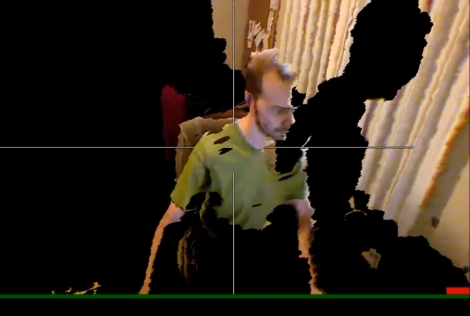

Those of us who remember when you could actually go to a mall and play on a VR game machine tend to remember it fondly. So what happened? Computing horsepower has grown enormously and our graphics nowadays are simply stunning, yet there’s been no major VR revival. Yeah, those helmets were huge and gave you a headache, but it was worth it. Using the 3D positioning abilities of the latest gaming crazes, the Wiimote and the Kinect, [Nao_u] is finally taking VR where we all knew it should have gone (Google translated). Well, maybe we wouldn’t have pictured quite so many creepy anime faces flying around squirting ink, but the basics are there. He has built a VR system that uses the Wiimote for hand position, a Vuzix display for head positioning, and the Kinect for body tracking. Even with the creepy flying heads, I want to play it, especially after seeing him physically duck behind boxes in the video after the break. Long live VR!
