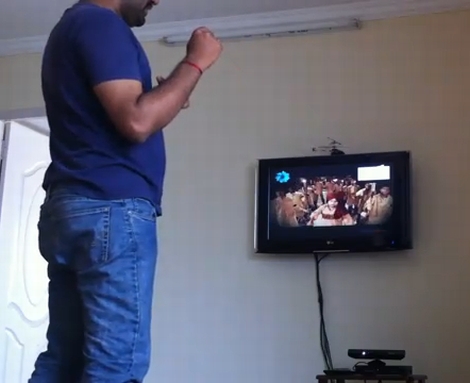

[Harishankar] has posted a video on his blog demonstrating control of devices over IR using the Microsoft Kinect sensor. While controlling devices with a Kinect is nothing new, he is doing something a little different from what you have seen before. The Kinect connects directly to his Mac Mini, which tracks his movements using OpenNI. These movements are then compared against a list of predefined gestures, each mapped to a specific IR function for controlling his home theater.
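The post doesn't show how the gesture matching works, so here is a minimal Python sketch of the general idea. It is not [Harishankar]'s code. A hand trajectory (a list of (x, y) points, such as a skeleton tracker might report) is reduced to its net displacement and classified as one of four gestures. The matching IR command label is then handed to a `send_ir` callback. The gesture names, command labels, the 300-unit travel threshold, the "y increases upward" convention and `send_ir` itself are all hypothetical. In a real setup the callback would wrap whatever drives the IR transmitter.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical mapping from gesture names to IR command labels.
# Neither the names nor the commands come from the original project.
GESTURE_TO_IR: Dict[str, str] = {
    "swipe_left": "TV_CHANNEL_DOWN",
    "swipe_right": "TV_CHANNEL_UP",
    "raise_hand": "AMP_VOLUME_UP",
    "lower_hand": "AMP_VOLUME_DOWN",
}

# Assumed minimum hand travel, in the same units as the tracked coordinates (e.g. mm).
MIN_TRAVEL = 300.0


def classify(track: List[Tuple[float, float]]) -> Optional[str]:
    """Classify a hand trajectory of (x, y) points; y is assumed to increase upward."""
    if len(track) < 2:
        return None
    dx = track[-1][0] - track[0][0]
    dy = track[-1][1] - track[0][1]
    # Pick the dominant axis of motion, then require enough travel along it.
    if abs(dx) >= abs(dy):
        if abs(dx) < MIN_TRAVEL:
            return None
        return "swipe_right" if dx > 0 else "swipe_left"
    if abs(dy) < MIN_TRAVEL:
        return None
    return "raise_hand" if dy > 0 else "lower_hand"


def dispatch(track: List[Tuple[float, float]],
             send_ir: Callable[[str], None]) -> Optional[str]:
    """Recognize a gesture and pass its IR command label to send_ir (hypothetical sender)."""
    gesture = classify(track)
    if gesture is None:
        return None  # Unrecognized movement: send nothing.
    command = GESTURE_TO_IR[gesture]
    send_ir(command)
    return command


if __name__ == "__main__":
    sent: List[str] = []
    # A mostly horizontal, rightward hand movement of 400 units.
    dispatch([(0.0, 0.0), (150.0, 10.0), (400.0, 20.0)], sent.append)
    print(sent)  # prints ['TV_CHANNEL_UP']
```

Keeping the gesture table separate from the sender means remapping a gesture, or swapping the IR hardware, only touches one piece.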

Once a gesture has been recognized, the Mac relays the corresponding command through a USB-UIRT to the various home theater components. The blog post is light on details, but [Harishankar] says he will gladly send his code to anyone who requests it by email.

Thanks to [Peter] for the tip.