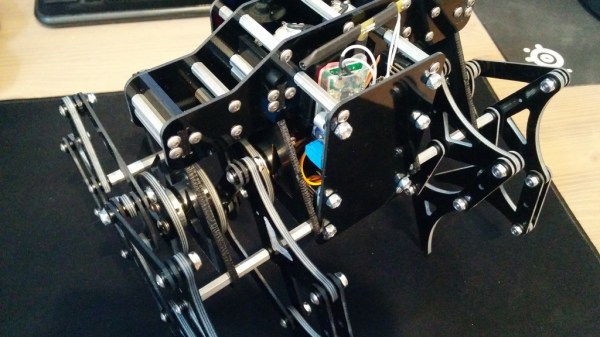

Theo Jansen’s Strandbeest design is a favorite, and for good reason: the gliding gait is mesmerizing, and this RC version by [tosjduenfs] is wonderful to behold. The project first appeared on Thingiverse back in 2015 and was quietly updated last year with a zip file containing the full assembly details.

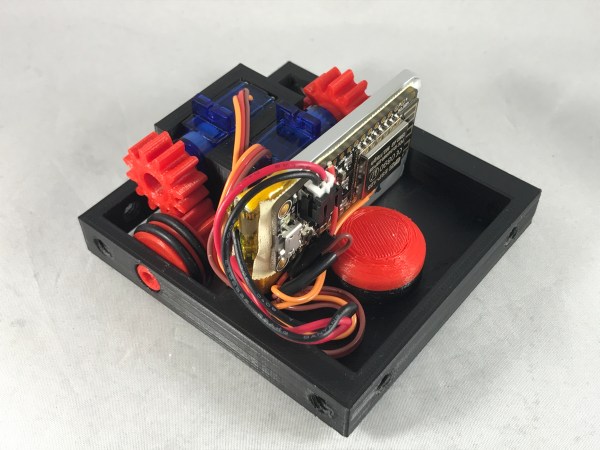

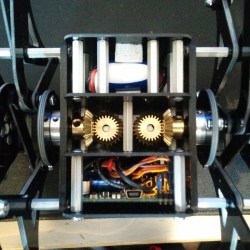

All Strandbeest projects, especially steerable ones, are notable because building one is never a matter of simply scaling parts up or down. For one thing, the classic Strandbeest design doesn’t provide any means of steering. Also, while motorizing the system is simple in concept, it’s less so in practice; there’s no obvious or convenient spot to actually mount a motor in a Strandbeest. In this project, bevel gears allow the motors to be mounted vertically in a central area, and the left and right sides are driven independently, like a tank. A motor driver that accepts RC signals allows the use of an off-the-shelf RC transmitter and receiver to control the unit. There is a wonderful video of the machine zipping around smoothly, embedded below.
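
For anyone curious how tank-style steering works from a pair of RC channels, here is a minimal Python sketch of the usual mixing arithmetic. It is not taken from this project, where the motor driver (or the transmitter) handles the job in hardware. The function names `pulse_to_unit` and `tank_mix` are hypothetical, and the 1000 to 2000 µs pulse range with a 1500 µs center is the common hobby RC convention, assumed here rather than confirmed for this build.

```python
def pulse_to_unit(pulse_us, center=1500, span=500, deadband=20):
    """Map an RC pulse width in microseconds to the range -1.0..1.0.

    The center, span, and deadband values are assumed hobby-RC defaults,
    not figures from the Strandbeest project.
    """
    offset = pulse_us - center
    # Ignore small stick jitter around the center position.
    if abs(offset) <= deadband:
        return 0.0
    # Clamp so out-of-range pulses never exceed full speed.
    return max(-1.0, min(1.0, offset / span))


def tank_mix(throttle, steering):
    """Combine throttle and steering (-1..1 each) into (left, right) speeds."""
    left = throttle + steering
    right = throttle - steering
    # Scale both sides down together so neither exceeds full speed.
    scale = max(1.0, abs(left), abs(right))
    return left / scale, right / scale


if __name__ == "__main__":
    throttle = pulse_to_unit(1750)   # stick halfway forward
    steering = pulse_to_unit(1500)   # stick centered
    print(tank_mix(throttle, steering))
```

With the steering stick centered, both sides get the same speed and the machine walks straight; with throttle at zero and steering applied, the two sides run in opposite directions and it pivots in place.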