Debugging might be the one thing that separates “modern” programming from “classic” programming. If you are on an old enough computer — or maybe one that has limited tools like some microcontrollers — debugging is largely an intellectual exercise. Try a program, observe its behavior, and then try again. You can liberally sprinkle print statements around if you have an output device or turn on LEDs or digital outputs if you don’t. But with a debugger, you can get a bird’s-eye view of your program’s data and execution flow.

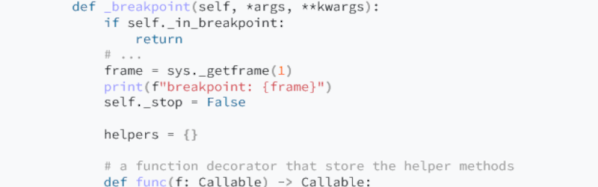

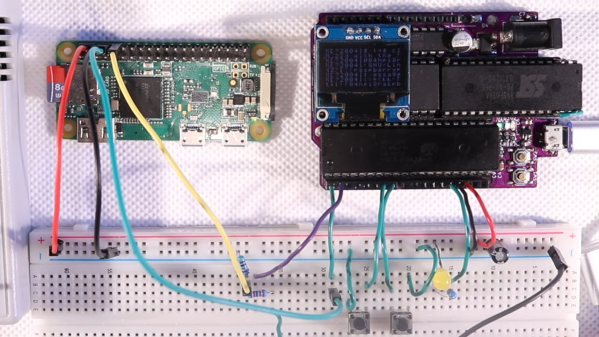

For some languages, writing a debugger can be hard — you usually use at least some system facility to get started. But as [mostlynerdness] shows, Python’s interpreter wants to help you create your own debugger, and you can follow along to see how it’s done. This is accessible because Python has a built-in debugging core that you can use and extend. Well, regular Python, that is. MicroPython has some low-level support, and while we’ve seen attempts to add more, we haven’t tried it.

Of course, you may never need to build your own debugger — most of the IDEs have already done this for you, and some of the code is, in fact, lifted from an open code base and simplified. However, understanding how the debugging plumbing works may give you a leg up if you need to create custom logic to trap an error that would be difficult to find with a generic debugger. Plus, it is just darn interesting.

Like many Python things, there are some version sensitivities. The post is in four parts, with the last two dealing with newer API changes.

We can’t promise that Python can debug your hardware, though. We always thought the C preprocessor was subject to abuse, but it turns out that Python has the same problem.