Having survived the nerve-wracking experience of presenting his project to an absurdly large crowd at this year’s SIGGRAPH, [James] is finally ready to share his method of capturing mixing fluids via optical tomography with a much larger audience: the readership of Hackaday.

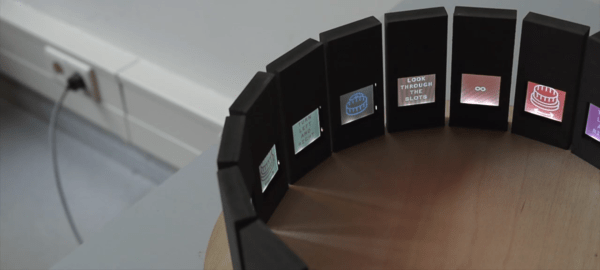

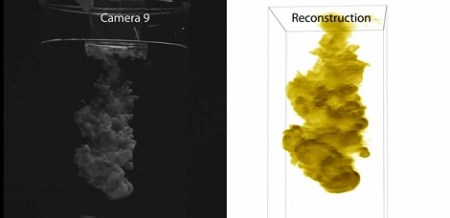

[James]’ project focuses on the problem of modeling mixing liquids from footage shot by a multi-camera setup. The hardware is fairly basic: just 16 consumer-level video cameras arranged in a semicircle around a glass beaker full of water.

When [James] injects a little dye into the water, the diffusing cloud is captured by the array of Sony camcorders. The footage from these camcorders is fed to an algorithm that selects one point in the cloud and performs a random walk to find every other point in the cloud of dye.
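
To get a feel for the random walk idea, here is a minimal Python sketch. It is not [James]’ actual algorithm, which works from the camera images; this toy version assumes the dye has already been turned into a 3D grid of density values. The function names (`make_blob`, `random_walk_cloud`), the density threshold, and the six-neighbor step rule are all assumptions made for illustration.

```python
import random

# The six axis-aligned steps a walker may take on the voxel grid (an assumption).
MOVES = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def make_blob(size=9, radius=3):
    """Build a synthetic stand-in for the dye: a solid sphere of density 1.0."""
    c = size // 2
    return [
        [
            [
                1.0 if (x - c) ** 2 + (y - c) ** 2 + (z - c) ** 2 <= radius ** 2 else 0.0
                for z in range(size)
            ]
            for y in range(size)
        ]
        for x in range(size)
    ]


def random_walk_cloud(density, seed, steps, threshold=0.5, rng=None):
    """Return the set of voxels visited by a random walk confined to the cloud.

    The walk starts at `seed` (which must lie inside the cloud) and only
    accepts steps that land on a voxel whose density is >= `threshold`.
    """
    rng = rng or random.Random(0)
    nx, ny, nz = len(density), len(density[0]), len(density[0][0])
    sx, sy, sz = seed
    if density[sx][sy][sz] < threshold:
        raise ValueError("seed point is not inside the cloud")

    current = seed
    visited = {seed}
    for _ in range(steps):
        dx, dy, dz = rng.choice(MOVES)
        x, y, z = current[0] + dx, current[1] + dy, current[2] + dz
        in_bounds = 0 <= x < nx and 0 <= y < ny and 0 <= z < nz
        # Rejected steps leave the walker where it is; it tries again next turn.
        if in_bounds and density[x][y][z] >= threshold:
            current = (x, y, z)
            visited.add(current)
    return visited


if __name__ == "__main__":
    blob = make_blob()
    found = random_walk_cloud(blob, seed=(4, 4, 4), steps=20000)
    total = sum(v >= 0.5 for plane in blob for row in plane for v in row)
    print(f"walk visited {len(found)} of {total} cloud voxels")
```

Because the walker never steps onto a voxel below the threshold, every point it collects belongs to the same connected cloud as the starting point. How much of the cloud it covers depends on how many steps you give it.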

The result of all this computation is a literal volumetric cloud, allowing [James] to render, slice, and cut the cloud of dye any way he chooses. You can see the videos produced from this very cool build after the break.
