At first we thought it was awesome, then we thought it was ridiculous, and now we’re pretty much settled on “ridiculawesome”.

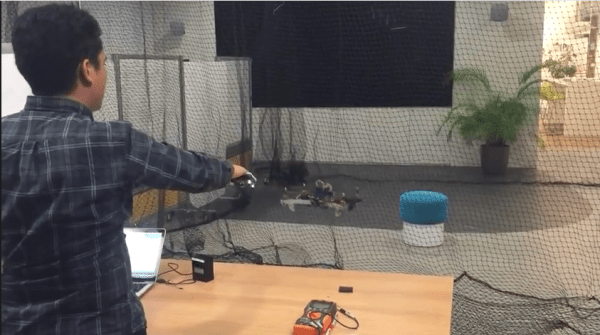

BitDrones is a prototype human-computer interface that uses tiny quadcopters as pixels in a 3D immersive display. That’s the super-cool part. “PixelDrones” have an LED on top. “ShapeDrones” have a gauzy cage that gets illuminated by color LEDs, making them into life-size color voxels. (Cool!) Finally, a “DisplayDrone” has a touchscreen mounted to it. A computer tracks each drone’s location in the room, and they work together to create a walk-in 3D “display”. So far, so awesome.

It gets even better. Because the program that commands the drones knows where each drone is, it can tell when you’ve moved a drone around in space. That’s extremely cool, and opens up the platform to new interactions. And the DisplayDrone is like a tiny flying cellphone, so you can chat hands-free with your friends who hover around your room. Check out the video embedded below the break.
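For the curious, here is a rough sketch of how that “did someone move the drone?” check could work. This is not BitDrones’ actual code: the function name, the drone IDs, and the 10 cm threshold are all made up for illustration. The idea is simply to compare where each drone was told to hover against where the tracker sees it now.

```python
import math

# Hypothetical threshold: how far (in meters) a drone must drift from its
# commanded position before we guess that a person pushed it there.
MOVE_THRESHOLD_M = 0.10


def detect_moved(commanded, tracked, threshold=MOVE_THRESHOLD_M):
    """Return the IDs of drones that are farther than `threshold` from target.

    Both arguments map a drone ID to an (x, y, z) position in meters.
    """
    moved = []
    for drone_id, target in commanded.items():
        actual = tracked.get(drone_id)
        if actual is None:
            continue  # the tracker has no fix on this drone, so skip it
        if math.dist(target, actual) > threshold:
            moved.append(drone_id)
    return moved


# Illustrative positions only, not measured data.
commanded = {"pixel-1": (0.0, 0.0, 1.0), "pixel-2": (0.5, 0.0, 1.0)}
tracked = {"pixel-1": (0.01, 0.0, 1.0), "pixel-2": (0.5, 0.3, 1.2)}
print(detect_moved(commanded, tracked))  # prints ['pixel-2']
```

A real system would presumably also have to filter out ordinary wobble and tracking noise, which a single distance threshold only roughly approximates.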
