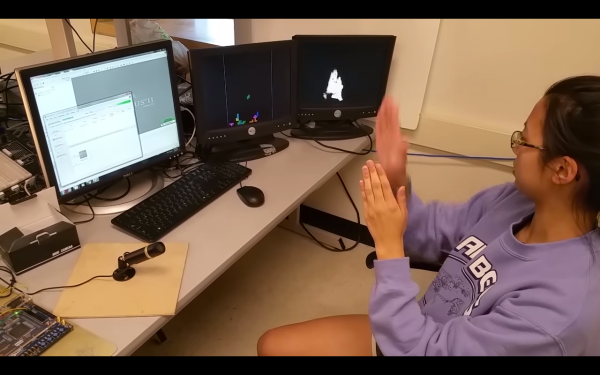

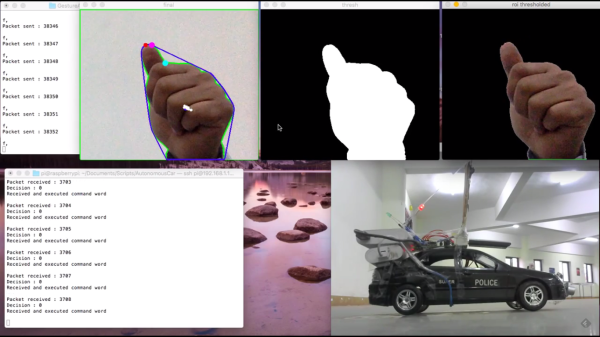

Movies love to show technology they can’t really build yet. Even in 2001: A Space Odyssey (released in 1968), for example, the computer screens were actually projected film. The tablet they used to watch the news looks like something you could pick up at Best Buy this afternoon. [CircuitDigest] saw Iron Man, and that inspired him to see if he could control his PC through gestures the way they do in that film and so many others (including Minority Report). Although he calls it “virtual reality,” we think of VR as being visually immersed, and this is really just the glove. It is still cool, though.

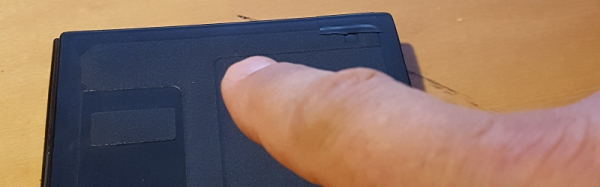

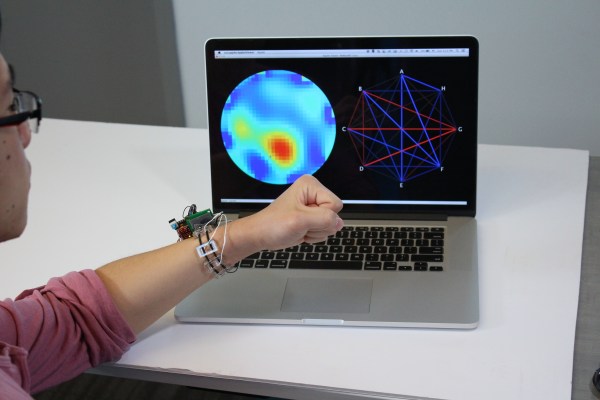

The project uses an Arduino on the glove and Processing on the PC. A webcam on the PC tracks the hand's motion, and two Hall effect sensors on the glove simulate mouse clicks. Bluetooth links the glove and the PC. You can see a video of the thing in action below.
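To make the PC side of that split a little more concrete, here is a rough sketch of the two jobs it has to do: turn a tracked hand position from the webcam into a screen position, and turn bytes arriving over the Bluetooth link into click events. This is not [CircuitDigest]'s code (the original runs in Processing), and everything specific here is an assumption for illustration: the camera and screen sizes, the horizontal mirroring, the one-byte `L`/`R` messages, and the names `cam_to_screen` and `decode_glove_bytes` are all hypothetical.

```python
# Assumed sizes; the article does not give the project's real values.
CAM_W, CAM_H = 640, 480
SCREEN_W, SCREEN_H = 1920, 1080


def cam_to_screen(x, y, cam=(CAM_W, CAM_H), screen=(SCREEN_W, SCREEN_H)):
    """Map a tracked hand position in webcam pixels to screen pixels."""
    # Clamp to the camera frame so a noisy tracker cannot push the
    # pointer off screen.
    cx = min(max(x, 0), cam[0] - 1)
    cy = min(max(y, 0), cam[1] - 1)
    # Assumption: the webcam faces the user, so the image is mirrored
    # horizontally to make the pointer follow the hand's direction.
    mirrored = (cam[0] - 1) - cx
    sx = round(mirrored * (screen[0] - 1) / (cam[0] - 1))
    sy = round(cy * (screen[1] - 1) / (cam[1] - 1))
    return sx, sy


# Hypothetical one-byte protocol: one code per Hall effect sensor.
CLICK_CODES = {ord("L"): "left_click", ord("R"): "right_click"}


def decode_glove_bytes(data: bytes):
    """Turn raw bytes from the glove into click events, skipping unknown bytes."""
    return [CLICK_CODES[b] for b in data if b in CLICK_CODES]
```

In a real setup, the bytes would come from the Bluetooth serial port and the resulting coordinates and events would be handed to whatever moves the OS pointer. Both are left out here to keep the sketch self-contained.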
