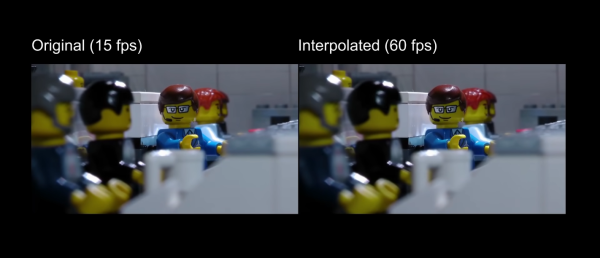

The uses of artificial intelligence and machine learning continue to expand, with one of the more recent implementations being video processing. A new method can “fill in” frames to smooth out the appearance of the video, which [LegoEddy] was able to use this in one of his animated LEGO movies with some astonishing results.

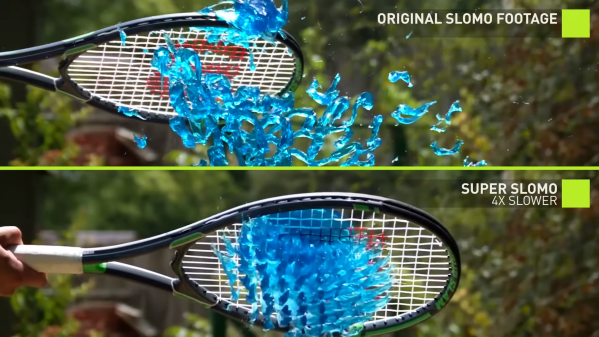

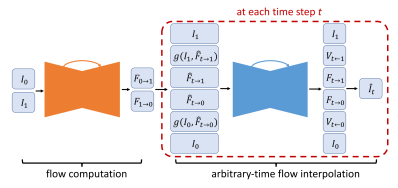

His original animation of LEGO figures and sets was created at 15 frames per second. As an animator, he notes that it’s orders of magnitude more difficult to get more frames than this with traditional methods, at least in his studio. This is where the artificial intelligence comes in. The program is able to interpolate between frames and create more frames to fill the spaces between the original. This allowed [LegoEddy] to increase his frame rate from 15 fps to 60 fps without having to actually create the additional frames.

While we’ve seen AI create art before, the improvement on traditionally produced video is a dramatic advancement. Especially since the AI is aware of depth and preserves information about the distance of objects from the camera. The software is also free, runs on any computer with an appropriate graphics card, and is available on GitHub.