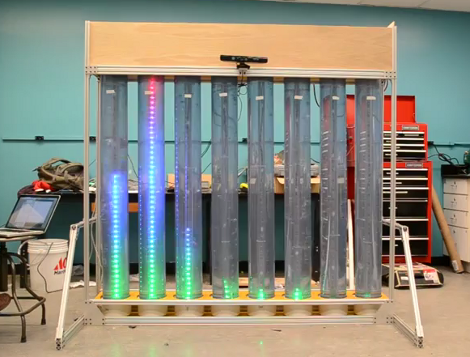

This is the senior design project for a group at the University of Vermont. It’s a wet, bubbly, blinky, interactive thing. Each column is a clear tube filled with water, with a string of individually addressable RGB LEDs suspended in the center. In idle mode, the lights scroll through a series of patterns while the water is filled with bubbles to add some depth to the presentation. There is also a VU meter function, as seen here and during the Portal theme song that ends the video demo after the break.
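We don't have the team's code, but a VU meter mode like this one generally boils down to turning an audio level into a number of lit LEDs per column. Here's a minimal sketch of that idea in Python. Everything in it is our assumption rather than the project's implementation: the function name `vu_levels`, the one-band-per-column layout, samples normalized to the range -1.0 to 1.0, and the -40 dB floor.

```python
import math


def vu_levels(samples_per_band, leds_per_column, floor_db=-40.0):
    """Return how many LEDs to light in each column.

    samples_per_band: one list of audio samples (assumed -1.0..1.0) per column.
    leds_per_column:  number of LEDs in each tube (hypothetical parameter).
    floor_db:         level at or below which a column stays dark (assumed).
    """
    lit = []
    for samples in samples_per_band:
        # RMS gives a loudness estimate for this band.
        if samples:
            rms = math.sqrt(sum(s * s for s in samples) / len(samples))
        else:
            rms = 0.0

        # Convert to decibels relative to full scale; silence maps to the floor.
        db = 20.0 * math.log10(rms) if rms > 0 else floor_db

        # Scale floor_db..0 dB onto 0..1, clamp, then onto the LED count.
        fraction = (db - floor_db) / (0.0 - floor_db)
        fraction = min(1.0, max(0.0, fraction))
        lit.append(round(fraction * leds_per_column))
    return lit
```

The decibel scaling is a design choice: perceived loudness is roughly logarithmic, so a linear mapping tends to leave the columns nearly dark for most music.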

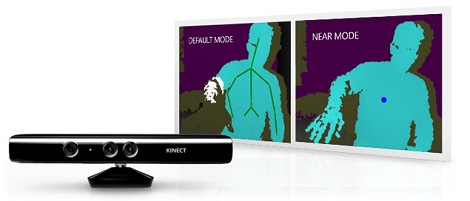

A Teensy++ board is used to address the display. It’s set up to receive serial commands from a Processing script which is responsible for generating the animations. At the top of the frame you can see there’s a Kinect sensor. When someone stands in the standard pose (we think it should be called the Kinect mug shot), the installation automatically switches over to body control. We could see this thing making its way into a long airport terminal hallway, following travelers along their trek from one terminal to the next.
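The post doesn't document the serial protocol, so here is only a rough sketch of how a host program might pack one frame of animation for a microcontroller to unpack. It's written in Python rather than Processing, and the details are all assumptions on our part: the `pack_frame` name, the `0xFF` start byte, and the column-by-column RGB byte order are hypothetical.

```python
START_BYTE = 0xFF  # hypothetical frame marker, not taken from the project


def pack_frame(columns):
    """Pack one animation frame into bytes for a serial link.

    columns: list of columns, each a list of (r, g, b) tuples, one per LED.
    Color values are clamped to 0..254 so that START_BYTE can only ever
    appear at the start of a frame, letting the receiver resynchronize.
    """
    payload = bytearray([START_BYTE])
    for column in columns:
        for r, g, b in column:
            for channel in (r, g, b):
                payload.append(min(254, max(0, int(channel))))
    return bytes(payload)
```

The resulting bytes could then be written to the serial port once per frame, for example with pySerial's `Serial.write()`. Reserving a byte value for the frame marker costs one brightness step but means a dropped byte only corrupts a single frame.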