A zoetrope is a device that contains a disk filled with a series of images that make up an animation. A couple of different methods can be used to trick the eye into seeing a single animated image. In the past this was done by placing the images inside a cylinder with slits at regular intervals. When the cylinder is spun quickly, the slits appear to be stationary, with the images creating the animation. But the same effect can be accomplished using a strobe light.
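For the strobe method, the light has to flash once each time the next image rotates into the previous one's position. Here is a rough sketch of that arithmetic. The function name and the example numbers (12 images, 60 RPM) are our own illustration and are not taken from this build.

```python
def strobe_hz(rpm: float, frames: int) -> float:
    """Flash rate (Hz) that lights the disk once per frame step.

    rpm    -- disk speed in revolutions per minute (assumed value)
    frames -- number of evenly spaced images on the disk (assumed value)
    """
    if rpm <= 0 or frames <= 0:
        raise ValueError("rpm and frames must be positive")
    revs_per_second = rpm / 60.0
    # One flash per image per revolution keeps every image
    # landing in the same spot each time the light fires.
    return revs_per_second * frames


# Hypothetical disk: 12 images spinning at 60 RPM.
print(strobe_hz(60, 12))
```

Flashing slightly faster or slower than this rate should make the whole animation appear to drift around the disk instead of playing in place.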

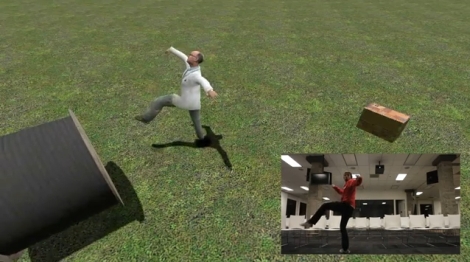

The disk you see above uses the strobe method, but its design and construction are what caught our eye. The animated shapes were captured with a Kinect and isolated using Processing. [Greg Borenstein] took a depth movie recorded while someone danced in front of a Kinect. He ran it through a Processing sketch and was able to isolate a set of slides that were then turned into the objects seen above using a laser cutter.
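We don't know exactly how the Processing sketch works, but one plausible way to pull a dancer out of a depth frame is to keep only the pixels that fall inside a chosen distance band, then pick evenly spaced frames from the recording. The sketch below is written in Python, not Processing, and it is only a guess at the general idea. The function names, the millimeter units, and the sample values are all hypothetical.

```python
def isolate_figure(depth_mm, near_mm, far_mm):
    """Binary mask: 1 where a depth reading lies in [near_mm, far_mm].

    depth_mm is a list of rows of depth readings. The band limits are
    assumptions; a real capture would need them tuned to the scene.
    """
    return [[1 if near_mm <= d <= far_mm else 0 for d in row]
            for row in depth_mm]


def pick_frames(total_frames, wanted):
    """Indices of `wanted` evenly spaced frames out of `total_frames`."""
    if wanted <= 0 or wanted > total_frames:
        raise ValueError("wanted must be between 1 and total_frames")
    step = total_frames / wanted
    return [int(i * step) for i in range(wanted)]


# Tiny made-up depth frame: 0 stands in for "no reading".
frame = [[0, 900, 1500],
         [1200, 3000, 1000]]
print(isolate_figure(frame, 800, 1600))
print(pick_frames(120, 12))
```

Each mask would then still need to be traced into an outline before a laser cutter could use it, a step this sketch leaves out.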

You can watch a video of this particular zoetrope after the break. But we’ve also embedded the Pixar 3D zoetrope clip which, although unrelated to this hack, is extremely interesting. Don’t have a laser cutter to try this out yourself? You could always build a zoetrope that uses a printed disk.
