Join us on Wednesday, February 19 at noon Pacific for the Open-Source Neuroscience Hardware Hack Chat with Dr. Alexxai Kravitz and Dr. Mark Laubach!

There was a time when our planet still held mysteries, and pith-helmeted or fur-wrapped explorers could sally forth and boldly explore strange places for what they were convinced was the first time. But with every mountain climbed, every depth plunged, and every desert crossed, fewer and fewer places remained to be explored, until today there’s really nothing left to discover.

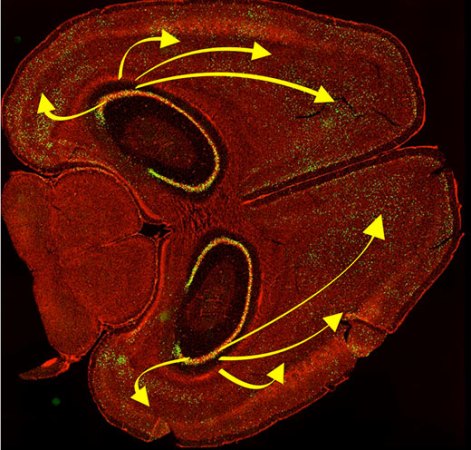

Unless, of course, you look inward to the most wonderfully complex structure ever found: the brain. In humans, the 86 billion neurons contained within our skulls make trillions of connections with each other, weaving the unfathomably intricate pattern of electrochemical circuits that make you, you. Wonders abound there, and anyone seeing something new in the space between our ears really is laying eyes on it for the first time.

But the brain is a difficult place to explore, and specialized tools are needed to learn its secrets. Lex Kravitz, from Washington University, and Mark Laubach, from American University, are neuroscientists who’ve learned that sometimes you have to invent the tools of the trade on the fly. While exploring topics as wide-ranging as obesity, addiction, executive control, and decision making, they’ve come up with everything from simple jigs for brain sectioning to full feeding systems for rodent cages. They incorporate microcontrollers, IoT, and tons of 3D printing to build what they need to get the job done, and they share these designs on OpenBehavior, a collaborative space for the open-source neuroscience community.

Join us for the Open-Source Neuroscience Hardware Hack Chat this week where we’ll discuss the exploration of the real final frontier, and find out what it takes to invent the tools before you get to use them.

Our Hack Chats are live community events in the Hackaday.io Hack Chat group messaging. This week we’ll be sitting down on Wednesday, February 19 at 12:00 PM Pacific time. If time zones have got you down, we have a handy time zone converter.

Click that speech bubble to the right, and you’ll be taken directly to the Hack Chat group on Hackaday.io. You don’t have to wait until Wednesday; join whenever you want and you can see what the community is talking about.

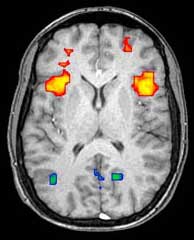

The current research tool du jour in the field of neuroscience and psychology is the fMRI, or functional magnetic resonance imaging. It’s basically the same as the MRI machine found in any well-equipped hospital, but with a key difference: it can detect very small variations in blood oxygen levels, and thus areas of activity in the brain. Why is this important? For researchers, finding out what area of the brain is active in response to certain stimuli is a ticket to Tenure Town with stops at Publicationton and Grantville.

The project featured in this post is