[Peter]’s homebuilt ultralight is actually flying now and not in ground effect, much to the chagrin of YouTube commenters. [Peter Sripol] built a Part 103 ultralight (no license required, any moron can jump in one and fly) in his basement out of foam board from Lowe’s. Now, he’s actually doing flight testing, and he managed to build a good plane. Someone gifted him a ballistic parachute so the GoFundMe for the parachute is unneeded right now, but this gift parachute is a bit too big for the airframe. Not a problem; he’ll just sell it and buy the smaller model.

Last week, rumors circulated of Broadcom acquiring Qualcomm for the sum of One… Hundred… Billion Dollars. It looks like that’s not happening now. Qualcomm rejected a deal for $103B, saying the offer ‘undervalued the company and would face regulatory hurdles.’ Does this mean the deal is off? No, there are 80s guys out there who put the dollar signs in Busine$$, and there’s politicking going on.

A few links posts ago, I pointed out there were some very fancy LED panels available on eBay for very cheap. The Barco NX-4 LED panels are 32×36 panels of RGB LEDs, driven very quickly by some FPGA goodness. The reverse engineering of these panels is well underway, and [Ian] and his team almost have everything figured out. Glad I got my ten panels…

TechShop is gone. With a heavy heart, we bid adieu to a business with a whole bunch of tools anyone could use. This leaves a lot of people with TechShop memberships out in the cold, and to ease the pain, Glowforge, Inventables, Formlabs, and littleBits are offering some discounts so you can build a hackerspace in your garage or basement. In other TechShop news, the question on everyone’s mind is, ‘what are they going to do with all the machines?’ Nobody knows, but the smart money is on a liquidation auction. Yes, in a few months, you’ll probably be renting a U-Haul and driving to TechShop one last time.

3D Hubs has come out with a 3D Printing Handbook. There’s a lot in the world of filament-based 3D printing that isn’t written down. It’s all based on experience, passed on from person to person. How much of an overhang can you really get away with? How do you orient a part correctly? God damned stringing. How do you design a friction-fit between two parts? All of these techniques are learned by experience. Is it possible to put this knowledge in a book? I have no idea, so look for that review in a week or two.

Like many of us, I’m sure, [Adam] is a collector of vintage computers. Instead of letting them sit in the attic, he’s taking gorgeous pictures of them. The collection includes most of the big-time Atari and Commodore 8-bitters, your requisite Apples, all of the case designs of the all-in-one Macs, some Pentium-era PCs, and even a few of the post-97 Macs. Is that Bondi Blue? Bonus points: all of these images are free to use with attribution.

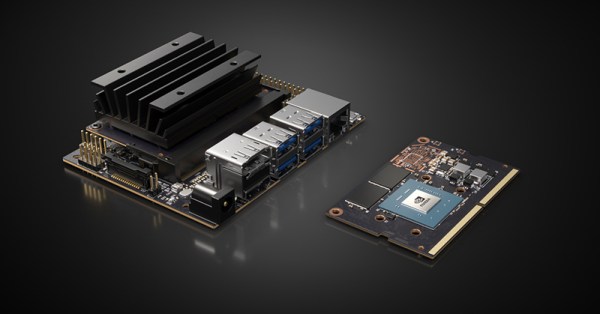

Nvidia is blowing out their TX1 development kits. You can grab one for $200. What’s the TX1? It’s a really, really fast ARM computer stuffed into a heat sink that’s about the size of a deck of cards. You can attach it to a Mini-ITX breakout board that provides you with Ethernet, WiFi, and a bunch of other goodies. It’s a step above the Raspberry Pi for sure and is capable enough to run as a normal desktop computer.