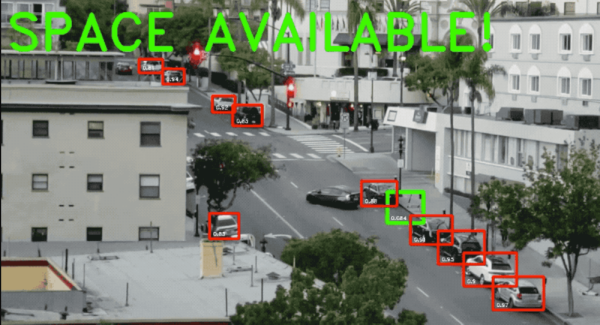

If you live in a bustling city and have anyone over who drives, it can be difficult for them to find parking. Maybe you have an assigned space, but they’re resigned to circling the block with an eagle eye. With those friends in mind, [Adam Geitgey] wrote a Python script that takes the video feed from a webcam and analyzes it frame by frame to figure out when a street parking space opens up. When the glorious moment arrives, he gets a text message via Twilio with a picture of the void.
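The frame-by-frame idea can be sketched without a camera or a model. The sketch below is not [Adam]’s code. It assumes a hypothetical `space_is_free` callback that stands in for the detection step and a hypothetical `notify` callback that stands in for the Twilio text. Waiting for several consecutive "free" frames before alerting is also an assumption, meant to stop a single frame where the detector misses a car from triggering a message.

```python
from typing import Callable, Iterable, Optional


def watch_for_open_space(
    frames: Iterable[object],
    space_is_free: Callable[[object], bool],
    notify: Callable[[object], None],
    frames_required: int = 5,
) -> Optional[int]:
    """Scan frames in order and call notify() once a space has stayed free.

    Hypothetical helper: `space_is_free` stands in for per-frame detection,
    `notify` for whatever sends the alert. Returns the index of the frame
    that triggered the alert, or None if the space never opened up.
    """
    free_streak = 0
    for index, frame in enumerate(frames):
        if space_is_free(frame):
            free_streak += 1
        else:
            # Something was detected in the space; start counting again.
            free_streak = 0
        if free_streak >= frames_required:
            notify(frame)
            return index
    return None


if __name__ == "__main__":
    # Fake "frames": True means the detector saw an empty space.
    fake_frames = [False, True, False, True, True, True, True, True, False]
    sent = []
    hit = watch_for_open_space(
        fake_frames,
        space_is_free=lambda frame: frame,
        notify=sent.append,
        frames_required=5,
    )
    print(hit, len(sent))  # prints "7 1"
```

In a real setup, `frames` would come from the webcam and `notify` would attach the triggering frame to the outgoing message.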

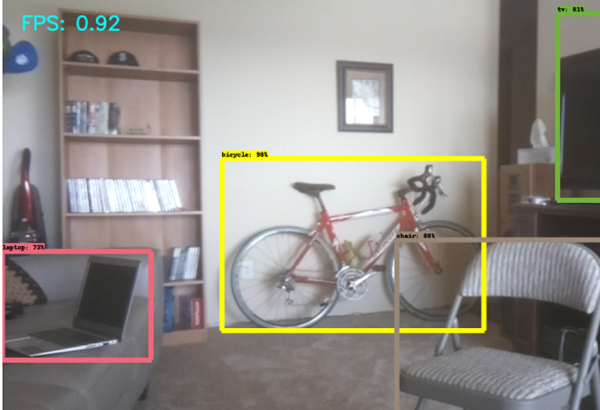

It sounds complicated, but much of the work has already been done. Cars are a popular target for machine learning, so large data sets of cars already exist. [Adam] didn’t have to train a neural network, either; he found a pre-trained Mask R-CNN model that already recognizes 80 common kinds of objects, including people, animals, and cars.
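Because the pre-trained model reports all 80 object types, the first job is to keep only the vehicles. The sketch below is a minimal filter under stated assumptions. It assumes the class IDs follow the common COCO ordering with index 0 reserved for background, so car, bus, and truck are 3, 6, and 8. It also assumes boxes arrive as `(y1, x1, y2, x2)` pixel tuples alongside per-detection confidence scores. Check both against the class list of whichever model you use. The `vehicle_boxes` helper and its `min_score` cutoff are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (y1, x1, y2, x2) in pixels

# Assumed COCO class IDs with 0 reserved for background.
# Verify against your model's class list before relying on them.
VEHICLE_CLASS_IDS = {3, 6, 8}  # car, bus, truck


def vehicle_boxes(
    rois: Sequence[Box],
    class_ids: Sequence[int],
    scores: Sequence[float],
    min_score: float = 0.5,
) -> List[Box]:
    """Keep only confident detections of vehicle classes.

    The three sequences are parallel: entry i of each describes one detection.
    """
    kept = []
    for box, class_id, score in zip(rois, class_ids, scores):
        if class_id in VEHICLE_CLASS_IDS and score >= min_score:
            kept.append(tuple(box))
    return kept
```

Trucks and buses are included because, from a parking point of view, they take up a space just as well as a car does.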

The model gives a lot of useful info, including a bounding box for each car in pixel coordinates. Since the boxes overlap, there needs to be a way to determine whether there’s really a car in a space or just the bumpers of the cars on either side. [Adam] used intersection over union (IoU) to do this, which is conveniently available as a function in the Mask R-CNN model’s library. The function returns an overlap score for each pair of boxes, so it was just a matter of ignoring boxes that barely overlap a space.
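Intersection over union divides the area two boxes share by the total area they cover together. The score runs from 0 when the boxes don’t touch to 1 when they are identical. Here is a minimal pure-Python sketch written from that definition, not taken from the Mask R-CNN library. The `(y1, x1, y2, x2)` box order, the hypothetical `space_is_occupied` helper, and the 0.15 threshold are assumptions for illustration. Tune the threshold for your own camera angle.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (y1, x1, y2, x2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes, from 0.0 to 1.0."""
    ay1, ax1, ay2, ax2 = a
    by1, bx1, by2, bx2 = b
    # Overlapping rectangle; height or width clamps to zero if boxes don't meet.
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    intersection = inter_h * inter_w
    area_a = (ay2 - ay1) * (ax2 - ax1)
    area_b = (by2 - by1) * (bx2 - bx1)
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def space_is_occupied(space: Box, cars: Sequence[Box], threshold: float = 0.15) -> bool:
    """Treat a space as taken only if some car box overlaps it substantially.

    A neighbour's bumper poking into the space yields a low IoU and is ignored.
    """
    return any(iou(space, car) >= threshold for car in cars)
```

A box that only clips one edge of the space scores low and is ignored, while a car actually parked in the space scores high.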

[Adam] purposely made the script adaptable. A few changes here and there, and you could be picking up tennis balls with a robotic collector or analyzing human migration patterns on your block in no time. Or change it up and detect all the cars that run the stop sign by your house.

Thanks for the tip, [foamyguy].