At first blush, it might seem like projects that make extensive use of computer vision or machine learning would need to be based on powerful computing platforms with plenty of clock cycles and memory to handle this type of application. While there is some truth to this, as the field progresses it becomes possible to experiment with these tools on low-power devices as well. Take this OpenCV project which is built entirely on an ESP32 for example.

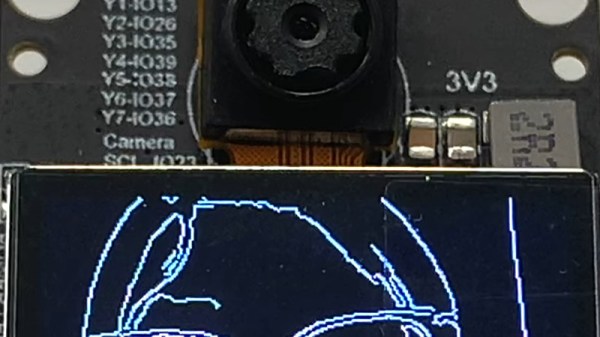

With that being said, there are some modifications that need to be made to the ESP32 in order to use OpenCV in any meaningful way. The most important of these is the use of the ESP32-DOWDQ6 module which increases the available memory of the ESP32 to allow it to make better use of camera functions. Even then, the ESP32 can’t run the entire OpenCV application, so a shrunken version of OpenCV is required before the device can run it natively. Once those two obstacles are out of the way, though, doing things like edge detection, as this project demonstrates, are well in the realm of possibility.

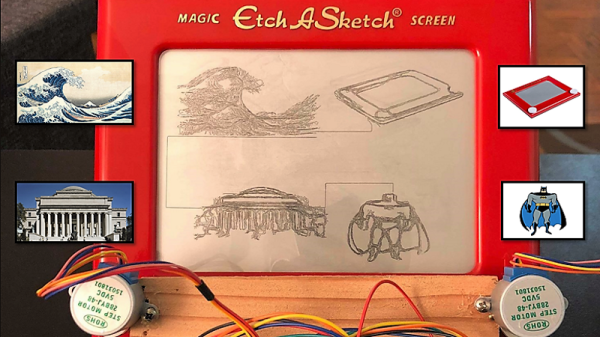

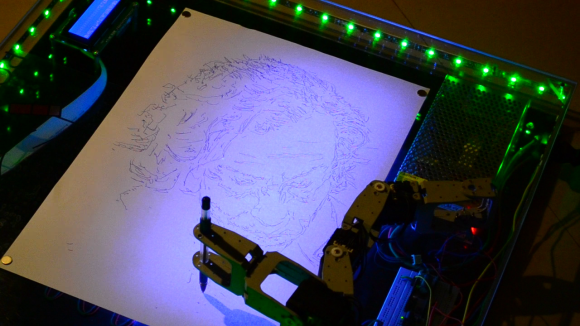

If running OpenCV on something as small as an ESP32 is possible, it is even easier to run on something orders of magnitude more powerful and yet still inexpensive, such as the Raspberry Pi. While the project’s code is available on its GitHub page for those interested, there are plenty of other OpenCV projects that we have featured on more powerful platforms as well, like this clock which falls off of the wall whenever someone looks at it.