You heard it here first: dash cams are going to be the next must-have item for your daily driver. They're already reaching market saturation in some parts of the world but are still fairly uncommon in North America, and we predict that car makers will soon latch onto the trend and start equipping cars with dash cams as standard equipment. And you can just bet that whatever watered-down, overpriced feature set they come up with will be sure to disappoint, so you might want to think about building your own Raspberry Pi dash cam with an accelerometer and lots of LEDs.

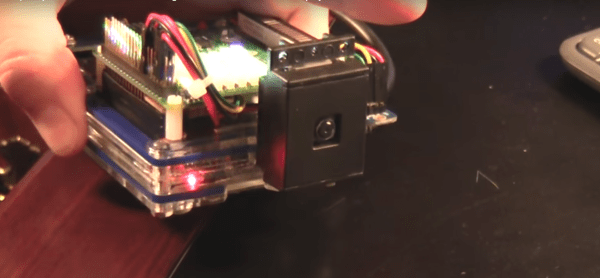

Still very much in the prototyping phase, [CFLanger]’s project is at its heart a dash cam, but it looks like he wants to go far beyond that. Raspivid and a Pi NoIR camera take care of the video streaming, but the addition of a Pi SenseHAT gives [CFLanger] a bunch of options for sensing and recording the car’s environment. Not content with the SenseHAT’s onboard accelerometer, he added an ADXL345 to the sensor suite. The 64-pixel LED display is just for fun (it displays the pitch and roll of the platform), and a yet-to-be-implemented bar-graph display will show acceleration along the X-axis. He figures the whole thing is good for a couple of days of video, but we hope he adds audio capture and perhaps ECU data from an OBDII-Bluetooth adapter.
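For the curious, here's a rough idea of how pitch and roll can be pulled out of a three-axis accelerometer reading and squeezed onto an 8×8 grid. To be clear, this is our own minimal sketch and not [CFLanger]’s code: the function names, the axis convention, and the ±45° display range are all assumptions made for illustration, and the math only holds when the car isn't accelerating, since it treats gravity as the only force on the sensor.

```python
import math

def pitch_roll_degrees(ax, ay, az):
    """Estimate pitch and roll in degrees from one accelerometer sample.

    ax, ay, az are accelerations in g. Assumed axis convention (hypothetical):
    Z points up through the board when it sits flat, X points forward.
    """
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll

def tilt_to_index(angle_deg, max_angle=45.0, size=8):
    """Map a tilt angle onto a row/column index of a size x size LED grid.

    Angles outside +/- max_angle are clamped to the edge of the grid.
    The 45 degree range is an arbitrary choice for this sketch.
    """
    clamped = max(-max_angle, min(max_angle, angle_deg))
    fraction = (clamped + max_angle) / (2 * max_angle)
    return int(fraction * (size - 1))

if __name__ == "__main__":
    # A board lying flat reads roughly 1 g on Z and nothing on X or Y.
    pitch, roll = pitch_roll_degrees(0.0, 0.0, 1.0)
    print(tilt_to_index(pitch), tilt_to_index(roll))
```

Feeding the two indices to whatever drives the LED matrix would light one pixel that drifts around as the platform tilts, a bit like a bubble level.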

We’ve seen surprisingly few DIY dash cams on Hackaday, at least so far. There has been a dash cam teardown and retasking, and there are plenty of dashboard computer builds, though. Seems like most hackers want that DIY self-driving car first.


For the display, he’s using eight big vintage Burroughs B7971 Nixie Tubes. These aren’t easy to source, and current prices hover around $100 each if you can find them. The 170V DC needed to run each tube comes from a set of six
