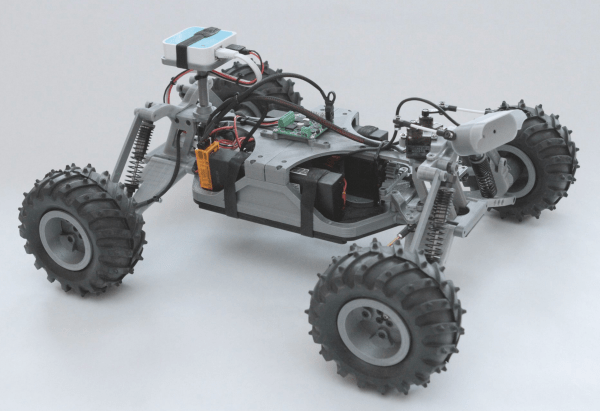

Robots are great in general, and [taylor] is currently working on something a bit unusual: a 3D printed explorer robot named Rover, meant to autonomously follow outdoor trails. Rover is still under development, and [taylor] recently completed the drive system and body designs, all shared via Onshape.

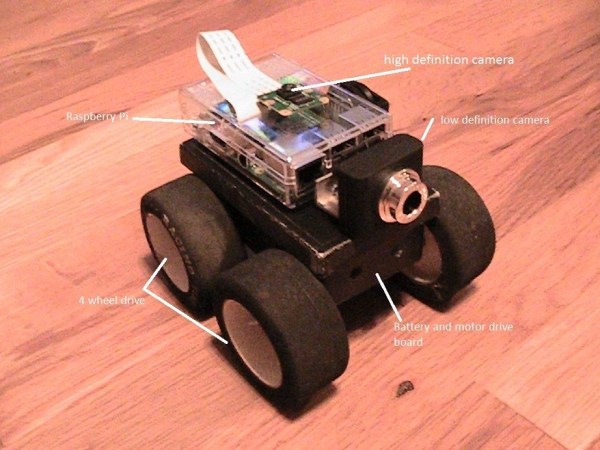

Rover has 3D printed 4.3:1 reduction planetary gearboxes embedded into each wheel, with off-the-shelf bearings and brushless motors. A Raspberry Pi sits in the driver’s seat, and the goal is to use a version of NVIDIA’s TrailNet framework for GPS-free navigation of paths. In the end, [taylor] hopes to have a robotic “trail buddy” that can be made with off-the-shelf components and 3D printed parts.
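For a rough feel of what a 4.3:1 reduction does at the wheel, here is a back-of-the-envelope sketch in Python. Only the 4.3:1 ratio comes from the project; the motor speed, motor torque, and wheel diameter are made-up placeholder numbers, and the math assumes an ideal, lossless gearbox, which a 3D printed one will not be.

```python
import math

REDUCTION = 4.3  # gearbox ratio from the project; everything else below is hypothetical


def wheel_output(motor_rpm, motor_torque_nm, wheel_diameter_m, reduction=REDUCTION):
    """Estimate wheel speed and torque for an ideal (lossless) reduction.

    Returns (wheel_rpm, wheel_torque_nm, ground_speed_m_per_s).
    """
    wheel_rpm = motor_rpm / reduction              # speed drops by the ratio
    wheel_torque_nm = motor_torque_nm * reduction  # torque rises by the ratio
    # One wheel revolution covers one circumference of ground.
    ground_speed = wheel_rpm / 60.0 * math.pi * wheel_diameter_m
    return wheel_rpm, wheel_torque_nm, ground_speed


if __name__ == "__main__":
    # Placeholder inputs, not measurements from Rover.
    rpm, torque, speed = wheel_output(
        motor_rpm=430.0, motor_torque_nm=0.5, wheel_diameter_m=0.15
    )
    print(f"wheel: {rpm:.1f} rpm, {torque:.2f} N*m, {speed:.2f} m/s")
```

Swapping in real motor figures and the actual wheel diameter would give a first estimate of Rover's top speed, with friction in the printed gears eating into the torque number.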

Moving the motors and gearboxes into the wheels themselves leaves the robot with a very compact main body, and it’s more than a bit strange to see a wheel spinning in the opposite direction to its own hub. Check out the video showcasing the latest development of the wheels, embedded below.
